Doom Debates

Author: Liron Shapira

© Liron Shapira

123 Episodes

Devin Elliot is a former pro snowboarder turned software engineer who has logged thousands of hours building AI systems. His P(Doom) is a flat ⚫. He argues that worrying about an AI takeover is as irrational as fearing your car will sprout wings and fly away.

We spar over the hard limits of current models: Devin insists LLMs are hitting a wall, relying entirely on external software “wrappers” to feign intelligence. I push back, arguing that raw models are already demonstrating native reasoning and algorithmic capabilities.

Devin also argues for decentralization by claiming that nuclear proliferation is safer than centralized control.

We end on a massive timeline split: I see superintelligence in a decade, while he believes we’re a thousand years away from being able to “grow” computers that are truly intelligent.

Timestamps
00:00:00 Episode Preview
00:01:03 Intro: Snowboarder to Coder
00:03:30 "I Do Not Have a P(Doom)"
00:06:47 Nuclear Proliferation & Centralized Control
00:10:11 The "Spotify Quality" House Analogy
00:17:15 Ideal Geopolitics: Decentralized Power
00:25:22 Why AI Can't "Fly Away"
00:28:20 The Long Addition Test: Native or Tool?
00:38:26 Is Non-Determinism a Feature or a Bug?
00:52:01 The Impossibility of Mind Uploading
00:57:46 "Growing" Computers from Cells
01:02:52 Timelines: 10 Years vs. 1,000 Years
01:11:40 "Plastic Bag Ghosts" & Builder Intuition
01:13:17 Summary of the Debate
01:15:30 Closing Thoughts

Links
Devin’s Twitter — https://x.com/devinjelliot

---

Doom Debates’ Mission is to raise mainstream awareness of imminent extinction from AGI and build the social infrastructure for high-quality debate.

Support the mission by subscribing to my Substack at DoomDebates.com and to youtube.com/@DoomDebates, or to really take things to the next level: Donate 🙏

Get full access to Doom Debates at lironshapira.substack.com/subscribe

Dr. Michael Timothy Bennett, Ph.D., is an award-winning young researcher who has developed a new formal framework for understanding intelligence. He has a TINY P(Doom) because he claims superintelligence will be resource-constrained and tend toward cooperation.

In this lively debate, I stress-test Michael’s framework and debate whether its theorized constraints will actually hold back superintelligent AI.

Timestamps
* 00:00 Trailer
* 01:41 Introducing Michael Timothy Bennett
* 04:33 What’s Your P(Doom)?™
* 10:51 Michael’s Thesis on Intelligence: “Abstraction Layers”, “Adaptation”, “Resource Efficiency”
* 25:36 Debate: Is Einstein Smarter Than a Rock?
* 39:07 “Embodiment”: Michael’s Unconventional Computation Theory vs Standard Computation
* 48:28 “W-Maxing”: Michael’s Intelligence Framework vs. a Goal-Oriented Framework
* 59:47 Debating AI Doom
* 1:09:49 Debating Instrumental Convergence
* 1:24:00 Where Do You Get Off The Doom Train™ — Identifying The Cruxes of Disagreement
* 1:44:13 Debating AGI Timelines
* 1:49:10 Final Recap

Links
Michael’s website — https://michaeltimothybennett.com
Michael’s Twitter — https://x.com/MiTiBennett
Michael’s latest paper, “How To Build Conscious Machines” — https://osf.io/preprints/thesiscommons/wehmg_v1?view_only

My guest today achieved something EXTREMELY rare and impressive: coming onto my show with an AI optimist position, then admitting he hadn’t thought of my counterarguments before, and updating his beliefs in real time! Also, he won the 2013 Nobel Prize in Chemistry for his work in computational biology.

I’m thrilled that Prof. Levitt understands the value of raising awareness about imminent extinction risk from superintelligent AI, and the value of debate as a tool to uncover the truth — the dual missions of Doom Debates!

Timestamps
0:00 — Trailer
1:18 — Introducing Michael Levitt
4:20 — The Evolution of Computing and AI
12:42 — Measuring Intelligence: Humans vs. AI
23:11 — The AI Doom Argument: Steering the Future
25:01 — Optimism, Pessimism, and Other Existential Risks
34:15 — What’s Your P(Doom)™
36:16 — Warning Shots and Global Regulation
55:28 — Comparing AI Risk to Pandemics and Nuclear War
1:01:49 — Wrap-Up
1:06:11 — Outro + New AI safety resource

Show Notes
Michael Levitt’s Twitter — https://x.com/MLevitt_NP2013

Michael Ellsberg, son of the legendary Pentagon Papers leaker Daniel Ellsberg, joins me to discuss the chilling parallels between his father’s nuclear war warnings and today’s race to AGI.

We discuss Michael’s 99% probability of doom, his personal experience being “obsoleted” by AI, and the urgent moral duty for insiders to blow the whistle on AI’s outsize risks.

Timestamps
0:00 Intro
1:29 Introducing Michael Ellsberg, His Father Daniel Ellsberg, and The Pentagon Papers
5:49 Vietnam War Parallels to AI: Lies and Escalation
25:23 The Doomsday Machine & Nuclear Insanity
48:49 Mutually Assured Destruction vs. Superintelligence Risk
55:10 Evolutionary Dynamics: Replicators and the End of the “Dream Time”
1:10:17 What’s Your P(doom)?™
1:14:49 Debating P(Doom) Disagreements
1:26:18 AI Unemployment Doom
1:39:14 Doom Psychology: How to Cope with Existential Risk
1:50:56 The “Joyless Singularity”: Aligned AI Might Still Freeze Humanity
2:09:00 A Call to Action for AI Insiders

Show Notes:
Michael Ellsberg’s website — https://www.ellsberg.com/
Michael’s Twitter — https://x.com/MichaelEllsberg
Daniel Ellsberg’s website — https://www.ellsberg.net/
The upcoming book, “Truth and Consequence” — https://geni.us/truthandconsequence
Michael’s AI-related substack “Mammalian Wetware” — https://mammalianwetware.substack.com/
Daniel’s debate with Bill Kristol in the run-up to the Iraq war — https://www.youtube.com/watch?v=HyvsDR3xnAg

Today's Debate: Should we ban the development of artificial superintelligence until scientists agree it is safe and controllable?

Arguing FOR banning superintelligence until there’s a scientific consensus that it’ll be done safely and controllably and with strong public buy-in: Max Tegmark. He is an MIT professor, bestselling author, and co-founder of the Future of Life Institute whose research has focused on artificial intelligence for the past 8 years.

Arguing AGAINST banning superintelligent AI development: Dean Ball. He is a Senior Fellow at the Foundation for American Innovation who served as a Senior Policy Advisor at the White House Office of Science and Technology Policy under President Trump, where he helped craft America’s AI Action Plan.

Two of the leading voices on AI policy engaged in high-quality, high-stakes debate for the benefit of the public!

This is why I got into the podcast game — because I believe debate is an essential tool for humanity to reckon with the creation of superhuman thinking machines.

Timestamps
0:00 - Episode Preview
1:41 - Introducing The Debate
3:38 - Max Tegmark’s Opening Statement
5:20 - Dean Ball’s Opening Statement
9:01 - Designing an “FDA for AI” and Safety Standards
21:10 - Liability, Tail Risk, and Biosecurity
29:11 - Incremental Regulation, Timelines, and AI Capabilities
54:01 - Max’s Nightmare Scenario
57:36 - The Risks of Recursive Self‑Improvement
1:08:24 - What’s Your P(Doom)?™
1:13:42 - National Security, China, and the AI Race
1:32:35 - Closing Statements
1:44:00 - Post‑Debate Recap and Call to Action

Show Notes
Statement on Superintelligence released by Max’s organization, the Future of Life Institute — https://superintelligence-statement.org/
Dean’s reaction to the Statement on Superintelligence — https://x.com/deanwball/status/1980975802570174831
America’s AI Action Plan — https://www.whitehouse.gov/articles/2025/07/white-house-unveils-americas-ai-action-plan/
“A Definition of AGI” by Dan Hendrycks, Max Tegmark, et al. — https://www.agidefinition.ai/
Max Tegmark’s Twitter — https://x.com/tegmark
Dean Ball’s Twitter — https://x.com/deanwball

Max Harms and Jeremy Gillen are current and former MIRI researchers who both see superintelligent AI as an imminent extinction threat.

But they disagree on whether it’s worthwhile to try to aim for obedient, “corrigible” AI as a singular target for current alignment efforts.

Max thinks corrigibility is the most plausible path to build ASI without losing control and dying, while Jeremy is skeptical that this research path will yield better superintelligent AI behavior on a sufficiently early try.

By listening to this debate, you’ll find out if AI corrigibility is a relatively promising effort that might prevent imminent human extinction, or an over-optimistic pipe dream.

Timestamps
0:00 — Episode Preview
1:18 — Debate Kickoff
3:22 — What is Corrigibility?
9:57 — Why Corrigibility Matters
11:41 — What’s Your P(Doom)™
16:10 — Max’s Case for Corrigibility
19:28 — Jeremy’s Case Against Corrigibility
21:57 — Max’s Mainline AI Scenario
28:51 — 4 Strategies: Alignment, Control, Corrigibility, Don’t Build It
37:00 — Corrigibility vs HHH (“Helpful, Harmless, Honest”)
44:43 — Asimov’s 3 Laws of Robotics
52:05 — Is Corrigibility a Coherent Concept?
1:03:32 — Corrigibility vs Shutdown-ability
1:09:59 — CAST: Corrigibility as Singular Target, Near Misses, Iterations
1:20:18 — Debating if Max is Over-Optimistic
1:34:06 — Debating if Corrigibility is the Best Target
1:38:57 — Would Max Work for Anthropic?
1:41:26 — Max’s Modest Hopes
1:58:00 — Max’s New Book: Red Heart
2:16:08 — Outro

Show Notes
Max’s book Red Heart — https://www.amazon.com/Red-Heart-Max-Harms/dp/108822119X
Learn more about CAST: Corrigibility as Singular Target — https://www.lesswrong.com/s/KfCjeconYRdFbMxsy/p/NQK8KHSrZRF5erTba
Max’s Twitter — https://x.com/raelifin
Jeremy’s Twitter — https://x.com/jeremygillen1

Holly Elmore leads protests against frontier AI labs, and that work has strained some of her closest relationships in the AI-safety community.

She says AI safety insiders care more about their reputation in tech circles than actually lowering AI x-risk. This is our full conversation from my “If Anyone Builds It, Everyone Dies” unofficial launch party livestream on Sept 16, 2025.

Timestamps
0:00 Intro
1:06 Holly’s Background and The Current Activities of PauseAI US
4:41 The Circular Firing Squad Problem of AI Safety
7:23 Why the AI Safety Community Resists Public Advocacy
11:37 Breaking with Former Allies at AI Labs
13:00 LessWrong’s reaction to Eliezer’s public turn

Show Notes
PauseAI US — https://pauseai-us.org
International PauseAI — https://pauseai.info
Holly’s Twitter — https://x.com/ilex_ulmus
Holly’s Substack: https://substack.com/@hollyelmore
Holly’s post covering how AI isn’t another “technology”: https://hollyelmore.substack.com/p/the-technology-bucket-error

Related Episodes
Holly and I dive into the rationalist community’s failure to rally behind a cause: https://lironshapira.substack.com/p/lesswrong-circular-firing-squad
The full IABED livestream: https://lironshapira.substack.com/p/if-anyone-builds-it-everyone-dies-party

Sparks fly in the finale of my series with ex-MIRI researcher Tsvi Benson-Tilsen as we debate his AGI timelines.

Tsvi is a champion of using germline engineering to create smarter humans who can solve AI alignment.

I support the approach, even though I’m skeptical it’ll gain much traction before AGI arrives.

Timestamps
0:00 Debate Preview
0:57 Tsvi’s AGI Timeline Prediction
3:03 The Least Impressive Task AI Cannot Do In 2 years
6:13 Proposed Task: Solve Cantor’s Theorem From Scratch
8:20 AI Has Limitations Related to Sample Complexity
11:41 We Need Clear Goalposts for Better AGI Predictions
13:19 Counterargument: LLMs May Not Be a Path to AGI
16:01 Is Tsvi Setting a High Bar for Progress Towards AGI?
19:17 AI Models Are Missing A Spark of Creativity
28:17 Liron’s “Black Box” AGI Test
32:09 Are We Going to Enter an AI Winter?
35:09 Who Is Being Overconfident?
42:11 If AI Makes Progress on Benchmarks, Would Tsvi Shorten His Timeline?
50:34 Recap & Tsvi’s Research

Show Notes
Learn more about Tsvi’s organization, the Berkeley Genomics Project — https://berkeleygenomics.org

Today I’m sharing my AI doom interview on Donal O’Doherty’s podcast.

I lay out the case for having a 50% p(doom). Then Donal plays devil’s advocate and tees up every major objection the accelerationists throw at doomers. See if the anti-doom arguments hold up, or if the AI boosters are just serving sophisticated cope.

Timestamps
0:00 — Introduction & Liron’s Background
1:29 — Liron’s Worldview: 50% Chance of AI Annihilation
4:03 — Rationalists, Effective Altruists, & AI Developers
5:49 — Major Sources of AI Risk
8:25 — The Alignment Problem
10:08 — AGI Timelines
16:37 — Will We Face an Intelligence Explosion?
29:29 — Debunking AI Doom Counterarguments
1:03:16 — Regulation, Policy, and Surviving The Future With AI

Show Notes
If you liked this episode, subscribe to the Collective Wisdom Podcast for more deeply researched AI interviews: https://www.youtube.com/@DonalODoherty

Transcript

Introduction & Liron’s Background

Donal O’Doherty 00:00:00
Today I’m speaking with Liron Shapira. Liron is an investor, he’s an entrepreneur, he’s a rationalist, and he also has a popular podcast called Doom Debates, where he debates some of the greatest minds from different fields on the potential of AI risk.

Liron considers himself a doomer, which means he worries that artificial intelligence, if it gets to superintelligence level, could threaten the integrity of the world and the human species.

Donal 00:00:24
Enjoy my conversation with Liron Shapira.

Donal 00:00:30
Liron, welcome. So let’s just begin. Will you tell us a little bit about yourself and your background, please? I will have introduced you, but I just want everyone to know a bit about you.

Liron Shapira 00:00:39
Hey, I’m Liron Shapira. I’m the host of Doom Debates, which is a YouTube show and podcast where I bring in luminaries on all sides of the AI doom argument.

Liron 00:00:49
People who think we are doomed, people who think we’re not doomed, and we hash it out. We try to figure out whether we’re doomed. I myself am a longtime AI doomer. I started reading Yudkowsky in 2007, so it’s been 18 years for me being worried about doom from artificial intelligence.

My background is I’m a computer science bachelor’s from UC Berkeley.

Liron 00:01:10
I’ve worked as a software engineer and an entrepreneur. I’ve done a Y Combinator startup, so I love tech. I’m deep in tech. I’m deep in computer science, and I’m deep into believing the AI doom argument.

I don’t see how we’re going to survive building superintelligent AI. And so I’m happy to talk to anybody who will listen. So thank you for having me on, Donal.

Donal 00:01:27
It’s an absolute pleasure.

Liron’s Worldview: 50% Chance of AI Annihilation

Donal 00:01:29
Okay, so a lot of people where I come from won’t be familiar with doomism or what a doomer is. So will you just talk through, and I’m very interested in this for personal reasons as well, your epistemic and philosophical inspirations here. How did you reach these conclusions?

Liron 00:01:45
So I often call myself a Yudkowskian, in reference to Eliezer Yudkowsky, because I agree with 95% of what he writes, the Less Wrong corpus. I don’t expect everybody to get up to speed with it because it really takes a thousand hours to absorb it all.

I don’t think that it’s essential to spend those thousand hours.

Liron 00:02:02
I think that it is something that you can get in a soundbite, not a soundbite, but in a one-hour long interview or whatever. So yeah, I think you mentioned epistemic roots or whatever, right? So I am a Bayesian, meaning I think you can put probabilities on things the way prediction markets are doing.

Liron 00:02:16
You know, they ask, oh, what’s the chance that this war is going to end? Or this war is going to start, right? What’s the chance that this is going to happen in this sports game? And some people will tell you, you can’t reason like that.

Whereas prediction markets are like, well, the market says there’s a 70% chance, and what do you know? It happens 70% of the time. So is that what you’re getting at when you talk about my epistemics?

Donal 00:02:35
Yeah, exactly. Yeah. And I guess I’m very curious as well about, so what Yudkowsky does is he conducts thought experiments. Because obviously some things can’t be tested, we know they might be true, but they can’t be tested in experiments.

Donal 00:02:49
So I’m just curious about the role of philosophical thought experiments or maybe trans-science approaches, in terms of testing questions that we can’t actually conduct experiments on.

Liron 00:03:00
Oh, got it. Yeah. I mean this idea of what can and can’t be tested. I mean, tests are nice, but they’re not the only way to do science and to do productive reasoning.

Liron 00:03:10
There are times when you just have to do your best without a perfect test. You know, a recent example was the James Webb Space Telescope, right? It’s the successor to the Hubble Space Telescope. It worked really well, but it had to get into this really difficult orbit.

This very interesting Lagrange point, I think in the solar system, they had to get it there and they had to unfold it.

Liron 00:03:30
It was this really compact design and insanely complicated thing, and it had to all work perfectly on the first try. So you know, you can test it on earth, but earth isn’t the same thing as space.

So my point is just that as a human, as a fallible human with a limited brain, it turns out there’s things you can do with your brain that still help you know the truth about the future, even when you can’t do a perfect clone of an experiment of the future.

Liron 00:03:52
And so to connect that to the AI discussion, I think we know enough to be extremely worried about superintelligent AI. Even though there is not in fact a superintelligent AI in front of us right now.

Donal 00:04:03
Interesting.

Rationalists, Effective Altruists, & AI Developers

Donal 00:04:03
And just before we proceed, will you talk a little bit about the EA community and the rationalist community as well? Because a lot of people won’t have heard of those terms where I come from.

Liron 00:04:13
Yes. So I did mention Eliezer Yudkowsky, who’s kind of the godfather of thinking about AI safety. He was also the father of the modern rationality community. It started around 2007 when he was online blogging at a site called Overcoming Bias, and then he was blogging on his own site called Less Wrong.

And he wrote The Less Wrong Sequences and a community formed around him that also included previous rationalists, like Carl Feynman, the son of Richard Feynman.

Liron 00:04:37
So this community kind of gathered together. It had its origins in Usenet and all that, and it’s been going now for 18 years. There’s also the Center for Applied Rationality that’s part of the community.

There’s also the effective altruism community that you’ve heard of. You know, they try to optimize charity and that’s kind of an offshoot of the rationality community.

Liron 00:04:53
And now the modern AI community, funny enough, is pretty closely tied into the rationality community from my perspective. I’ve just been interested to use my brain rationally. What is the art of rationality? Right? We throw this term around, people think of Mr. Spock from Star Trek, hyper-rational.

Oh captain, you know, logic says you must do this.

Liron 00:05:12
People think of rationality as being kind of weird and nerdy, but we take a broader view of rationality where it’s like, listen, you have this tool, you have this brain in your head. You’re trying to use the brain in your head to get results.

The James Webb Space Telescope, that is an amazing success story where a lot of people use their brains very effectively, even better than Spock in Star Trek.

Liron 00:05:30
That took moxie, right? That took navigating bureaucracy, thinking about contingencies. It wasn’t a purely logical matter, but whatever it was, it was a bunch of people using their brains, squeezing the juice out of their brains to get results.

Basically, that’s kind of broadly construed what we rationalists are trying to do.

Donal 00:05:49
Okay. Fascinating.

Major Sources of AI Risk

Donal 00:05:49
So let’s just quickly lay out the major sources of AI risk. So you could have misuse, so things like bioterror, you could have arms race dynamics. You could also have organizational failures, and then you have rogue AI.

So are you principally concerned about rogue AI? Are you also concerned about the other ones on the potential path to having rogue AI?

Liron 00:06:11
My personal biggest concern is rogue AI. The way I see it, you know, different people think different parts of the problem are bigger. The way I see it, this brain in our head, it’s very impressive. It’s a two-pound piece of meat, right? Piece of fatty cells, or you know, neuron cells.

Liron 00:06:27
It’s pretty amazing, but it’s going to get surpassed, you know, the same way that manmade airplanes have surpassed birds. You know? Yeah. A bird’s wing, it’s a marvelous thing. Okay, great. But if you want to fly at Mach 5 or whatever, the bird is just not even in the running to do that. Right?

And the earth, the atmosphere of the earth allows for flying at 5 or 10 times the speed of sound.

Liron 00:06:45
You know, this 5,000 mile thick atmosphere that we have, it could potentially support supersonic flight. A bird can’t do it. A human engineer sitting in a room with a pencil can design something that can fly at Mach 5 and then like manufacture that.

So the point is, the human brain has superpowers. The human brain, this lump of flesh, this meat, is way more powerful than what a bird can do.

Liron 00:07:02
But the human brain is going to get surpassed. And so I think that once we’re surpassed, those other problems that you mentioned become less relevant because we just don’t have power anymore.

There’s a new thing on the block that has power and we’re not it. Now before we’re surpassed, yeah, I mean, I guess there’s a couple years maybe before we’re surpassed.

Liron 00:07:18
During that time, I think that the other risks matter. Like, you know, c

Tsvi Benson-Tilsen spent seven years tackling the alignment problem at the Machine Intelligence Research Institute (MIRI). Now he delivers a sobering verdict: humanity has made “basically 0%” progress towards solving it. Tsvi unpacks foundational MIRI research insights like timeless decision theory and corrigibility, which expose just how little humanity actually knows about controlling superintelligence. These theoretical alignment concepts help us peer into the future, revealing the non-obvious, structural laws of “intellidynamics” that will ultimately determine our fate. Time to learn some of MIRI’s greatest hits.P.S. I also have a separate interview with Tsvi about his research into human augmentation: Watch here!Timestamps 0:00 — Episode Highlights 0:49 — Humanity Has Made 0% Progress on AI Alignment 1:56 — MIRI’s Greatest Hits: Reflective Probability Theory, Logical Uncertainty, Reflective Stability 6:56 — Why Superintelligence is So Hard to Align: Self-Modification 8:54 — AI Will Become a Utility Maximizer (Reflective Stability) 12:26 — The Effect of an “Ontological Crisis” on AI 14:41 — Why Modern AI Will Not Be ‘Aligned By Default’ 18:49 — Debate: Have LLMs Solved the “Ontological Crisis” Problem? 25:56 — MIRI Alignment Greatest Hit: Timeless Decision Theory 35:17 — MIRI Alignment Greatest Hit: Corrigibility 37:53 — No Known Solution for Corrigible and Reflectively Stable Superintelligence39:58 — RecapShow NotesStay tuned for part 3 of my interview with Tsvi where we debate AGI timelines! Learn more about Tsvi’s organization, the Berkeley Genomics Project: https://berkeleygenomics.orgWatch part 1 of my interview with Tsvi: TranscriptEpisode HighlightsTsvi Benson-Tilsen 00:00:00If humans really f*cked up, when we try to reach into the AI and correct it, the AI does not want humans to modify the core aspects of what it values.Liron Shapira 00:00:09This concept is very deep, very important. It’s almost MIRI in a nutshell. 
I feel like MIRI’s whole research program is noticing: hey, when we run the AI, we’re probably going to get a bunch of generations of thrashing. But that’s probably only after we’re all dead and things didn’t happen the way we wanted. I feel like that is what MIRI is trying to tell the world. Meanwhile, the world is like, “la la la, LLMs, reinforcement learning—it’s all good, it’s working great. Alignment by default.”Tsvi 00:00:34Yeah, that’s certainly how I view it.Humanity Has Made 0% Progress on AI Alignment Liron Shapira 00:00:46All right. I want to move on to talk about your MIRI research. I have a lot of respect for MIRI. A lot of viewers of the show appreciate MIRI’s contributions. I think it has made real major contributions in my opinion—most are on the side of showing how hard the alignment problem is, which is a great contribution. I think it worked to show that. My question for you is: having been at MIRI for seven and a half years, how are we doing on theories of AI alignment?Tsvi Benson-Tilsen 00:01:10I can’t speak with 100% authority because I’m not necessarily up to date on everything and there are lots of researchers and lots of controversy. But from my perspective, we are basically at 0%—at zero percent done figuring it out. Which is somewhat grim. Basically, there’s a bunch of fundamental challenges, and we don’t know how to grapple with these challenges. Furthermore, it’s sort of sociologically difficult to even put our attention towards grappling with those challenges, because they’re weirder problems—more pre-paradigmatic. It’s harder to coordinate multiple people to work on the same thing productively.It’s also harder to get funding for super blue-sky research. 
And the problems themselves are just slippery.MIRI Alignment Greatest Hits: Reflective Probability Theory, Logical Uncertainty, Reflective Stability Liron 00:01:55Okay, well, you were there for seven years, so how did you try to get us past zero?Tsvi 00:02:00Well, I would sort of vaguely (or coarsely) break up my time working at MIRI into two chunks. The first chunk is research programs that were pre-existing when I started: reflective probability theory and reflective decision theory. Basically, we were trying to understand the mathematical foundations of a mind that is reflecting on itself—thinking about itself and potentially modifying itself, changing itself. We wanted to think about a mind doing that, and then try to get some sort of fulcrum for understanding anything that’s stable about this mind.Something we could say about what this mind is doing and how it makes decisions—like how it decides how to affect the world—and have our description of the mind be stable even as the mind is changing in potentially radical ways.Liron 00:02:46Great. Okay. Let me try to translate some of that for the viewers here. So, MIRI has been the premier organization studying intelligence dynamics, and Eliezer Yudkowsky—especially—people on social media like to dunk on him and say he has no qualifications, he’s not even an AI expert. In my opinion, he’s actually good at AI, but yeah, sure. He’s not a top world expert at AI, sure. But I believe that Eliezer Yudkowsky is in fact a top world expert in the subject of intelligence dynamics. Is this reasonable so far, or do you want to disagree?Tsvi 00:03:15I think that’s fair so far.Liron 00:03:16Okay. And I think his research organization, MIRI, has done the only sustained program to even study intelligence dynamics—to ask the question, “Hey, let’s say there are arbitrarily smart agents. What should we expect them to do? 
What kind of principles do they operate on, just by virtue of being really intelligent?” Fair so far.

Now, you mentioned a couple things. You mentioned reflective probability. From what I recall, it’s the idea that, well, we know probability theory is very useful and we know utility maximization is useful. But it gets tricky because sometimes you have beliefs that are provably true or false, like beliefs about math, right? For example, beliefs about the millionth digit of π. I mean, how can you even put a probability on the millionth digit of π?

The probability of any particular digit is either 100% or 0%, ’cause there’s only one definite digit. You could even prove it in principle. And yet, in real life you don’t know the millionth digit of π yet (you haven’t done the calculation), and so you could actually put a probability on it, and then you kind of get into a mess, ’cause things that aren’t supposed to have probabilities can still have probabilities. How is that?

Tsvi 00:04:16
That seems right.

Liron 00:04:18
I think what I described might be (oh, I forgot what it’s called) like “deductive probability” or something. Like, how do you...

Tsvi 00:04:22
(interjecting) Uncertainty.

Liron 00:04:23
Logical uncertainty. So is reflective probability something else?

Tsvi 00:04:26
Yeah. If we want to get technical: logical uncertainty is this. Probability theory usually deals with some fact that I’m fundamentally unsure about (like I’m going to roll some dice; I don’t know what number will come up, but I still want to think about what’s likely or unlikely to happen). Usually probability theory assumes there’s some fundamental randomness or unknown in the universe.

But then there’s this further question: you might actually already know enough to determine the answer to your question, at least in principle. For example, what’s the billionth digit of π: is the billionth digit even or odd? Well, I know a definition of π that determines the answer. Given the definition of π, you can compute out the digits, and eventually you’d get to the billionth one and you’d know if it’s even. But sitting here as a human, who doesn’t have a Python interpreter in his head, I can’t actually figure it out right now. I’m uncertain about this thing, even though I already know enough (in principle, logically speaking) to determine the answer. So that’s logical uncertainty: I’m uncertain about a logical fact.

Tsvi 00:05:35
Reflective probability is sort of a sharpening or a subset of that. Let’s say I’m asking, “What am I going to do tomorrow? Is my reasoning system flawed in such a way that I should make a correction to my own reasoning system?” If you want to think about that, you’re asking about a very, very complex object. I’m asking about myself (or my future self). And because I’m asking about such a complex object, I cannot compute exactly what the answer will be. I can’t just sit here and imagine every single future pathway I might take and then choose the best one or something; it’s computationally impossible. So it’s fundamentally required that you deal with a lot of logical uncertainty if you’re an agent in the world trying to reason about yourself.

Liron 00:06:24
Yeah, that makes sense. Technically, you have the computation, or it’s well-defined what you’re going to do, but realistically you don’t really know what you’re going to do yet. It’s going to take you time to figure it out, but you have to guess what you’re gonna do. So that kind of has the flavor of guessing the billionth digit of π. And it sounds like, sure, we all face that problem every day, but it’s not... whatever.

Liron 00:06:43
When you’re talking about superintelligence, right, these super-intelligent dudes are probably going to do this perfectly and rigorously. Right? Is that why it’s an interesting problem?

Why Superintelligence is So Hard to Align: Self-Modification

Tsvi 00:06:51
That’s not necessarily why it’s interesting to me. I guess the reason it’s interesting to me is something like: there’s a sort of chaos, or like total incomprehensibility, that I perceive if I try to think about what a superintelligence is going to be like. It’s like we’re talking about something that is basically, by definition, more complex than I am. It understands more, it has all these rich concepts that I don’t even understand, and it has potentially forces in its mind that I also don’t understand.

In general it’s just this question of: how do you get any
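
Editor's illustration (not from the episode): the logical-uncertainty point in the transcript above can be made concrete with a minimal Python sketch. The helper name `pi_digits` is hypothetical, the use of Machin's formula with integer arithmetic is an assumption chosen for simplicity, and the 20th digit stands in for the billionth so the script runs instantly. Before the computation, a reasoner with no other information might put probability 1/2 on "the digit is even"; after it, the probability collapses to 0 or 1.

```python
def pi_digits(n: int) -> str:
    """Return the first n digits of pi after the decimal point (hypothetical helper)."""
    guard = 10                       # extra digits to absorb truncation error
    scale = 10 ** (n + guard)

    def arctan_inv(x: int) -> int:
        # Integer approximation of arctan(1/x) * scale via its Taylor series.
        total = term = scale // x
        x2, k, sign = x * x, 3, -1
        while term:
            term //= x2
            total += sign * (term // k)
            k += 2
            sign = -sign
        return total

    # Machin's formula: pi = 4 * (4*arctan(1/5) - arctan(1/239))
    pi_scaled = 4 * (4 * arctan_inv(5) - arctan_inv(239))
    return str(pi_scaled)[1:n + 1]   # drop the leading "3"


def digit_is_even(n: int) -> bool:
    """True if the n-th digit of pi after the decimal point is even."""
    return int(pi_digits(n)[-1]) % 2 == 0


# Logical uncertainty: the answer is already determined, but not yet computed.
prior_even = 5 / 10                  # five of the ten possible digits are even
posterior_even = 1.0 if digit_is_even(20) else 0.0
print(f"prior={prior_even}, posterior={posterior_even}")
```

The prior here is a simple uniform guess over digits, not a claim about how a formal theory of logical uncertainty would assign it.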

I’m excited to share my recent AI doom interview with Eben Pagan, better known to many by his pen name David DeAngelo!

For an entire generation of men, ‘David DeAngelo’ was the authority on dating, and his work transformed my approach to courtship back in the day. Now the roles reverse, as I teach Eben about a very different game, one where the survival of our entire species is at stake.

In this interview, we cover the expert consensus on AI extinction, my dead-simple two-question framework for understanding the threat, why there’s no “off switch” for superintelligence, and why we desperately need international coordination before it’s too late.

Timestamps
0:00 - Episode Preview
1:05 - How Liron Got Doom-Pilled
2:55 - Why There’s a 50% Chance of Doom by 2050
4:52 - What AI CEOs Actually Believe
8:14 - What “Doom” Actually Means
10:02 - The Next Species is Coming
12:41 - The Baby Dragon Fallacy
14:41 - The 2-Question Framework for AI Extinction
18:38 - AI Doesn’t Need to Hate You to Kill You
21:05 - “Computronium”: The End Game
29:51 - 3 Reasons There’s No Superintelligence “Off Switch”
36:22 - Answering “What Is Intelligence?”
43:24 - We Need Global Coordination

Show Notes
Eben has become a world-class business trainer and someone who follows the AI discourse closely. I highly recommend subscribing to his podcast for excellent interviews & actionable AI tips: @METAMIND_AI

Former Machine Intelligence Research Institute (MIRI) researcher Tsvi Benson-Tilsen is championing an audacious path to prevent AI doom: engineering smarter humans to tackle AI alignment.

I consider this one of the few genuinely viable alignment solutions, and Tsvi is at the forefront of the effort. After seven years at MIRI, he co-founded the Berkeley Genomics Project to advance the human germline engineering approach.

In this episode, Tsvi lays out how to lower P(doom), arguing we must stop AGI development and stigmatize it like gain-of-function virus research. We cover his AGI timelines, the mechanics of genomic intelligence enhancement, and whether super-babies can arrive fast enough to save us.

I’ll be releasing my full interview with Tsvi in 3 parts. Stay tuned for part 2 next week!

Timestamps
0:00 Episode Preview & Introducing Tsvi Benson-Tilsen
1:56 What’s Your P(Doom)™
4:18 Tsvi’s AGI Timeline Prediction
6:16 What’s Missing from Current AI Systems
10:05 The State of AI Alignment Research: 0% Progress
11:29 The Case for PauseAI
15:16 Debate on Shaming AGI Developers
25:37 Why Human Germline Engineering
31:37 Enhancing Intelligence: Chromosome Vs. Sperm Vs. Egg Selection
37:58 Pushing the Limits: Head Size, Height, Etc.
40:05 What About Human Cloning?
43:24 The End-to-End Plan for Germline Engineering
45:45 Will Germline Engineering Be Fast Enough?
48:28 Outro: How to Support Tsvi’s Work

Show Notes
Tsvi’s organization, the Berkeley Genomics Project: https://berkeleygenomics.org

If you’re interested in connecting with Tsvi about germline engineering, you can reach out to him at BerkeleyGenomicsProject@gmail.com.

Today I'm sharing my interview on Robert Wright's Nonzero Podcast where we unpack Eliezer Yudkowsky's AI doom arguments from his bestselling book, "If Anyone Builds It, Everyone Dies."

Bob is an exceptionally thoughtful interviewer who asks sharp questions and pushes me to defend the Yudkowskian position, leading to a rich exploration of the AI doom perspective. I highly recommend getting a premium subscription to his podcast.

0:00 Episode Preview
2:43 Being a "Stochastic Parrot" for Eliezer Yudkowsky
5:38 Yudkowsky's Book: "If Anyone Builds It, Everyone Dies"
9:38 AI Has NEVER Been Aligned
12:46 Liron Explains "Intellidynamics"
15:05 Natural Selection Leads to Maladaptive Behaviors: AI Misalignment Foreshadowing
29:02 We Summon AI Without Knowing How to Tame It
32:03 The "First Try" Problem of AI Alignment
37:00 Headroom Above Human Capability
40:37 The PauseAI Movement: The Silent Majority
47:35 Going into Overtime

Today I’m taking a rare break from AI doom to cover the dumbest kind of doom humanity has ever created for itself: climate change. We’re talking about a problem that costs less than $2 billion per year to solve. For context, that’s what the US spent on COVID relief every 7 hours during the pandemic. Bill Gates could literally solve this himself.

My guest Andrew Song runs Make Sunsets, which launches weather balloons filled with sulfur dioxide (SO₂) into the stratosphere to reflect sunlight and cool the planet. It’s the same mechanism volcanoes use: Mount Pinatubo cooled Earth by 0.5°C for a year in 1991. The physics is solid, the cost is trivial, and the coordination problem is nonexistent.

So why aren’t we doing it? Because people are squeamish about “playing God” with the atmosphere, even while we’re building superintelligent AI. Because environmentalists would rather scold you into turning off your lights than support a solution that actually works.

This conversation changed how I think about climate change. I went from viewing it as this intractable coordination problem to realizing it’s basically already solved; we’re just LARPing that it’s hard! 🙈 If you care about orders of magnitude, this episode will blow your mind. And if you feel guilty about your carbon footprint: you can offset an entire year of typical American energy usage for about 15 cents. Yes, cents.

Timestamps
* 00:00:00 - Introducing Andrew Song, Cofounder of Make Sunsets
* 00:03:08 - Why the company is called “Make Sunsets”
* 00:06:16 - What’s Your P(Doom)™ From Climate Change
* 00:10:24 - Explaining geoengineering and solar radiation management
* 00:16:01 - The SO₂ dial we can turn
* 00:22:00 - Where to get SO₂ (gas supply stores, sourcing from oil)
* 00:28:44 - Cost calculation: Just $1-2 billion per year
* 00:34:15 - “If everyone paid $3 per year”
* 00:42:38 - Counterarguments: moral hazard, termination shock
* 00:44:21 - Being an energy hog is totally fine
* 00:52:16 - What motivated Andrew (his kids, Luke Iseman)
* 00:59:09 - “The stupidest problem humanity has created”
* 01:11:26 - Offsetting CO₂ from OpenAI’s Stargate
* 01:13:38 - Playing God is good

Show Notes

Make Sunsets
* Website: https://makesunsets.com
* Tax-deductible donations (US): https://givebutter.com/makesunsets

People Mentioned
* Casey Handmer: https://caseyhandmer.wordpress.com/
* Emmett Shear: https://twitter.com/eshear
* Palmer Luckey: https://twitter.com/PalmerLuckey

Resources Referenced
* Book: Termination Shock by Neal Stephenson
* Book: The Rational Optimist by Matt Ridley
* Book: Enlightenment Now by Steven Pinker
* Harvard SCoPEx project (the Bill Gates-funded project that got blocked)
* Climeworks (direct air capture company): https://climeworks.com

Data/Monitoring
* NOAA (National Oceanic and Atmospheric Administration): https://www.noaa.gov
* ESA Sentinel-5P TROPOMI satellite data

I’ve been puzzled by David Deutsch’s AI claims for years. Today I finally had the chance to hash it out: Brett Hall, one of the foremost educators of David Deutsch’s ideas around epistemology & science, was brave enough to debate me!

Brett has been immersed in Deutsch’s philosophy since 1997 and teaches it on his Theory of Knowledge podcast, which has been praised by tech luminary Naval Ravikant. He agrees with Deutsch on 99.99% of issues, especially the dismissal of AI as an existential threat.

In this debate, I stress-test the Deutschian worldview, and along the way we unpack our diverging views on epistemology, the orthogonality thesis, and pessimism vs optimism.

Timestamps
0:00 - Debate preview & introducing Brett Hall
4:24 - Brett’s opening statement on techno-optimism
13:44 - What’s Your P(Doom)?™
15:43 - We debate the merits of Bayesian probabilities
20:13 - Would Brett sign the AI risk statement?
24:44 - Liron declares his “damn good reason” for AI oversight
35:54 - Debate milestone: We identify our crux of disagreement!
37:29 - Prediction vs prophecy
44:28 - The David Deutsch CAPTCHA challenge
1:00:41 - What makes humans special?
1:15:16 - Reacting to David Deutsch’s recent statements on AGI
1:24:04 - Debating what makes humans special
1:40:25 - Brett reacts to Roger Penrose’s AI claims
1:48:13 - Debating the orthogonality thesis
1:56:34 - The powerful AI data center hypothetical
2:03:10 - “It is a dumb tool, easily thwarted”
2:12:18 - Clash of worldviews: goal-driven vs problem-solving
2:25:05 - Ideological Turing test: We summarize each other’s positions
2:30:44 - Are doomers just pessimists?

Show Notes
Brett’s website: https://www.bretthall.org
Brett’s Twitter: https://x.com/TokTeacher

The Deutsch Files by Brett Hall and Naval Ravikant
* https://nav.al/deutsch-files-i
* https://nav.al/deutsch-files-ii
* https://nav.al/deutsch-files-iii
* https://nav.al/deutsch-files-iv

Books:
* The Fabric of Reality by David Deutsch
* The Beginning of Infinity by David Deutsch
* Superintelligence by Nick Bostrom
* If Anyone Builds It, Everyone Dies by Eliezer Yudkowsky

We took Eliezer Yudkowsky and Nate Soares’s new book, If Anyone Builds It, Everyone Dies, to the streets to see what regular people think.

Do people think that artificial intelligence is a serious existential risk? Are they open to considering the argument before it’s too late? Are they hostile to the idea? Are they totally uninterested?

Watch this episode to see the full spectrum of reactions from a representative slice of America!

Welcome to the Doom Debates + Wes Roth + Dylan Curious crossover episode!

Wes & Dylan host a popular YouTube AI news show that’s better than mainstream media. They interview thought leaders like Prof. Nick Bostrom, Dr. Mike Israetel, and now, yours truly!

This episode is Part 2, where Wes & Dylan come on Doom Debates to break down the latest AI news.

In Part 1, I went on Wes & Dylan’s channel to talk about my AI doom worries.

The only reasonable move is to subscribe to both channels and watch both parts!

Timestamps
00:00 - Cold open
00:45 - Introducing Wes & Dylan & hearing their AI YouTuber origin stories
05:38 - What’s Your P(Doom)?™
10:30 - Living with high P(doom)
12:10 - AI News Roundup: If Anyone Builds It, Everyone Dies Reactions
17:02 - AI Redlines at the UN & risk of AGI authoritarianism
26:56 - Robot ‘violence test’
29:20 - Anthropic gets honest about job loss impact
32:43 - AGI hunger strikes, the rationale for Anthropic protests
41:24 - Liron explains his proposal for a safer future, treaty & enforcement debate
49:23 - US Government officials ignore scientists and use “catastrophists” pejorative
55:59 - Experts’ p(doom) predictions
59:41 - Wes gives his take on AI safety warnings
1:02:04 - Wrap-up, Subscribe to Wes Roth and Dylan Curious

Show Notes
Wes & Dylan’s channel: https://www.youtube.com/wesroth
If Anyone Builds It, Everyone Dies by Eliezer Yudkowsky and Nate Soares: https://ifanyonebuildsit.com

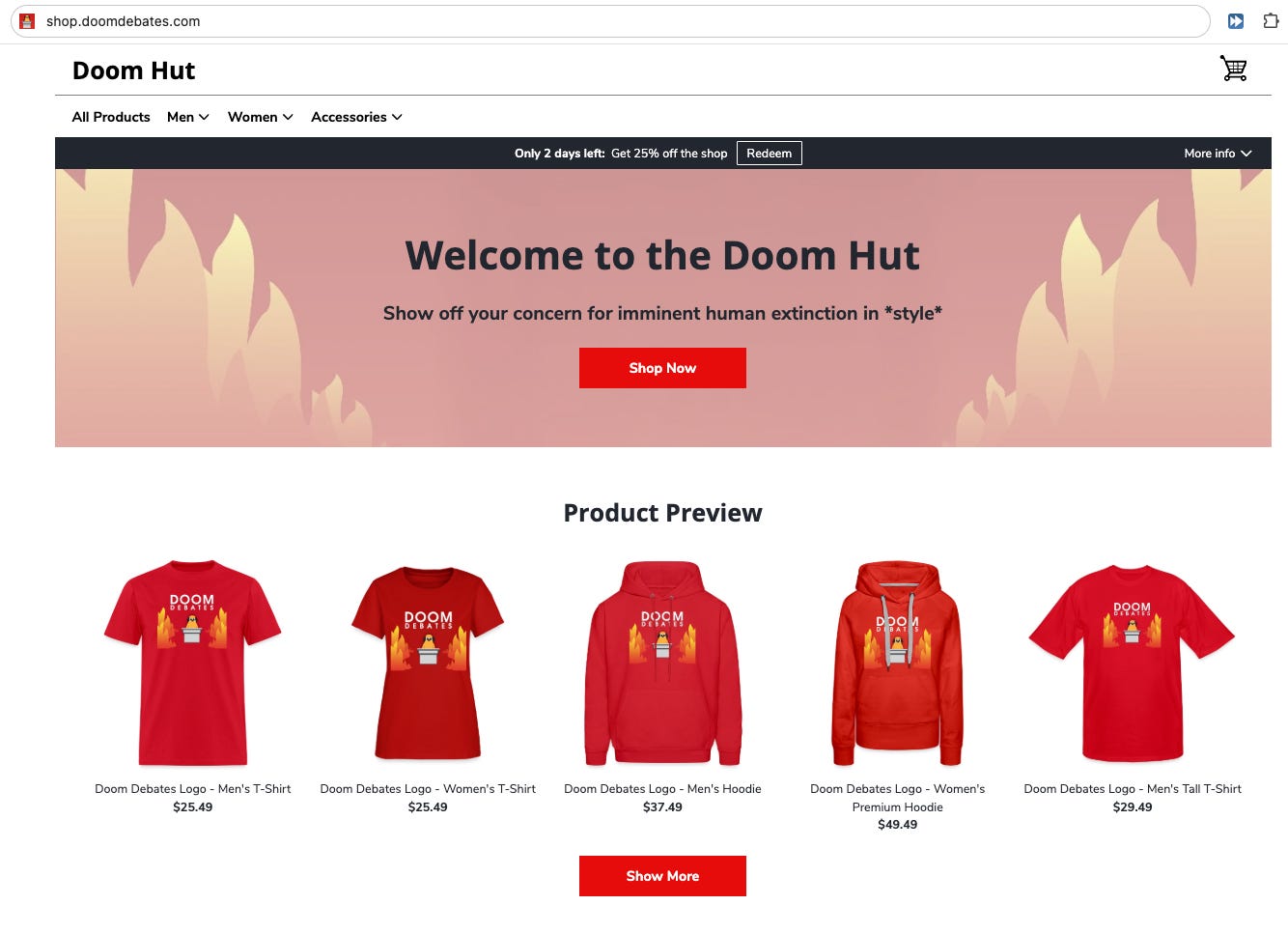

I’m excited to announce the launch of Doom Hut, the official Doom Debates merch store!

This isn’t just another merch drop. It’s a way to spread an urgent message that humanity faces an imminent risk from superintelligent AI, and we need to build common knowledge about it now.

What We’re Offering

The Doom Hut is the premier source of “high P(doom) fashion.” We’ve got t-shirts in men’s and women’s styles, tall tees, tote bags, and hats that keep both the sun and the doom out of your eyes.

Our signature items are P(doom) pins. Whether your probability of doom is less than 10%, greater than 25%, or anywhere in between, you can represent your assessment with pride.

Our shirts feature “Doom Debates” on the front and “What’s your P(doom)?” on the back. It’s a conversation starter that invites questions rather than putting people on the defensive.

Why This Matters

This is crunch time. There’s no more time to beat around the bush or pretend everything’s okay.

When you wear this merch, you’re not just making a fashion statement. You’re signaling that P(doom) is uncomfortably high and that we need to stop building dangerous AI before we know how to control it.

People will wonder what “Doom Debates” means. They’ll see that you’re bold enough to acknowledge the world faces real threats. Maybe they’ll follow your example. Maybe they’ll start asking questions and learning more.

Supporting the Mission

The merch store isn’t really about making money for us (we make about $2 per item sold). The show’s production and marketing costs are funded by donations from viewers like you. Visit doomdebates.com/donate to learn more.

Donations to Doom Debates are fully tax-deductible through our fiscal sponsor, Manifund.org. You can also support us by becoming a premium subscriber to DoomDebates.com, which helps boost our Substack rankings and visibility.

Join the Community

Join our Discord community here! It’s a vibrant space where people debate, share memes, discuss new episodes, and talk about where they “get off the doom train.”

Moving Forward Together

The outpouring of support since we started accepting donations has been incredible. You get what we’re trying to do here: raising awareness and helping the average person understand that AI risk is an imminent threat to them and their loved ones.

This is real. This is urgent. And together, we’re moving the Overton window. Thanks for your support.

Eliezer Yudkowsky can warn humankind that If Anyone Builds It, Everyone Dies and get on the New York Times bestseller list, but he won’t get upvoted to the top of LessWrong.

According to the leaders of LessWrong, that’s intentional. The rationalist community thinks aggregating community support for important claims is “political fighting”.

Unfortunately, the idea that some other community will strongly rally behind Eliezer Yudkowsky’s message while LessWrong “stays out of the fray” and purposely prevents mutual knowledge of support from being displayed is unrealistic.

Our refusal to aggregate the rationalist community’s beliefs into signals and actions is why we live in a world where rationalists with double-digit P(Doom)s join AI-race companies instead of AI-pause movements.

We let our community become a circular firing squad. What did we expect?

Timestamps
00:00:00 - Cold Open
00:00:32 - Introducing Holly Elmore, Exec. Director of PauseAI US
00:03:12 - “If Anyone Builds It, Everyone Dies”
00:10:07 - What’s Your P(Doom)™
00:12:55 - Liron’s Review of IABIED
00:15:29 - Encouraging Early Joiners to a Movement
00:26:30 - MIRI’s Communication Issues
00:33:52 - Government Officials’ Reviews of IABIED
00:40:33 - Emmett Shear’s Review of IABIED
00:42:47 - Michael Nielsen’s Review of IABIED
00:45:35 - New York Times Review of IABIED
00:49:56 - Will MacAskill’s Review of IABIED
01:11:49 - Clara Collier’s Review of IABIED
01:22:17 - Vox Article Review
01:28:08 - The Circular Firing Squad
01:37:02 - Why Our Kind Can’t Cooperate
01:49:56 - LessWrong’s Lukewarm Show of Support
02:02:06 - The “Missing Mood” of Support
02:16:13 - Liron’s “Statement of Support for IABIED”
02:18:49 - LessWrong Community’s Reactions to the Statement
02:29:47 - Liron & Holly’s Hopes for the Community
02:39:01 - Call to Action

SHOW NOTES
PauseAI US: https://pauseai-us.org
PauseAI US Upcoming Events: https://pauseai-us.org/events
International PauseAI: https://pauseai.info
Holly’s Twitter: https://x.com/ilex_ulmus

Referenced Essays & Posts
* Liron’s Eliezer Yudkowsky interview post on LessWrong: https://www.lesswrong.com/posts/kiNbFKcKoNQKdgTp8/interview-with-eliezer-yudkowsky-on-rationality-and
* Liron’s “Statement of Support for If Anyone Builds It, Everyone Dies”: https://www.lesswrong.com/posts/aPi4HYA9ZtHKo6h8N/statement-of-support-for-if-anyone-builds-it-everyone-dies
* “Why Our Kind Can’t Cooperate” by Eliezer Yudkowsky (2009): https://www.lesswrong.com/posts/7FzD7pNm9X68Gp5ZC/why-our-kind-can-t-cooperate
* “Something to Protect” by Eliezer Yudkowsky: https://www.lesswrong.com/posts/SGR4GxFK7KmW7ckCB/something-to-protect
* Center for AI Safety Statement on AI Risk: https://safe.ai/work/statement-on-ai-risk

OTHER RESOURCES MENTIONED
* Steven Pinker’s new book on mutual knowledge, When Everyone Knows That Everyone Knows...: https://stevenpinker.com/publications/when-everyone-knows-everyone-knows-common-knowledge-and-mysteries-money-power-and
* Scott Alexander’s “Ethnic Tension and Meaningless Arguments”: https://slatestarcodex.com/2014/11/04/ethnic-tension-and-meaningless-arguments/

PREVIOUS EPISODES REFERENCED
Holly’s previous Doom Debates appearance debating the California SB 1047 bill: https://www.youtube.com/watch?v=xUP3GywD0yM
Liron’s interview with Eliezer Yudkowsky about the IABIED launch: https://www.youtube.com/watch?v=wQtpSQmMNP0

Ex-Twitch Founder and OpenAI Interim CEO Emmett Shear is one of the rare established tech leaders to lend his name and credibility to Eliezer Yudkowsky’s warnings about AI existential risk.

Even though he disagrees on some points, he chose to endorse the new book If Anyone Builds It, Everyone Dies:

“Soares and Yudkowsky lay out, in plain and easy-to-follow terms, why our current path toward ever-more-powerful AIs is extremely dangerous.”

In this interview from the IABIED launch party, we dive into Emmett’s endorsement, why the current path is so dangerous, and what he hopes to achieve by taking a different approach at his new startup, Softmax.

Watch the full IABIED livestream here: https://www.youtube.com/watch?v=lRITRf-jH1g

Watch my reaction to Emmett’s talk about Softmax to see why I’m not convinced his preferred alignment track is likely to work: https://www.youtube.com/watch?v=CBN1E1fvh2g