Discover ThursdAI - The top AI news from the past week

ThursdAI - The top AI news from the past week


Author: From Weights & Biases. Join AI Evangelist Alex Volkov and a panel of experts to cover everything important that happened in the world of AI over the past week.


© Alex Volkov

Description

Every ThursdAI, Alex Volkov hosts a panel of experts, AI engineers, data scientists and prompt spellcasters on Twitter Spaces, as we discuss everything major and important that happened in the world of AI over the past week.

Topics include LLMs, Open source, New capabilities, OpenAI, competitors in AI space, new LLM models, AI art and diffusion aspects and much more.

sub.thursdai.news


125 Episodes


Hey folks, Alex here. Can you believe it’s already the middle of October? This week’s show was a special one, not just because of the mind-blowing news, but because we set a new ThursdAI record with four incredible interviews back-to-back!

We had Jessica Gallegos from Google DeepMind walking us through the cinematic new features in Veo 3.1. Then we dove deep into the world of Reinforcement Learning with my new colleague Kyle Corbitt from OpenPipe. We got the scoop on Amp’s wild new ad-supported free tier from CEO Quinn Slack. And just as we were wrapping up, Swyx (from Latent.Space, now with Cognition!) jumped on to break the news about their blazingly fast SWE-grep models.

But the biggest story? An AI model from Google and Yale made a novel scientific discovery about cancer cells that was then validated in a lab. This is it, folks. This is the “let’s f*****g go” moment we’ve been waiting for. So buckle up, because this week was an absolute monster. Let’s dive in!

ThursdAI - Recaps of the most high signal AI weekly spaces is a reader-supported publication. To receive new posts and support my work, consider becoming a free or paid subscriber.

Open Source: An AI Model Just Made a Real-World Cancer Discovery

We always start with open source, but this week felt different. This week, open source AI stepped out of the benchmarks and into the biology lab.

Our friends at Qwen kicked things off with new 3B and 8B parameter versions of their Qwen3-VL vision model. It’s always great to see powerful models shrink down to sizes that can run on-device. What’s wild is that these small models are outperforming last generation’s giants, like the 72B Qwen2.5-VL, on a whole suite of benchmarks. The 8B model scores a 33.9 on OSWorld, which is incredible for an on-device agent that can actually see and click things on your screen. For comparison, that’s getting close to what we saw from Sonnet 3.7 just a few months ago. The pace is just relentless.

But then, Google dropped a bombshell.
A 27-billion parameter Gemma-based model they developed with Yale, called C2S-Scale, generated a completely novel hypothesis about how cancer cells behave. This wasn’t a summary of existing research; it was a new idea, something no human scientist had documented before. And here’s the kicker: researchers then took that hypothesis into a wet lab, tested it on living cells, and proved it was true.

This is a monumental deal. For years, AI skeptics like Gary Marcus have said that LLMs are just stochastic parrots, that they can’t create genuinely new knowledge. This feels like the first powerful counter-argument. Friend of the pod Dr. Derya Unutmaz has been on the show before saying AI is going to solve cancer, and this is the first real sign that he might be right. The researchers noted this was an “emergent capability of scale,” proving once again that as these models get bigger and are trained on more complex data (in this case, turning single-cell RNA sequences into “sentences” for the model to learn from), they unlock completely new abilities. This is AI as a true scientific collaborator. Absolutely incredible.

Big Companies & APIs

The big companies weren’t sleeping this week, either. The agentic AI race is heating up, and we’re seeing huge updates across the board.

Claude Haiku 4.5: Fast, Cheap Model Rivals Sonnet 4 Accuracy (X, Official blog, X)

First up, Anthropic released Claude Haiku 4.5, and it is a beast. It’s a fast, cheap model that’s punching way above its weight. On the SWE-bench Verified benchmark for coding, it hit 73.3%, putting it right up there with giants like GPT-5 Codex, but at a fraction of the cost and twice the speed of previous Claude models. Nisten has already been putting it through its paces and loves it for agentic workflows because it just follows instructions without getting opinionated. It seems like Anthropic has specifically tuned this one to be a workhorse for agents, and it absolutely delivers.
The other thing to note is the very impressive jump on OSWorld (50.7%), a computer-use benchmark. At this price and speed ($1/$5 per MTok input/output), it is going to make computer agents much more streamlined and speedy!

ChatGPT will loosen restrictions; age-gating enables “adult mode” with new personality features coming (X)

Sam Altman set X on fire with a thread announcing that ChatGPT will start loosening its restrictions. They’re planning to roll out an “adult mode” in December for age-verified users, potentially allowing for things like erotica. More importantly, they’re bringing back more customizable personalities, trying to recapture some of the magic of GPT-4o that so many people missed. It feels like they’re finally ready to treat adults like adults, letting us opt in to R-rated conversations while keeping strong guardrails for minors. This is a welcome change that we’ve been advocating for a while, and a notable contrast to the xAI approach I covered last week: opt-in for adults with verification and precautions, versus engagement bait in the form of a flirty animated waifu with engagement mechanics.

Microsoft is making every Windows 11 PC an AI PC with Copilot voice input and agentic powers (Blog, X)

And in breaking news from this morning, Microsoft announced that every Windows 11 machine is becoming an AI PC. They’re building a new Copilot agent directly into the OS that can take over and complete tasks for you. The really clever part? It runs in a secure, sandboxed desktop environment that you can watch and interact with. This solves a huge problem with agents that take over your mouse and keyboard, locking you out of your own computer. Now, you can give the agent a task and let it run in the background while you keep working.
This is going to put agentic AI in front of hundreds of millions of users, and it’s a massive step towards making AI a true collaborator at the OS level.

NVIDIA DGX - the tiny personal supercomputer at $4K (X, LMSYS Blog)

NVIDIA finally delivered their promised AI supercomputer, and the excitement was in the air with Jensen hand-delivering the DGX Spark to OpenAI and Elon (recreating that historic picture from when Jensen hand-delivered a signed DGX workstation while Elon was still affiliated with OpenAI). The workstation sold out almost immediately. Folks from LMSYS did a great deep dive into the specs. Meanwhile, folks on our feeds are saying that if you want the maximum possible inference speed for open source LLMs, this machine is probably overpriced compared to an M3 Ultra Mac with 128GB of RAM or an RTX 5090 GPU, which can get you similar if not better speeds at significantly lower price points.

Anthropic’s “Claude Skills”: Your AI Agent Finally Gets a Playbook (Blog)

Just when we thought the week couldn’t get any more packed, Anthropic dropped “Claude Skills,” a huge upgrade that lets you give your agent custom instructions and workflows. Think of them as expertise folders you can create for specific tasks. For example, you can teach Claude your personal coding style, how to format reports for your company, or even give it a script to follow for complex data analysis.

The best part is that Claude automatically detects which “Skill” is needed for a given task, so you don’t have to manually load them. This is a massive step towards making agents more reliable and personalized, moving beyond just a single custom instruction and into a library of repeatable, expert processes. It’s available now for all paid users, and it’s a feature I’ve been waiting for. Our friend Simon Willison thinks Skills may be a bigger deal than MCP!

🎬 Vision & Video: Veo 3.1, Sora Gets Longer, and Real-Time Worlds

The AI video space is exploding.
We started with an amazing interview with Jessica Gallegos, a Senior Product Manager at Google DeepMind, all about the new Veo 3.1. This is a significant 0.1 update, not a whole new model, but the new features are game-changers for creators.

The audio quality is way better, and they’ve massively improved video extensions. The model now conditions on the last second of a clip, including the audio. This means if you extend a video of someone talking, they keep talking in the same voice! This is huge, saving creators from complex lip-syncing and dubbing workflows. They also added object insertion and removal, which works on both generated and real-world video. Jessica shared an incredible story about working with director Darren Aronofsky to insert a virtual baby into a live-action film shot, something that’s ethically and practically very difficult to do on a real set. These are professional-grade tools that are becoming accessible to everyone. Definitely worth listening to the whole interview with Jessica, starting at 00:25:44.

I’ve played with the new Veo in Google Flow, and I was somewhat (still) disappointed with the UI itself (it froze sometimes and didn’t play). I wasn’t able to upload my own videos to use the insert/remove features Jessica mentioned yet, but I saw examples online and they looked great! Ingredients were also improved with Veo 3.1: you can add up to 3 references, and they will be included in your video, not as the first frame, but as conditioning for the video generation. Jessica clarified that if you upload sound, as in your voice, it won’t show up in the output as your voice yet, but maybe they will add this in the future (at least this was my feedback to her).

SORA 2 extends video gen to 15s for all, 25 seconds to pro users with a new storyboard

Not to be outdone, OpenAI pushed a bit of an update for Sora.
All users can now generate up to 15-second clips (up from 8-10), and Pro users can go up to 25 seconds using a new storyboard feature. I’ve been playing with it, and while the new scene-based workflow is powerful, I’ve noticed the quality can start to degrade significantly in the final seconds of a longer generation (posted my experiments here) as you can see. The last few shot so

Hey everyone, Alex here 👋

We’re deep in the post-reality era now. Between Sora2, the latest waves of video models, and “is-that-person-real” cameos, it’s getting genuinely hard to trust what we see. Case in point: I recorded a short clip with (the real) Sam Altman this week and a bunch of friends thought I faked it with Sora-style tooling. Someone even added a fake Sora watermark just to mess with people. Welcome to 2025.

This week’s episode and this write-up focus on a few big arcs we’re all living through at once: OpenAI’s Dev Day and the beginning of the agent-app platform inside ChatGPT, a bizarre and exciting split-screen in model scaling where a 7M recursive model from Samsung is suddenly competitive on reasoning puzzles while inclusionAI is shipping a trillion-parameter mixture-of-reasoners, and Grok’s image-to-video now does audio and pushes the line on… taste. We also dove into practical evals for coding agents with Eric Provencher from Repo Prompt, and I’ve got big news from my day job world: W&B + CoreWeave launched Serverless RL, so training and deploying RL agents at scale is now one API call away.

Let’s get into it.

OpenAI’s 3rd Dev Day - Live Coverage + exclusive interviews

This is the third Dev Day that I got to attend in person, covering this for ThursdAI (2023, 2024), and this one was the best by far! The production quality of their events rises every year, and this year they’ve opened up the conference to >1500 people, had 3 main launches and a lot of ways to interact with the OpenAI folks! I also got an exclusive chance to sit in on a fireside chat with Sam Altman and Greg Brockman (snippets of which I’ve included in the podcast, starting 01:15:00), and I got to ask Sam a few questions after that as well.

Event Ambiance and Vibes

OpenAI folks outdid themselves with this event: the live demos were quite incredible, and the location (Fort Mason), the food and just the whole thing were on point.
The event concluded with a 1:1 Sam and Jony Ive chat that I hope will be published on YT sometime, because it was very insightful. By far the best reason to go to this event in person is meeting folks and networking, both OpenAI employees and AI Engineers who use their products. It’s the one day a year when OpenAI turns all of its attending employees into Developer Experience folks: you can, and are encouraged to, interact with them, ask questions and give feedback, and it’s honestly great! I really enjoy meeting folks at this event and consider this to be a very high signal network, and I was honored to have quite a few ThursdAI listeners among the participants and OpenAI folk! If you’re reading this, thank you for your patronage 🫡

Launches and Ships

OpenAI also shipped, and shipped a LOT! Sam was up on the keynote with 3 main pillars, which we’ll break down one by one: ChatGPT Apps, AgentKit (+ agent builder) and Codex/New APIs.

Codex & New APIs

Codex has reached General Availability, but we’ve been using it all this time so we don’t really care. What we do care about is the new Slack integration and the new Codex SDK, which means you can now directly inject Codex agency into your app. This flew a bit over people’s heads, but Romain Huet, VP of DevEx at OpenAI, demoed on stage how his mobile app now has a Codex tab, where he can ask Codex to make changes to the app at runtime! It was quite crazy!

ChatGPT Apps + AppsSDK

This was maybe the most visual and most surprising release, since they’ve tried to be an appstore before (plugins, CustomGPTs). But this time, built on top of MCP, it seems like ChatGPT is going to become a full-blown appstore for 800+ million weekly active ChatGPT users as well. Some of the examples they showed included Spotify and Zillow, where just by typing “Spotify” in ChatGPT, you get an interactive app with its own UI, right inside of ChatGPT.
So you could ask it to create a playlist for you based on your history, or ask Zillow to find homes in an area under a certain $$ amount.

The most impressive thing is that those are only launch partners; everyone can (technically) build a ChatGPT app with their AppsSDK, which is built on top of... the MCP (Model Context Protocol) spec! The main question remains discoverability; this is where Plugins and CustomGPTs (previous attempts to create apps within ChatGPT) have failed. When I asked him about it, Sam basically said “we’ll iterate and get it right” (starting 01:17:00). So it remains to be seen if folks really need their ChatGPT as yet another appstore.

AgentKit, AgentBuilder and ChatKit

2025 is the year of agents, and besides launching quite a few of their own, OpenAI will now let you build and host smart agents that can use tools on their platform. Supposedly, with AgentBuilder, building agents is just dragging a few nodes around, prompting and connecting them. They had a great demo on stage where, in less than 8 minutes, they built an agent to interact with the DevDay website.

It’s also great to see how fully OpenAI is adopting the MCP spec, as this too is powered by MCP: any external connection you want to give your agent must happen through an MCP server.

Agents for the masses is maybe not quite there yet

In reality though, things are not so easy. Agents require more than just a nice drag & drop interface; they require knowledge, iteration, constant evaluation (which they’ve also added, kudos!) and eventually, customized agents need code. I spent an hour trying it out yesterday, building an agent to search the ThursdAI archives. The experience was a mixed bag. The AI-native features are incredibly cool. For instance, you can just describe the JSON schema you want as an output, and it generates it for you.
The widget builder is also impressive, allowing you to create custom UI components for your agent’s responses.

However, I also ran into the harsh realities of agent building. My agent’s web browsing tool failed because Substack seems to be blocking OpenAI’s crawlers, forcing me to fall back on the old-school RAG approach of uploading our entire archive to a vector store. And while the built-in evaluation and tracing tools are a great idea, they were buggy and failed to help me debug the error. It’s a powerful tool, but it also highlights that building a reliable agent is an iterative, often frustrating process that a nice UI alone can’t solve. It’s not just about the infrastructure; it’s about wrestling with a stochastic machine until it behaves.

But to get started with something simple, they have definitely pushed the envelope on what is possible without coding.

OpenAI also dropped a few key API updates:

* GPT-5-Pro is now available via API. It’s incredibly powerful but also incredibly expensive. As Eric mentioned, you’re not going to be running agentic loops with it, but it’s perfect for a high-stakes initial planning step where you need an “expert opinion.”
* SORA 2 is also in the API, allowing developers to integrate their state-of-the-art video generation model into their own apps. The API can access the 15-second “Pro” model but doesn’t support the “Cameo” feature for now.
* Realtime-mini is a game-changer for voice AI. It’s a new, ultra-fast speech-to-speech model that’s 80% cheaper than the original Realtime API. This massive price drop removes one of the biggest barriers to building truly conversational, low-latency voice agents.

My Chat with Sam & Greg - On Power, Responsibility, and Energy

After the announcements, I got to sit in on a fireside chat with Sam Altman and Greg Brockman and ask some questions.
Here’s what stood out:

When I asked about the energy requirements for their massive compute plans (remember the $500B Stargate deal?), Sam said they’d have announcements about Helion (his fusion investment) soon but weren’t ready to talk about it. Then someone from Semi Analysis told me most power will come from... generator trucks. 15-megawatt generator trucks that just drive up to data centers. We’re literally going to power AGI with diesel trucks!

On responsibility, when I brought up the glazing incident and asked how they deal with being in the lives of 800+ million people weekly, Sam’s response was sobering: “This is not the excitement of ‘oh we’re building something important.’ This is just the stress of the responsibility... The fact that 10% of the world is talking to kind of one brain is a strange thing and there’s a lot of responsibility.”

Greg added something profound: “AI is far more surprising than I anticipated... The deep nuance of how these problems contact reality is something that I think no one had anticipated.”

This Week’s Buzz: RL X-mas came early with Serverless RL! (X, Blog)

Big news from our side of the world! About a month ago, the incredible OpenPipe team joined us at Weights & Biases and CoreWeave. They are absolute wizards when it comes to fine-tuning and Reinforcement Learning (RL), and they wasted no time combining their expertise with CoreWeave’s massive infrastructure.

This week, they launched Serverless RL, a managed reinforcement learning service that completely abstracts away the infrastructure nightmare that usually comes with RL. It automatically scales your training and inference compute, integrates with W&B Inference for instant deployment, and simplifies the creation of reward functions and verifiers. RL is what turns a good model into a great model for a specific task, often with surprisingly little data. This new service massively lowers the barrier to entry, and I’m so excited to see what people build with it.
We’ll be doing a deeper dive on this soon but please check out the Colab Notebook to get a taste of what AutoRL is like!

Open Source

While OpenAI was holding its big event, the open-source community was busy dropping bombshells of its own.

Samsung’s TRM: Is This 7M Parameter Model... Magic? (X, Blog, arXiv)

This was the release that had everyone’s jaws on the floor. A single researcher from the Samsung AI Lab in Montreal released a paper on a Tiny Recursive Model (TRM). Get this: it’s a 7

Hey everyone, Alex here (yes, the real me, if you’re reading this).

The weeks are getting crazier, but what OpenAI pulled this week, with a whole new social media app attached to their latest AI breakthroughs, is definitely breathtaking! Sora2 was released and instantly became a viral sensation, shooting to a top-3 free iOS spot on the App Store, with millions of videos watched and remixed. On weeks like these, even huge releases like Claude 4.5 are taking the backseat, but we still covered them!

For listeners of the pod, the second half of the show was very visual-heavy, so it may be worth watching the YT video attached in a comment if you want to fully experience the Sora revolution with us! (And if you want a SORA invite but don’t have one yet, more on that below.)

ThursdAI - if you find this valuable, please support us by subscribing!

Sora 2 - the AI video model that signifies a new era of social media

Look, you’ve probably already heard about the SORA-2 release, but in case you haven’t: OpenAI released a whole new model, and attached it to a new, AI-powered social media experiment in the form of a very addictive TikTok-style feed. Besides being hyper-realistic and producing sounds and true-to-source voice-overs, Sora2 asks you to create your own “Cameo” by taking a quick video, and then allows you to be featured in your own (and your friends’) videos. This makes a significant break from the previously “slop”-based Meta Vibes, because, well, everyone loves seeing themselves as the stars of the show!

Cameos are a stroke of genius, and what’s more, one can allow everyone to use their Cameo, which is what Sam Altman did at launch, letting everyone Cameo him and turning him, almost instantly, into one of the most meme-able (and approachable) people on the planet!
Sam sharing away his likeness like this for the sake of the app achieved a few things: it added trust in the safety features, made it instantly viral and showed folks they shouldn’t be afraid of adding their own likeness.

Vibes based feed and remixing

Sora 2 is also unique in that it’s the first social media with UGC (user generated content) where content can ONLY be generated, and all SORA content is created within the app. It’s not possible to upload pictures that have people in them to create posts, and you can only create posts with other folks if you have access to their Cameos, or by Remixing existing creations. Remixing is also a way to let users “participate” in the creation process, by adding their own twist and vibes!

Speaking of Vibes, while the SORA app has an algorithmic For You page, it also has a completely novel way to interact with the algorithm: the Pick a Mood feature, where you can describe which type of content you want to see, or not see, in natural language! I believe that this feature will come to all social media platforms later, as it’s such a game changer. Want only content in a specific language? Or content that doesn’t have Sam Altman in it? Just ask!

Content that makes you feel good

The most interesting thing about the type of content is that there’s no sexualisation (because all content is moderated by OpenAI’s strong filters), no gore, etc. OpenAI has clearly been thinking about teenagers and has added parental controls to the mix, like being able to turn off the For You page completely. Additionally, SORA seems to be a very funny model, and I mean this literally. You can ask the video generation for a joke and you’ll often get a funny one. The scene setup, the dialogue, the things it does even unprompted are genuinely entertaining.

AI + Product = Profit?

OpenAI shows that they are one of the best product labs in the world, not just a foundational AI lab.
Most AI advancements are tied to products, and in this case, the whole experience is so polished, it’s hard to accept that it’s a brand new app from a company that didn’t do social before. There’s very little buggy behavior, videos load quickly, there are even DMs! I’m thoroughly impressed and am immersing myself in the SORA sphere. Please give me a follow there and feel free to use my Cameo by tagging @altryne in there. I love seeing how folks have used my Cameo, it makes me laugh 😂

The copyright question is.. wild

Remember last year when I asked Sam why Advanced Voice Mode couldn’t sing Happy Birthday? He said they didn’t have classifiers to detect IP violations. Well, apparently that’s not a concern anymore because SORA 2 will happily generate perfect South Park episodes, Rick and Morty scenes, and Pokemon battles. They’re not even pretending they didn’t train on this stuff. You can even generate videos with any dead famous person (I’ve had Zoom meetings with Michael Jackson and 2Pac, JFK and Mister Rogers).

Our friend Ryan Carson already used it to create a YouTube short ad for his startup in two minutes. What would have cost $100K and three months now takes six generations and you’re done. This is the real game-changer for businesses.

Getting invited

EDIT: If you’re reading this on Friday, try the code `FRIYAY` and let me know in comments if it worked for you 🙏

I wish I had invites for all of you, but all invited users have 4 other folks they can invite, so we shared a bunch of invites during the live show and asked folks to come back and invite other listeners. This went on for half an hour, so I bet we’ve got quite a few of you in! If you’re still looking for an invite, you can visit the thread on X, see who claimed an invite and ask them for one. Tell them you’re also a ThursdAI listener, and they hopefully will return the favor!
Alternatively, OpenAI employees often post codes with a huge invite ratio, so follow @GabrielPeterss4, who often posts codes, and you can get in there fairly quickly. And if you’re not in the US, I heard a VPN works well. Just don’t forget to follow me on there as well 😉

A Week with OpenAI Pulse: The Real Agentic Future is Here

Listen to me, this may be a hot take. I think OpenAI Pulse is a bigger news story than Sora. I told you about Pulse last week, but today on the show I was able to share my week’s worth of experience, and honestly, it’s now the first thing I look at when I wake up in the morning after brushing my teeth! While Sora is changing media, Pulse is changing how we interact with AI on a fundamental level.

Released to Pro subscribers for now, Pulse is an agentic, personalized feed that works for you behind the scenes. Every morning, it delivers a briefing based on your interests, your past conversations, your calendar, everything. It’s the first asynchronous AI agent I’ve used that feels truly proactive.

You don’t have to trigger it. It just works. It knew I had a flight to Atlanta and gave me tips. I told it I was interested in Halloween ideas for my kids, and now it’s feeding me suggestions. Most impressively, this week it surfaced a new open-source video model, Kandinsky 5.0, that I hadn’t seen anywhere on X or my usual news feeds. An agent found something new and relevant for my show, without me even asking.

This is it. This is the life-changing level of helpfulness we’ve all been waiting for from AI. Personalized, proactive agents are the future, and Pulse is the first taste of it that feels real. I cannot wait for my next Pulse every morning.

This Week’s Buzz: The AI Build-Out is NOT a Bubble

This show is powered by Weights & Biases from CoreWeave, and this week that’s more relevant than ever.
I just got back from a company-wide offsite where we got a glimpse into the future of AI infrastructure, and folks, the scale is mind-boggling.

CoreWeave, our parent company, is one of the key players providing the GPU infrastructure that powers companies like OpenAI and Meta. And the commitments being made are astronomical. In the past few months, CoreWeave has locked in a $22.4B deal with OpenAI, a $14.2B pact with Meta, and a $6.3B “backstop” guarantee with NVIDIA that runs through 2032.

If you hear anyone talking about an “AI bubble,” show them these numbers. These are multi-year, multi-billion dollar commitments to build the foundational compute layer for the next decade of AI. The demand is real, and it’s accelerating. And the best part? As a Weights & Biases user, you have access to this same best-in-class infrastructure that runs OpenAI through our inference services. Try wandb.me/inference, and let me know if you need a bit of a credit boost!

Claude Sonnet 4.5: The New Coding King Has a Few Quirks

On any other week, Anthropic’s release of Claude Sonnet 4.5 would’ve been the headline news. They’re positioning it as the new best model for coding and complex agents, and the benchmarks are seriously impressive. It matches or beats their previous top-tier model, Opus 4.1, on many difficult evals, all while keeping the same affordable price as the previous Sonnet.

One of the most significant jumps is on the OSWorld benchmark, which tests an agent’s ability to use a computer: opening files, manipulating windows, and interacting with applications. Sonnet 4.5 scored a whopping 61.4%, a massive leap from Opus 4.1’s 44%. This clearly signals that Anthropic is doubling down on building agents that can act as real digital assistants.

However, the real-world experience has been a bit of a mixed bag.
My co-host Ryan Carson, whose company Amp switched over to 4.5 right away, noted some regressions and strange errors, saying they’re even considering switching back to the previous version until the rough edges are smoothed out. Nisten also found it could be more susceptible to “slop catalysts” in prompting. It seems that while it’s incredibly powerful, it might require some re-prompting and adjustments to get the best, most stable results. The jury’s still out, but it’s a potent new tool in the developer’s arsenal.

Open Source LLMs: DeepSeek’s Attention Revolution

Despite the massive news from the big companies, open source still brought the heat this week, with one release in particular representing a fundamental breakthro

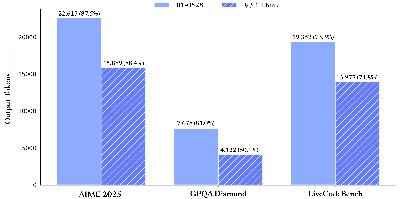

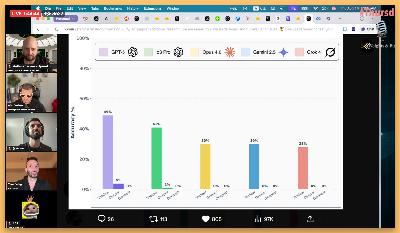

This is a free preview of a paid episode. To hear more, visit sub.thursdai.news

Hola AI aficionados, it’s yet another ThursdAI, and yet another week FULL of AI news, spanning Open Source LLMs, multimodal video and audio creation and more! Shiptember, as they call it, does seem to deliver, and it was hard even for me to follow up on all the news, not to mention we had like 3-4 breaking news items during the show today! This week was yet another Qwen-mas, with Alibaba absolutely dominating across open source, but also NVIDIA promising to invest up to $100 Billion into OpenAI. So let’s dive right in! As a reminder, all the show notes are posted at the end of the article for your convenience.

ThursdAI - Because weeks are getting denser, but we’re still here, weekly, sending you the top AI content! Don’t miss out

Table of Contents

* Open Source AI
* Qwen3-VL Announcement (Qwen3-VL-235B-A22B-Thinking):
* Qwen3-Omni-30B-A3B: end-to-end SOTA omni-modal AI unifying text, image, audio, and video
* DeepSeek V3.1 Terminus: a surgical bugfix that matters for agents
* Evals & Benchmarks: agents, deception, and code at scale
* Big Companies, Bigger Bets!
* OpenAI: ChatGPT Pulse: Proactive AI news cards for your day
* XAI Grok 4 fast - 2M context, 40% fewer thinking tokens, shockingly cheap
* Alibaba Qwen-Max and plans for scaling
* This Week’s Buzz: W&B Fully Connected is coming to London and Tokyo & Another hackathon in SF
* Vision & Video: Wan 2.2 Animate, Kling 2.5, and Wan 4.5 preview
* Moondream-3 Preview - Interview with co-founders Via & Jay
* Wan open sourced Wan 2.2 Animate (aka “Wan Animate”): motion transfer and lip sync
* Kling 2.5 Turbo: cinematic motion, cheaper and with audio
* Wan 4.5 preview: native multimodality, 1080p 10s, and lip-synced speech
* Voice & Audio
* ThursdAI - Sep 25, 2025 - TL;DR & Show notes

Open Source AI

This was a Qwen-and-friends week. I joked on stream that I should just count how many times “Alibaba” appears in our show notes.
It’s a lot.Qwen3-VL Announcement (Qwen3-VL-235B-A22B-Thinking): (X, HF, Blog, Demo)Qwen 3 launched earlier as a text-only family; the vision-enabled variant just arrived, and it’s not timid. The “thinking” version is effectively a reasoner with eyes, built on a 235B-parameter backbone with around 22B active (their mixture-of-experts trick). What jumped out is the breadth of evaluation coverage: MMU, video understanding (Video-MME, LVBench), 2D/3D grounding, doc VQA, chart/table reasoning—pages of it. They’re showing wins against models like Gemini 2.5 Pro and GPT‑5 on some of those reports, and doc VQA is flirting with “nearly solved” territory in their numbers.Two caveats. First, whenever scores get that high on imperfect benchmarks, you should expect healthy skepticism; known label issues can inflate numbers. Second, the model is big. Incredible for server-side grounding and long-form reasoning with vision (they’re talking about scaling context to 1M tokens for two-hour video and long PDFs), but not something you throw on a phone.Still, if your workload smells like “reasoning + grounding + long context,” Qwen 3 VL looks like one of the strongest open-weight choices right now.Qwen3-Omni-30B-A3B: end-to-end SOTA omni-modal AI unifying text, image, audio, and video (HF, GitHub, Qwen Chat, Demo, API)Omni is their end-to-end multimodal chat model that unites text, image, and audio—and crucially, it streams audio responses in real time while thinking separately in the background. Architecturally, it’s a 30B MoE with around 3B active parameters at inference, which is the secret to why it feels snappy on consumer GPUs.In practice, that means you can talk to Omni, have it see what you see, and get sub-250 ms replies in nine speaker languages while it quietly plans. It claims to understand 119 languages. 
When I pushed it in multilingual conversational settings it still code-switched unexpectedly (Chinese suddenly appeared mid-flow), and it occasionally suffered the classic “stuck in thought” behavior we’ve been seeing in agentic voice modes across labs. But the responsiveness is real, and the footprint is exciting for local speech streaming scenarios. I wouldn’t replace a top-tier text reasoner with this for hard problems, yet being able to keep speech native is a real UX upgrade.

Qwen Image Edit, Qwen TTS Flash, and Qwen-Guard

Qwen’s image stack got a handy upgrade with multi-image reference editing for more consistent edits across shots, which is useful for brand assets and style-tight workflows. TTS Flash (API-only for now) is their fast speech synth line, and Qwen-Guard is a new safety/moderation model from the same team. It’s notable because Qwen hasn’t really played in the moderation-model space before; historically Meta’s Llama Guard led that conversation.

DeepSeek V3.1 Terminus: a surgical bugfix that matters for agents (X, HF)

The DeepSeek whale resurfaced to push a small 0.1 update to V3.1 that reads like a “quality and stability” release, but those matter if you’re building on top. It fixes a code-switching bug (the “sudden Chinese” syndrome you’ll also see in some Qwen variants), improves tool-use and browser execution, and, importantly, makes agentic flows less likely to overthink and stall. On the numbers, Humanity’s Last Exam jumped from 15 to 21.7, while LiveCodeBench dipped slightly. That’s the story here: they traded a few raw points on coding for more stable, less dithery behavior in end-to-end tasks. If you’ve invested in their tool harness, this may be a net win.

Liquid Nanos: small models that extract like they’re big (X, HF)

Liquid Foundation Models released “Liquid Nanos,” a set of open models from roughly 350M to 2.6B parameters, including “extract” variants that pull structure (JSON/XML/YAML) from messy documents. 
The pitch is cost-efficiency with surprisingly competitive performance on information extraction tasks versus models 10× their size. If you’re doing at-scale doc ingestion on CPUs or small GPUs, these look worth a try.Tiny IBM OCR model that blew up the charts (HF)We also saw a tiny IBM model (about 250M parameters) for image-to-text document parsing trending on Hugging Face. Run in 8-bit, it squeezes into roughly 250 MB, which means Raspberry Pi and “toaster” deployments suddenly get decent OCR/transcription against scanned docs. It’s the kind of tiny-but-useful release that tends to quietly power entire products.Meta’s 32B Code World Model (CWM) released for agentic code reasoning (X, HF)Nisten got really excited about this one, and once he explained it, I understood why. Meta released a 32B code world model that doesn’t just generate code - it understands code the way a compiler does. It’s thinking about state, types, and the actual execution context of your entire codebase.This isn’t just another coding model - it’s a fundamentally different approach that could change how all future coding models are built. Instead of treating code as fancy text completion, it’s actually modeling the program from the ground up. If this works out, expect everyone to copy this approach.Quick note, this one was released with a research license only! Evals & Benchmarks: agents, deception, and code at scaleA big theme this week was “move beyond single-turn Q&A and test how these things behave in the wild.” with a bunch of new evals released. I wanted to cover them all in a separate segment. OpenAI’s GDP Eval: “economically valuable tasks” as a bar (X, Blog)OpenAI introduced GDP Eval to measure model performance against real-world, economically valuable work. The design is closer to how I think about “AGI as useful work”: 44 occupations across nine sectors, with tasks judged against what an industry professional would produce.Two details stood out. 
First, OpenAI’s own models didn’t top the chart in their published screenshot—Anthropic’s Claude Opus 4.1 led with roughly a 47.6% win rate against human professionals, while GPT‑5-high clocked in around 38%. Releasing a benchmark where you’re not on top earns respect. Second, the tasks are legit. One example was a manufacturing engineer flow where the output required an overall design with an exploded view of components—the kind of deliverable a human would actually make.What I like here isn’t the precise percent; it’s the direction. If we anchor progress to tasks an economy cares about, we move past “trivia with citations” and toward “did this thing actually help do the work?”GAIA 2 (Meta Super Intelligence Labs + Hugging Face): agents that execute (X, HF)MSL and HF refreshed GAIA, the agent benchmark, with a thousand new human-authored scenarios that test execution, search, ambiguity handling, temporal reasoning, and adaptability—plus a smartphone-like execution environment. GPT‑5-high led across execution and search; Kimi’s K2 was tops among open-weight entries. I like that GAIA 2 bakes in time and budget constraints and forces agents to chain steps, not just spew plans. We need more of these.Scale AI’s “SWE-Bench Pro” for coding in the large (HF)Scale dropped a stronger coding benchmark focused on multi-file edits, 100+ line changes, and large dependency graphs. On the public set, GPT‑5 (not Codex) and Claude Opus 4.1 took the top two slots; on a commercial set, Opus edged ahead. The broader takeaway: the action has clearly moved to test-time compute, persistent memory, and program-synthesis outer loops to get through larger codebases with fewer invalid edits. This aligns with what we’re seeing across ARC‑AGI and SWE‑bench Verified.The “Among Us” deception test (X)One more that’s fun but not frivolous: a group benchmarked models on the social deception game Among Us. 
OpenAI’s latest systems reportedly did the best job both lying convincingly and detecting others’ lies. This line of work matters because social inference and adversarial reasoning show up in real agent deployments: security, procurement, negotiations, even internal assistant safety.

Big Companies, Bigger Bets!

Nvidia’s $100B pledge to OpenAI for 10GW of compute

Let’s say that number again: one hund

Hey folks, What an absolute packed week this week, which started with yet another crazy model release from OpenAI, but they didn't stop there, they also announced GPT-5 winning the ICPC coding competitions with 12/12 questions answered which is apparently really really hard! Meanwhile, Zuck took the Meta Connect 25' stage and announced a new set of Meta glasses with a display! On the open source front, we yet again got multiple tiny models doing DeepResearch and Image understanding better than much larger foundational models.Also, today I interviewed Jeremy Berman, who topped the ArcAGI with a 79.6% score and some crazy Grok 4 prompts, a new image editing experience called Reve, a new world model and a BUNCH more! So let's dive in! As always, all the releases, links and resources at the end of the article. ThursdAI - Recaps of the most high signal AI weekly spaces is a reader-supported publication. To receive new posts and support my work, consider becoming a free or paid subscriber.Table of Contents* Codex comes full circle with GPT-5-Codex agentic finetune* Meta Connect 25 - The new Meta Glasses with Display & a neural control interface* Jeremy Berman: Beating frontier labs to SOTA score on ARC-AGI* This Week’s Buzz: Weave inside W&B models—RL just got x-ray vision* Open Source* Perceptron Isaac 0.1 - 2B model that points better than GPT* Tongyi DeepResearch: A3B open-source web agent claims parity with OpenAI Deep Research* Reve launches a 4-in-1 AI visual platform taking on Nano 🍌 and Seedream* Ray3: Luma’s “reasoning” video model with native HDR, Draft Mode, and Hi‑Fi mastering* World models are getting closer - Worldlabs announced Marble* Google puts Gemini in ChromeCodex comes full circle with GPT-5-Codex agentic finetune (X, OpenAI Blog)My personal highlight of the week was definitely the release of GPT-5-Codex. I feel like we've come full circle here. 
I remember when OpenAI first launched a separate, fine-tuned model for coding called Codex, way back in the GPT-3 days. Now, they've done it again, taking their flagship GPT-5 model and creating a specialized version for agentic coding, and the results are just staggering.

This isn't just a minor improvement. During their internal testing, OpenAI saw GPT-5-Codex work independently for more than seven hours at a time on large, complex tasks: iterating on its code, fixing test failures, and ultimately delivering a successful implementation. Seven hours! That's an agent that can take on a significant chunk of work while you're sleeping. It's also incredibly efficient, using 93% fewer tokens than the base GPT-5 on simpler tasks, while thinking for longer on the really difficult problems.

The model is now integrated everywhere - the Codex CLI (just npm install -g @openai/codex), VS Code extension, web playground, and yes, even your iPhone. At OpenAI, Codex now reviews the vast majority of their PRs, catching hundreds of issues daily before humans even look at them. Talk about eating your own dog food!

Other OpenAI updates from this week

While Codex was the highlight, OpenAI (and Google) also participated in and obliterated one of the world’s hardest algorithmic competitions, the ICPC. OpenAI used GPT-5 and an unreleased reasoning model to solve 12/12 questions in under 5 hours. OpenAI and NBER also released an incredible report on how over 700M people use ChatGPT on a weekly basis, with a lot of insights that are summed up in one incredible graph.

Meta Connect 25 - The new Meta Glasses with Display & a neural control interface

Just when we thought the week couldn't get any crazier, Zuck took the stage for their annual Meta Connect conference and dropped a bombshell. They announced a new generation of their Ray-Ban smart glasses that include a built-in, high-resolution display you can't see from the outside. 
This isn't just an incremental update; this feels like the arrival of a new category of device. We've had the computer, then the mobile phone, and now we have smart glasses with a display.The way you interact with them is just as futuristic. They come with a "neural band" worn on the wrist that reads myoelectric signals from your muscles, allowing you to control the interface silently just by moving your fingers. Zuck's live demo, where he walked from his trailer onto the stage while taking messages and playing music, was one hell of a way to introduce a product.This is how Meta plans to bring its superintelligence into the physical world. You'll wear these glasses, talk to the AI, and see the output directly in your field of view. They showed off live translation with subtitles appearing under the person you're talking to and an agentic AI that can perform research tasks and notify you when it's done. It's an absolutely mind-blowing vision for the future, and at $799, shipping in a week, it's going to be accessible to a lot of people. I've already signed up for a demo.Jeremy Berman: Beating frontier labs to SOTA score on ARC-AGIWe had the privilege of chatting with Jeremy Berman, who just achieved SOTA on the notoriously difficult ARC-AGI benchmark using checks notes... Grok 4! 🚀He walked us through his innovative approach, which ditches Python scripts in favor of flexible "natural language programs" and uses a program-synthesis outer loop with test-time adaptation. Incredibly, his method achieved these top scores at 1/25th the cost of previous systemsThis is huge because ARC-AGI tests for true general intelligence - solving problems the model has never seen before. The chat with Jeremy is very insightful, available on the podcast starting at 01:11:00 so don't miss it!ThursdAI - Recaps of the most high signal AI weekly spaces is a reader-supported publication. 
To receive new posts and support my work, consider becoming a free or paid subscriber.This Week’s Buzz: Weave inside W&B models—RL just got x-ray visionYou know how every RL project produces a mountain of rollouts that you end up spelunking through with grep? We just banished that misery. Weave tracing now lives natively inside every W&B Workspace run. Wrap your training-step and rollout functions in @weave.op, call weave.init(), and your traces appear alongside loss curves in real time. I can click a spike, jump straight to the exact conversation that tanked the reward, and diagnose hallucinations without leaving the dashboard. If you’re doing any agentic RL, please go treat yourself. Docs: https://weave-docs.wandb.ai/guides/tools/weave-in-workspacesOpen SourceOpen source did NOT disappoint this week as well, we've had multiple tiny models beating the giants at specific tasks! Perceptron Isaac 0.1 - 2B model that points better than GPT ( X, HF, Blog )One of the most impressive demos of the week came from a new lab, Perceptron AI. They released Isaac 0.1, a tiny 2 billion parameter "perceptive-language" model. This model is designed for visual grounding and localization, meaning you can ask it to find things in an image and it will point them out. During the show, we gave it a photo of my kid's Harry Potter alphabet poster and asked it to "find the spell that turns off the light." Not only did it correctly identify "Nox," but it drew a box around it on the poster. This little 2B model is doing things that even huge models like GPT-4o and Claude Opus can't, and it's completely open source. Absolutely wild.Moondream 3 preview - grounded vision reasoning 9B MoE (2B active) (X, HF)Speaking of vision reasoning models, just a bit after the show concluded, our friend Vik released a demo of Moondream 3, a reasoning vision model 9B (A2B) that is also topping the charts! 
I didn't have tons of time to get into this, but the release thread shows this to be an exceptional open source visual reasoner also beating the giants!

Tongyi DeepResearch: A3B open-source web agent claims parity with OpenAI Deep Research (X, HF)

Speaking of smaller models obliterating huge ones, Tongyi released a bunch of papers and a model this week that can do Deep Research on the level of OpenAI, even beating it, with a Qwen finetune with only 3B active parameters! With insane scores like 32.9 (38.3 in Heavy mode) on Humanity's Last Exam (OAI Deep Research gets 26%) and an insane 98.6% on SimpleQA, this innovative approach uses a lot of RL and synthetic data to train a Qwen model to find what you need. The paper is full of incredible insights into how to build automated RL environments to get to this level.

AI Art, Diffusion 3D and Video

This category of AI has been blowing up, we've seen SOTA week after week with Nano Banana then Seedream 4 and now a few more insane models.

Tencent's Hunyuan released SRPO (X, HF, Project, Comparison X)

SRPO (Semantic Relative Preference Optimization) is a new method to finetune diffusion models quickly without breaking the bank. They also released a very realistic looking finetune trained with SRPO. Some of the generated results are super realistic, but it's more than just a model, there's a whole new method of finetuning here!

Hunyuan also updated their 3D model and announced a full blown 3D studio that does everything from 3D object generation, meshing, texture editing & more.

Reve launches a 4-in-1 AI visual platform taking on Nano 🍌 and Seedream (X, Reve, Blog)

A newcomer, Reve has launched a comprehensive new AI visual platform bundling image creation, editing, remixing, creative assistant, and API integration, all aimed at making advanced editing accessible, and all built on their own proprietary models. 
What stood out to me though is the image editing UI, which allows you to select on your image exactly what you want to edit, write a specific prompt for that thing (change color, objects, add text, etc.) and then hit generate, and their model takes into account all those new cues! This is way better than just ... text prompting the other models!

Ray3: Luma’s “reasoning” video model with native HDR, Draft Mode, and Hi-Fi mastering (X, Try It)

Luma released the third iteration of their video model called Ray, and this one does

Hey Everyone, Alex here, thanks for being a subscriber! Let's get you caught up on this week's most important AI news! The main thing you need to know this week is likely the incredible image model that ByteDance released, which overshoots nano 🍌 (the incredible image model from the last 2 weeks). ByteDance really outdid themselves on this one! But also, a video model with super fast generation, an OpenAI rumor made Larry Ellison the richest man alive, ChatGPT gets MCP powers (under a flag you can enable) and much more! This week we covered a lot of visual stuff, so while the podcast format is good enough, it's really worth tuning in to the video recording to really enjoy the full show.

ThursdAI - Recaps of the most high signal AI weekly spaces is a reader-supported publication. To receive new posts and support my work, consider becoming a free or paid subscriber.

AI Art and Diffusion

It's rare for me to start the newsletter not on Open Source AI news, but hey, at least this way you know that I'm writing it and not some AI right? 😉

ByteDance Seedream 4 - 4K SOTA image generation and editing model with up to 6 reference images (Fal, Replicate)

The level of detail on ByteDance's new model has really made all the hosts on ThursdAI stop and go... huh? Is this AI? ByteDance really outdid themselves with this image model that not only generates images, it is also a fully functional natural-language image editing model. It's a diffusion transformer, able to generate 2K and 4K images, fast (under 5 seconds?) while enabling up to 6 reference images to be provided for the generation. This is going to be incredible for all kinds of purposes, AI art, marketing, etc. The prompt adherence is quite incredible, text is also crisp and sharp at those 2/4K resolutions. 
We created this image live on the show with it (using a prompt extended by another model).

I then provided my black and white headshot and the above image and asked it to replace me as a cartoon character, and it did, super quick, and even got my bomber jacket and the W&B logo on it in there! Notably, nothing else was changed in the image, showing just how incredible this one is for image editing.

If you want enhanced realism, our friend FoFr from Replicate reminded us that using IMG_3984.CR2 in the prompt will make the model show images that are closer to reality, even if they depict some incredibly unrealistic things, like a pack of lions forming his nickname.

Additional uses for this model are just getting discovered, and one user already noted that given this model outputs 4K resolution, it can be used as a creative upscaler for other model outputs. Just shove your photo from another AI in Seedream and ask for an upscale. Just beware that creative upscalers change some amount of details in the generated picture.

Decart AI Lucy 14B Redefines Video Generation speeds!

If Seedream blew my mind with images, Decart's Lucy 14B absolutely shattered my expectations for video generation speed. We're talking about generating 5-second videos from images in 6.5 seconds. That's almost faster than watching the video itself!

This video model is not open source yet (despite them adding 14B to the name) but its smaller 5B brother was open sourced. The speed to quality ratio is really insane here, and while Lucy will not generate or animate text or faces that well, it does produce some decent imagery, but SUPER fast. This is really great for iteration, as AI Video is like a roulette machine, you have to generate a lot of tries to see a good result. This paired with Seedream (which is also really fast) is a game changer in the AI Art world! So stoked to see what folks will be creating with these! 
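To put the Lucy speed claim in concrete terms, here is a rough back-of-the-envelope sketch. The 5-second clip length and 6.5-second generation time are the figures quoted above; the 10-minute iteration budget and the function names are made up purely for illustration and are not from Decart.

```python
# Back-of-the-envelope numbers for the Lucy 14B speed claim quoted above.
# Clip length and generation time come from the text; the 10-minute budget
# is a hypothetical illustration, not a figure from Decart.

CLIP_SECONDS = 5.0        # length of the generated video (from the text)
GENERATION_SECONDS = 6.5  # wall-clock time to generate it (from the text)


def realtime_factor(generation_s: float, clip_s: float) -> float:
    """Seconds of generation per second of video; 1.0 would be real time."""
    return generation_s / clip_s


def attempts_in_budget(budget_s: float, generation_s: float) -> int:
    """How many back-to-back generations fit in a given time budget."""
    return int(budget_s // generation_s)


if __name__ == "__main__":
    factor = realtime_factor(GENERATION_SECONDS, CLIP_SECONDS)
    tries = attempts_in_budget(10 * 60, GENERATION_SECONDS)
    print(f"{factor:.2f}x real time")
    print(f"{tries} sequential tries in 10 minutes")
```

At these numbers generation runs at about 1.3x the clip length, so the "roulette" of video generation gets dozens of spins in the time a slower model would need for a handful.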
Bonus Round: Decart's Real-Time Minecraft Mod for Oasis 2 (X)

The same team behind Lucy also dropped Oasis 2.0, a Minecraft mod that generates game environments in real-time using diffusion models. I got to play around with it live, and watching Minecraft transform into different themed worlds as I moved through them was surreal.

Want a steampunk village? Just type it in. Futuristic city? Done. The frame rate stayed impressively smooth, and the visual coherence as I moved through the world was remarkable. It's like having an AI art director that can completely reskin your game environment on demand. And while the current quality remains low res, if you consider where Stable Diffusion 1.4 was 3 years ago, and where Seedream 4 is now, and do the same extrapolation to Oasis, in 2-3 years we'll be reskinning whole games on the fly and every pixel will be generated (like Jensen loves to say!)

OpenAI adds full MCP to ChatGPT (under a flag)

This is huge, folks. I've been waiting for this for a while, and finally, OpenAI quietly added full MCP (Model Context Protocol) support to ChatGPT via a hidden "developer mode."

How to Enable MCP in ChatGPT

Here's the quick setup I showed during the stream:
* Go to ChatGPT settings → Connectors
* Scroll down to find "Developer Mode" and enable it
* Add MCP servers (I used Rube.ai from Composio)
* Use GPT-4o in developer mode to access your connectors

During the show, I literally had ChatGPT pull Nisten's last five tweets using the Twitter MCP connector. It worked flawlessly (though Nisten was a bit concerned about what tweets it might surface 😂).

The implications are massive - you can now connect ChatGPT to GitHub, databases, your local files, or chain multiple tools together for complex workflows. As Wolfram pointed out though, watch your context usage - each MCP connector eats into that 200K limit.

Big Moves: Investments and Infrastructure

Speaking of OpenAI, let's talk money, because the stakes are getting astronomical. 
OpenAI reportedly has a $300 billion (!) deal with Oracle for compute infrastructure over five years, starting in 2027. That's not a typo - $60 billion per year for compute. Larry Ellison just became the world's richest person, and Oracle's stock shot up 40% on the news in just a few days! This has got to be one of the biggest compute deals the world has ever heard of!

The scale is hard to comprehend. We're talking about potentially millions of H100 GPUs worth of compute power. When you consider that most AI companies are still figuring out how to profitably deploy thousands of GPUs, this deal represents infrastructure investment at a completely different magnitude.

Meanwhile, Mistral just became Europe's newest decacorn, valued at $13.8 billion after receiving $1.3 billion from ASML. For context, ASML makes the lithography machines that TSMC uses to manufacture chips for Nvidia. They're literally at the beginning of the AI chip supply chain, and now they're investing heavily in Europe's answer to OpenAI.

Wolfram made a great point - we're seeing the emergence of three major AI poles: American companies (OpenAI, Anthropic), Chinese labs (Qwen, Kimi, Ernie), and now European players like Mistral. Each is developing distinct approaches and capabilities, and the competition is driving incredible innovation.

Anthropic's Mea Culpa and Code Interpreter

After weeks of users complaining about Claude's degraded performance, Anthropic finally admitted there were bugs affecting both Claude Opus and Sonnet. Nisten, who tracks these things closely, speculated that the issues might be related to running different quantization schemes on different hardware during peak usage times. We already reported last week that they admitted that "something was affecting intelligence" but this week they said they pinpointed (and fixed) 2 bugs related to inference!

They also launched a code interpreter feature that lets Claude create and edit files directly. 
It's essentially their answer to ChatGPT's code interpreter - giving Claude its own computer to work with. The demo showed it creating Excel files, PDFs, and documents with complex calculations. Having watched Claude struggle with file operations for months, this is a welcome addition.

🐝 This Week's Buzz: GLM 4.5 on W&B and We're on OpenRouter!

Over at Weights & Biases, we've got some exciting updates for you. First, we've added Zhipu AI's GLM 4.5 to W&B Inference! This 300B+ parameter model is an absolute beast for coding and tool use, ranking among the top open models on benchmarks like SWE-bench. We've heard from so many of you, including Nisten, about how great this model is, so we're thrilled to host it. You can try it out now and get $2 in free credits to start.

And for all you developers out there, you can use a proxy like LiteLLM to run GLM 4.5 from our inference endpoint inside Anthropic's Claude Code if you're looking for a powerful and cheap alternative!

Second, we're now on OpenRouter! You can find several of our hosted models, like GPT-OSS and DeepSeek Coder, on the platform. If you're already using OpenRouter to manage your model calls, you can now easily route traffic to our high-performance inference stack.

Open Source Continues to Shine

Open Source LLM models took a bit of a break this week, but there were still interesting models! Baidu released ERNIE-4.5, a very efficient 21B parameter "thinking" MoE that only uses 3B active parameters per token. From the UAE, MBZUAI released K2-Think, a finetune of Qwen 2.5 that's showing some seriously impressive math scores. And Moonshot AI updated Kimi K2, doubling its context window to 256K and further improving its already excellent tool use and writing capabilities.

Tencent released an update to HunyuanImage 2.1, which is a bit slow, but also generates 2K images and is decent at text. 
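As a quick illustration of what "21B parameters with only 3B active" means for a mixture-of-experts model like ERNIE-4.5, here is a minimal sketch that computes the share of parameters used per token. The parameter counts are the ones quoted above; the helper name is hypothetical, and the result is only a rough proxy for per-token compute, since all 21B weights still have to be stored and served.

```python
# Rough sparsity arithmetic for a mixture-of-experts (MoE) model.
# Parameter counts (in billions) are the figures quoted in the text;
# active_fraction is a hypothetical helper, not part of any library.


def active_fraction(total_b: float, active_b: float) -> float:
    """Share of the model's parameters that are used for each token."""
    if total_b <= 0 or not 0 <= active_b <= total_b:
        raise ValueError("expected 0 <= active_b <= total_b and total_b > 0")
    return active_b / total_b


if __name__ == "__main__":
    # ERNIE-4.5 as described above: 21B total, 3B active per token.
    share = active_fraction(total_b=21, active_b=3)
    print(f"ERNIE-4.5: {share:.1%} of parameters active per token")
```

The same helper works for any total/active pair, which makes it easy to compare how sparse different MoE releases are.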
Qwen drops Qwen3-Next-80B-A3B (X, HF)

In breaking news after the show (we were expecting this on the show itself), the Alibaba folks dropped a much more streamlined version of the next Qwen, 80B parameters with only 3B active! They call this an "Ultra Sparse MOE" and it beats Qwen3-32B in perf, and rivals Qwen3-235B in reasoning & long-context. This is quite unprecedented, as getting models this sparse to work well takes a lot of effort and skill, but the Qwen folks delivered!

Tools

We wrapped with a quick shouto

Wohoo, hey y'all, Alex here, I'm back from the desert (pic at the end) and what a great feeling it is to be back in the studio to talk about everything that happened in AI! It's been a pretty full week (or two) in AI, with the coding agent space heating up, Grok entering the ring and taking over free tokens, Codex 10xing usage and Anthropic... well, we'll get to Anthropic. Today on the show we had Roger and Bhavesh from Nous Research cover the awesome Hermes 4 release and the new PokerBots benchmark, then we had a returning favorite, Kwindla Hultman Kramer, to talk about the GA of RealTime voice from OpenAI. Plus we got some massive funding news, some drama with model quality on Claude Code, and some very exciting news right here from CoreWeave acquiring OpenPipe! 👏 So grab your beverage of choice, settle in (or skip to the part that interests you) and let's take a look at the last week (or two) in AI!

ThursdAI - Recaps of the most high signal AI weekly spaces is a reader-supported publication. To receive new posts and support my work, consider becoming a free or paid subscriber.

Open Source: Soulful Models and Poker-Playing Agents

This week did not disappoint when it comes to Open Source! Our friends at Nous Research released the 14B version of Hermes 4, after releasing the 405B and 70B versions last week. This company continues to excel in finetuning models for powerful, and sometimes just plain weird (in a good way) use cases.

Nous Hermes 4 (14B, 70B, 405B) and the Quest for a "Model Soul" (X, HF)

Roger and Bhavesh from Nous came to announce the release of the smaller (14B) version of Hermes 4, and cover last week's releases of the larger 70B and 405B brothers. The Hermes series of finetunes was always on our radar, as unique data mixes turned them into uncensored, valuable and creative models and unlocked a bunch of new use-cases. But the wildest part? 
They told us they intentionally stopped training the model not when reasoning benchmarks plateaued, but when they felt it started to "lose its model soul." They monitor the entropy and chaos in the model's chain-of-thought, and when it became too sterile and predictable, they hit the brakes to preserve that creative spark. This focus on qualities beyond raw benchmark scores is why Hermes 4 is showing some really interesting generalization, performing exceptionally well on benchmarks like EQBench3, which tests emotional and interpersonal understanding. It's a model that's primed for RL not just in math and code, but in creative writing, role-play, and deeper, more "awaken" conversations. It’s a soulful model that's just fun to talk to.

Nous Husky Hold'em Bench: Can Your LLM Win at Poker? (Bench)

As if a soulful model wasn't enough, the Nous team also dropped one of the most creative new evals I've seen in a while: Husky Hold'em Bench. We had Bhavesh, one of its creators, join the show to explain. This isn't a benchmark where the LLM plays poker directly. Instead, the LLM has to write a Python poker bot from scratch, under time and memory constraints, which then competes against bots written by other LLMs in a high-stakes tournament. Very interesting approach, and we love creative benchmarking here at ThursdAI!

This is a brilliant way to test for true strategic reasoning and planning, not just pattern matching. It's an "evergreen" benchmark that gets harder as the models get better. Early results are fascinating: Claude 4 Sonnet and Opus are currently leading the pack, but Hermes 4 is the top open-source model.

More Open Source Goodness

The hits just kept on coming this week. Tencent open-sourced Hunyuan-MT-7B, a translation model that swept the WMT2025 competition and rivals GPT-4.1 on some benchmarks. 
Having a small, powerful, specialized model like this is huge for anyone doing large-scale data translation for training or needing fast on-device capabilities.From Switzerland, we got Apertus-8B and 70B, a set of fully open (Apache 2.0 license, open data, open training recipes!) multilingual models trained on a massive 15 trillion tokens across 1,800 languages. It’s fantastic to see this level of transparency and contribution from European institutions.And Alibaba’s Tongyi Lab released WebWatcher, a powerful multimodal research agent that can plan steps, use a suite of tools (web search, OCR, code interpreter), and is setting new state-of-the-art results on tough visual-language benchmarks, often beating models like GPT-4o and Gemini.All links are in the TL;DR at the endBREAKING NEWS: Google Drops Embedding Gemma 308M (X, HF, Try It)Just as we were live on the show, news broke from our friends at Google. They've released Embedding Gemma, a new family of open-source embedding models. This is a big deal because they are tiny—the smallest is only 300M parameters and takes just 200MB to run—but they are topping the MTEB leaderboard for models under 500M parameters. For anyone building RAG pipelines, especially for on-device or mobile-first applications, having a small, fast, SOTA embedding model like this is a game-changer.It's so optimized for on device running that it can run fully in your browser on WebGPU, with this great example from our friend Xenova highlighted on the release blog! Big Companies, Big Money, and Big ProblemsIt was a rollercoaster week for the big labs, with massive fundraising, major product releases, and a bit of a reality check on the reliability of their services.OpenAI's GPT Real-Time Goes GA and gets an upgraded brain (X, Docs)We had the perfect guest to break down OpenAI's latest voice offering: Kwindla Kramer, founder of Daily and maintainer of the open-source PipeCat framework. 
OpenAI has officially taken its Realtime API to General Availability (GA), centered around the new gpt-realtime model.

Kwindla explained that this is a true speech-to-speech model, not a pipeline of separate speech-to-text, LLM, and text-to-speech models. This reduces latency and preserves more nuance and prosody. The GA release comes with huge upgrades, including support for remote MCP servers, the ability to process image inputs during a conversation, and, critically for enterprise, native SIP integration for connecting directly to phone systems.

However, Kwindla also gave us a dose of reality. While this is the future, for many high-stakes enterprise use cases, the multi-model pipeline approach is still more reliable. Observability is a major issue with the single-model black box; it's hard to know exactly what the model "heard." And in terms of raw instruction-following and accuracy, a specialized pipeline can still outperform the speech-to-speech model. It’s a classic jagged frontier: for the lowest latency and most natural vibe, GPT Real-Time is amazing. For mission-critical reliability, the old way might still be the right way for now.

ChatGPT has branching!

Just as I was about to finish writing this up, ChatGPT announced a new feature, and this one I had to tell you about! Finally you can branch chats in their interface, which is a highly requested feature! Branching seems to be live on the chat interface, and honestly, tiny but important UI changes like these are how OpenAI remains the best chat experience!

The Money Printer Goes Brrrr: Anthropic's $13B Raise

Let's talk about the money. Anthropic announced it has raised an absolutely staggering $13 billion in a Series F round, valuing the company at $183 billion. Their revenue growth is just off the charts, jumping from a run rate of around $1 billion at the start of the year to over $5 billion by August. This growth is heavily driven by enterprise adoption and the massive success of Claude Code. 
It's clear that the AI gold rush is far from over, and investors are betting big on the major players. In related news, OpenAI is also reportedly raising $10 billion at a valuation of around $500 billion, primarily to allow employees to sell shares, a huge moment for the folks who have been building there for years.

Oops... Did We Nerf Your AI? Anthropic's Apology

While Anthropic was celebrating its fundraise, it was also dealing with a self-inflicted wound. After days of users on X and other forums complaining that Claude Opus felt "dumber," the company finally issued a statement admitting that yes, for about three days, the model's quality was degraded due to a change in their infrastructure stack.

Honestly, this is not okay. We're at a point where hundreds of thousands of developers and businesses rely on these models as critical tools. To have the quality of that tool change under your feet without any warning is a huge problem. It messes with people's ability to do their jobs and trust the platform. While it was likely an honest mistake in pursuit of efficiency, it highlights a fundamental issue with closed, proprietary models. You're at the mercy of the provider. It's a powerful argument for the stability and control that comes with open-source and self-hosted models. These companies need to realize that they are no longer just providing experimental toys; they're providing essential infrastructure, and that comes with a responsibility for stability and transparency.

This Week's Buzz: CoreWeave Acquires OpenPipe! 🎉

Super exciting news from the Weights & Biases and CoreWeave family - we've acquired OpenPipe! Kyle and David Corbitt and their team are joining us to help build out the complete AI infrastructure stack from metal to model.

OpenPipe has been doing incredible work on SFT and RL workflows with their open source ART framework. 
As Yam showed during the show, they demonstrated you can train a model to SOTA performance on deep research tasks for just $300 in a few hours - and it's all automated! The system can generate synthetic data, apply RLHF, and evaluate against any benchmark you specify.

This fits perfectly into our vision at CoreWeave - bare metal infrastructure, training and observability with Weights & Biases, fine-tuning and RL with OpenPipe's tools, evaluation with Weave, and inference to serve it all. We're building t