The Mathematics of Training LLMs — with Quentin Anthony of Eleuther AI

Description

Special thanks to @nearcyan for helping us arrange this with the Eleuther team.

This post was on the HN frontpage for 15 hours.

As startups and even VCs hoard GPUs to attract talent, the one thing more valuable than GPUs is knowing how to use them (aka, make GPUs go brrrr).

There is an incredible amount of tacit knowledge in the NLP community around training, and until Eleuther.ai came along you pretty much had to work at Google or Meta to gain that knowledge. This makes it hard for non-insiders to do even simple cost estimates for a project: it is well known how to trade $ for GPU hours, but trading “$ for size of model” or “$ for quality of model” is less well understood, more valuable, and full of opaque “it depends”. This is why rules of thumb for training are incredibly useful: derived from hard-earned experience, they cut through the noise and give you the simple 20% of knowledge that determines 80% of the outcome.

Today’s guest, Quentin Anthony from EleutherAI, is one of the top researchers in high-performance deep learning. He’s one of the co-authors of Transformers Math 101, which was one of the clearest articulations of training rules of thumb. We can think of no better way to dive into training math than to have Quentin run us through a masterclass on model weights, optimizer states, gradients, activations, and how they all impact memory requirements.

The core equation you will need to know is the following:

`C = τ × T = 6 × P × D`

Where C is the compute required to train a model (in FLOPs), P is the number of parameters, and D is the size of the training dataset in tokens. This is also equal to τ, the throughput of your machine measured in FLOPs per second (actual FLOPs per second per GPU × number of GPUs), multiplied by T, the amount of time spent training the model.
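To make the relationship `τ × T = 6 × P × D` concrete, here is a minimal Python sketch that turns a parameter count and token count into total FLOPs and an estimated wall-clock time. The function names are hypothetical, and the example inputs are assumptions for illustration: the commonly cited GPT-3 size (175B parameters, 300B tokens), the 115 teraFLOPs per A100 baseline mentioned in the timestamps, and an arbitrary cluster of 1,024 GPUs. It also assumes perfect scaling across GPUs, which real clusters will not achieve.

```python
def training_flops(params: float, tokens: float) -> float:
    """Total training compute C = 6 * P * D, in FLOPs."""
    return 6.0 * params * tokens


def training_days(params: float, tokens: float,
                  flops_per_gpu_per_sec: float, num_gpus: int) -> float:
    """Wall-clock time T = C / tau, converted from seconds to days.

    tau is the aggregate throughput: achieved FLOPs per second per GPU
    multiplied by the number of GPUs (assumes perfect scaling).
    """
    tau = flops_per_gpu_per_sec * num_gpus
    seconds = training_flops(params, tokens) / tau
    return seconds / 86_400  # seconds per day


if __name__ == "__main__":
    # Illustrative inputs only (assumptions, not figures from the episode).
    P = 175e9        # parameters
    D = 300e9        # training tokens
    per_gpu = 115e12 # achieved FLOPs per second per A100
    gpus = 1024      # hypothetical cluster size

    print(f"C = {training_flops(P, D):.2e} FLOPs")
    print(f"T = {training_days(P, D, per_gpu, gpus):.1f} days")
```

With these assumed inputs the estimate comes out to roughly 3.15e23 FLOPs and about a month of training. Swapping in your own measured per-GPU throughput matters more than anything else here, since achieved FLOPs are usually well below the theoretical peak.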

Taking Chinchilla scaling at face value (roughly 20 training tokens per parameter, so D = 20P and 6 × P × 20P = 120P^2), you can simplify this equation to `C = 120(P^2)`. These laws are only true when 1000 GPUs for 1 hour costs the same as 1 GPU for 1000 hours, so it’s not always safe to make these assumptions, especially once communication overhead comes into play.
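The `C = 120(P^2)` form can also be run in reverse: given a compute budget, solve for the model size and token count. The sketch below assumes the Chinchilla-style ratio of 20 tokens per parameter; `TOKENS_PER_PARAM` and `compute_optimal_split` are hypothetical names used only for illustration.

```python
import math

# Assumed Chinchilla-style ratio: D = 20 * P (tokens per parameter).
TOKENS_PER_PARAM = 20


def compute_optimal_split(compute_flops: float) -> tuple[float, float]:
    """Return (params, tokens) for a compute budget C.

    Starts from C = 6 * P * D and substitutes D = 20 * P, which gives
    C = 120 * P**2, so P = sqrt(C / 120).
    """
    params = math.sqrt(compute_flops / (6 * TOKENS_PER_PARAM))
    tokens = TOKENS_PER_PARAM * params
    return params, tokens


if __name__ == "__main__":
    budget = 1e23  # hypothetical compute budget in FLOPs
    p, d = compute_optimal_split(budget)
    print(f"params = {p:.2e}, tokens = {d:.2e}")
```

This is a back-of-the-envelope tool, not a planning guarantee: it inherits the assumption that compute is fungible across GPUs and time, which breaks down once communication overhead becomes significant.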

There’s a lot more math to dive into here between training and inference, which you can listen to in the episode or read in the articles.

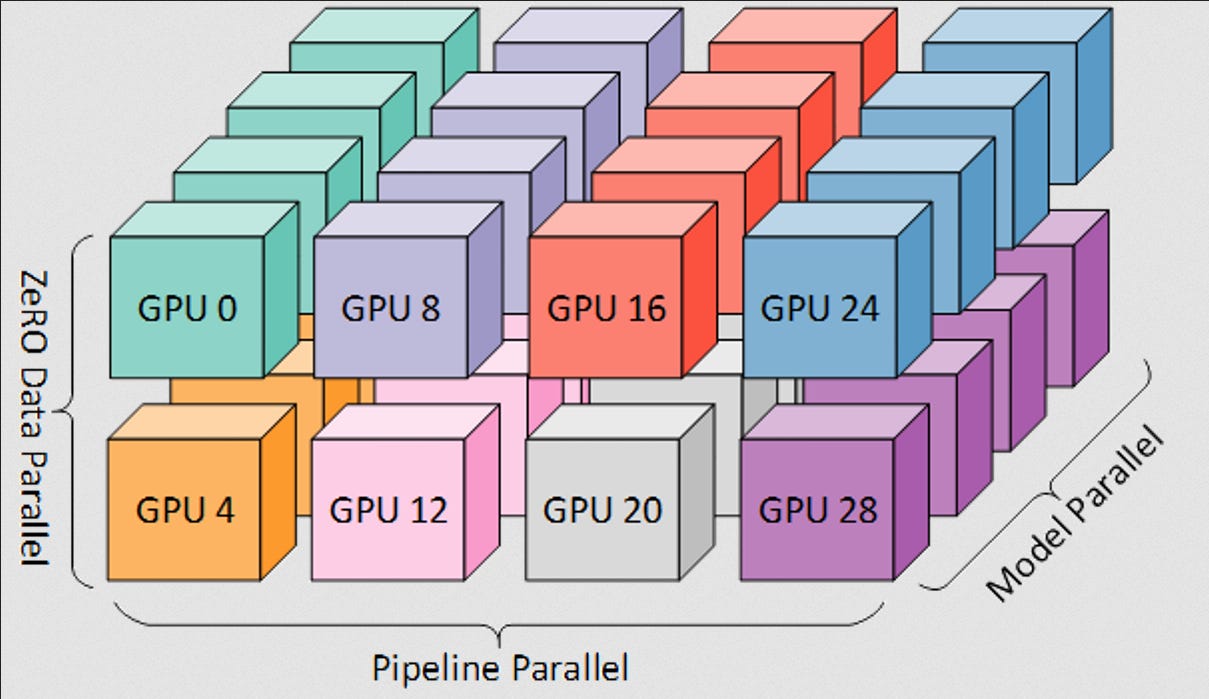

The other interesting concept we covered is distributed training and strategies such as ZeRO and 3D parallelism. As these models have scaled, it’s become impossible to fit everything on a single GPU for training and inference. We leave these advanced concepts to the end, but there’s a lot of innovation around sharding params, gradients, and optimizer states that you should know about in modern LLM training.

If you have questions, you can join the Eleuther AI Discord or follow Quentin on Twitter.

Show Notes

* Transformers Math 101 Article

* BLOOM

* Mosaic

* Oak Ridge & Frontier Supercomputer

* RWKV

Timestamps

* [00:00:00 ] Quentin's background and work at Eleuther.ai

* [00:03:14 ] Motivation behind writing the Transformers Math 101 article

* [00:05:58 ] Key equation for calculating compute requirements (tau x T = 6 x P x D)

* [00:10:00 ] Difference between theoretical and actual FLOPs

* [00:12:42 ] Applying the equation to estimate compute for GPT-3 training

* [00:14:08 ] Expecting 115+ teraflops/sec per A100 GPU as a baseline

* [00:15:10 ] Tradeoffs between Nvidia and AMD GPUs for training

* [00:18:50 ] Model precision (FP32, FP16, BF16 etc.) and impact on memory

* [00:22:00 ] Benefits of model quantization even with unlimited memory

* [00:23:44 ] KV cache memory overhead during inference

* [00:26:08 ] How optimizer memory usage is calculated

* [00:32:03 ] Components of total training memory (model, optimizer, gradients, activations)

* [00:33:47 ] Activation recomputation to reduce memory overhead

* [00:38:25 ] Sharded optimizers like ZeRO to distribute across GPUs

* [00:40:23 ] Communication operations like scatter and gather in ZeRO

* [00:41:33 ] Advanced 3D parallelism techniques (data, tensor, pipeline)

* [00:43:55 ] Combining 3D parallelism and sharded optimizers

* [00:45:43 ] Challenges with heterogeneous clusters for distribution

* [00:47:58 ] Lightning Round

Transcription

Alessio: Hey everyone, welcome to the Latent Space podcast. This is Alessio, partner and CTO in Residence at Decibel Partners, and I'm joined by my co-host Swyx, writer and editor of Latent Space. [00:00:20 ]

Swyx: Hey, today we have a very special guest, Quentin Anthony from Eleuther.ai. The context for this episode is that we've been looking to cover Transformers math for a long time. And then one day in April, there's this blog post that comes out that literally is called Transformers Math 101 from Eleuther. And this is one of the most authoritative posts that I've ever seen. And I think basically on this podcast, we're trying to give people an intuition around what are the rules of thumb that are important in thinking about AI and reasoning by AI. And I don't think there's anyone more credible than the people at Eleuther or the people training actual large language models, especially on limited resources. So welcome, Quentin. [00:00:59 ]

Quentin: Thank you. A little bit about myself is that I'm a PhD student at Ohio State University, starting my fifth year now, almost done. I started with Eleuther during the GPT-NeoX-20B model. So they were getting started training that, they were having some problems scaling it. As we'll talk about, I'm sure today a lot, is that communication costs and synchronization and how do you scale up a model to hundreds of GPUs and make sure that things progress quickly is really difficult. That was really similar to my PhD work. So I jumped in and helped them on the 20B, getting that running smoothly. And then ever since then, just as new systems challenges arise, and as they move to high performance computing systems and distributed systems, I just sort of kept finding myself falling into projects and helping out there. So I've been at Eleuther for a little bit now, head engineer there now, and then finishing up my PhD and then, well, who knows where I'll go next. [00:01:48 ]

Alessio: Awesome. What was the inspiration behind writing the article? Was it taking some of those learnings? Obviously Eleuther is one of the most open research places out there. Is it just part of the DNA there or any fun stories there? [00:02:00 ]

Quentin: For the motivation for writing, you very frequently see in like the DL training space, like these Twitter posts by like, for example, like Stas Bekman at Hugging Face, you'll see like a Twitter post that's like, oh, we just found this magic number and everything is like 20% faster. He’s super excited, but doesn't really understand what's going on. And the same thing for us, we very frequently find that a lot of people understand the theory or maybe the fundamentals of why like AI training or inference works, but no one knows like the nitty gritty details of like, how do you get inference to actually run correctly on your machine split across two GPUs or something like that. So we sort of had all of these notes that we had accumulated and we're sort of sharing among engineers within Eleuther and we thought, well, this would really help a lot of other people. It's not really maybe appropriate for lik