📆 ThursdAI - Qwen‑mas Strikes Again: VL/Omni Blitz + Grok‑4 Fast + Nvidia’s $100B Bet

Description

Hola AI aficionados, it’s yet another ThursdAI, and yet another week FULL of AI news, spanning Open Source LLMs, Multimodal video and audio creation and more!

Shiptember, as they call it, does seem to deliver, and it was hard even for me to keep up with all the news, not to mention we had like 3-4 breaking news items during the show today!

This week was yet another Qwen-mas, with Alibaba absolutely dominating across open source, but we also got NVIDIA promising to invest up to $100 billion in OpenAI.

So let’s dive right in! As a reminder, all the show notes are posted at the end of the article for your convenience.

ThursdAI - Because weeks are getting denser, but we’re still here, weekly, sending you the top AI content! Don’t miss out!

Table of Contents

* Qwen3-VL Announcement (Qwen3-VL-235B-A22B-Thinking):

* Qwen3-Omni-30B-A3B: end-to-end SOTA omni-modal AI unifying text, image, audio, and video

* DeepSeek V3.1 Terminus: a surgical bugfix that matters for agents

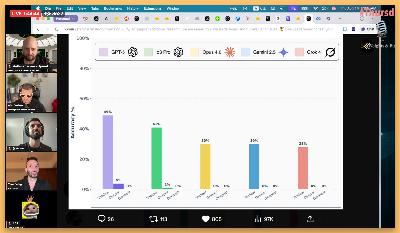

* Evals & Benchmarks: agents, deception, and code at scale

* OpenAI: ChatGPT Pulse: Proactive AI news cards for your day

* XAI Grok 4 fast - 2M context, 40% fewer thinking tokens, shockingly cheap

* Alibaba Qwen-Max and plans for scaling

* This Week’s Buzz: W&B Fully Connected is coming to London and Tokyo & Another hackathon in SF

* Vision & Video: Wan 2.2 Animate, Kling 2.5, and Wan 4.5 preview

* Moondream-3 Preview - Interview with co-founders Via & Jay

* Wan open sourced Wan 2.2 Animate (aka “Wan Animate”): motion transfer and lip sync

* Kling 2.5 Turbo: cinematic motion, cheaper and with audio

* Wan 4.5 preview: native multimodality, 1080p 10s, and lip-synced speech

* ThursdAI - Sep 25, 2025 - TL;DR & Show notes

Open Source AI

This was a Qwen-and-friends week. I joked on stream that I should just count how many times “Alibaba” appears in our show notes. It’s a lot.

Qwen3-VL Announcement (Qwen3-VL-235B-A22B-Thinking): (X, HF, Blog, Demo)

Qwen 3 launched earlier as a text-only family; the vision-enabled variant just arrived, and it’s not timid. The “thinking” version is effectively a reasoner with eyes, built on a 235B-parameter backbone with around 22B active (their mixture-of-experts trick). What jumped out is the breadth of evaluation coverage: MMMU, video understanding (Video-MME, LVBench), 2D/3D grounding, doc VQA, chart/table reasoning. Pages of it. They’re showing wins against models like Gemini 2.5 Pro and GPT‑5 on some of those benchmarks, and doc VQA is flirting with “nearly solved” territory in their numbers.

Two caveats. First, whenever scores get that high on imperfect benchmarks, healthy skepticism is warranted; known label issues can inflate numbers. Second, the model is big. Incredible for server-side grounding and long-form reasoning with vision (they’re talking about scaling context to 1M tokens for two-hour video and long PDFs), but not something you throw on a phone.

Still, if your workload smells like “reasoning + grounding + long context,” Qwen 3 VL looks like one of the strongest open-weight choices right now.

Qwen3-Omni-30B-A3B: end-to-end SOTA omni-modal AI unifying text, image, audio, and video (HF, GitHub, Qwen Chat, Demo, API)

Omni is their end-to-end multimodal chat model that unites text, image, audio, and video. Crucially, it streams audio responses in real time while thinking separately in the background. Architecturally, it’s a 30B MoE with around 3B active parameters at inference, which is the secret to why it feels snappy on consumer GPUs.

In practice, that means you can talk to Omni, have it see what you see, and get sub-250 ms replies in nine speaker languages while it quietly plans. It claims to understand 119 languages. When I pushed it in multilingual conversational settings it still code-switched unexpectedly (Chinese suddenly appeared mid-flow), and it occasionally suffered the classic “stuck in thought” behavior we’ve been seeing in agentic voice modes across labs. But the responsiveness is real, and the footprint is exciting for local speech streaming scenarios. I wouldn’t replace a top-tier text reasoner with this for hard problems, yet being able to keep speech native is a real UX upgrade.
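To make the “A22B” and “A3B” suffixes concrete, here is a minimal sketch that computes what share of each model’s weights is active per token. The parameter counts are the rounded figures quoted above, and the helper name `active_fraction` is just an illustration, not anything from Qwen’s tooling.

```python
def active_fraction(total_params_b: float, active_params_b: float) -> float:
    """Share of a mixture-of-experts model's parameters used per token."""
    if total_params_b <= 0 or not 0 < active_params_b <= total_params_b:
        raise ValueError("expected 0 < active <= total")
    return active_params_b / total_params_b


# Rounded figures (in billions) taken from the model names in this post.
MODELS = {
    "Qwen3-VL-235B-A22B": (235.0, 22.0),
    "Qwen3-Omni-30B-A3B": (30.0, 3.0),
}

for name, (total, active) in MODELS.items():
    share = active_fraction(total, active)
    print(f"{name}: about {share:.1%} of weights active per token")
```

Keep in mind that only the per-token compute scales with the active count; all of the experts still have to sit in memory, which is why the 235B model stays a server-side affair.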

Qwen Image Edit, Qwen TTS Flash, and Qwen‑Guard

Qwen’s image stack got a handy upgrade with multi-image reference editing for more consistent edits across shots, which is useful for brand assets and style-tight workflows. TTS Flash (API-only for now) is their fast speech synth line, and Qwen‑Guard is a new safety/moderation model from the same team. It’s notable because Qwen hasn’t really played in the moderation-model space before; historically Meta’s Llama Guard led that conversation.

DeepSeek V3.1 Terminus: a surgical bugfix that matters for agents (X, HF)

The DeepSeek whale resurfaced to push a small 0.1 update to V3.1 that reads like a “quality and stability” release, but those matter if you’re building on top. It fixes a code-switching bug (the “sudden Chinese” syndrome you’ll also see in some Qwen variants), improves tool use and browser execution, and, importantly, makes agentic flows less likely to overthink and stall. On the numbers, Humanity’s Last Exam jumped from 15 to 21.7, while LiveCodeBench dipped slightly. That’s the story here: they traded a few raw points on coding for more stable, less dithery behavior in end-to-end tasks. If you’ve invested in their tool harness, this may be a net win.

Liquid Nanos: small models that extract like they’re big (X, HF)

Liquid Foundation Models released “Liquid Nanos,” a set of open models from roughly 350M to 2.6B parameters, including “extract” variants that pull structure (JSON/XML/YAML) from messy documents. The pitch is cost-efficiency with surprisingly competitive performance on information extraction tasks versus models 10× their size. If you’re doing at-scale doc ingestion on CPUs or small GPUs, these look worth a try.
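If you do wire a small extraction model into an ingestion pipeline, it helps to validate whatever it returns before trusting it. The sketch below assumes the model hands back a JSON string; the field names, the `parse_extraction` helper, and the sample output are all hypothetical and not taken from Liquid’s docs.

```python
import json

# Hypothetical schema for an invoice-style document: field name -> allowed types.
REQUIRED_FIELDS = {
    "invoice_number": str,
    "total": (int, float),
    "currency": str,
}


def parse_extraction(raw: str) -> dict:
    """Parse model output as JSON and check required fields and their types."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"model output is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object at the top level")
    for field, expected in REQUIRED_FIELDS.items():
        if field not in data:
            raise ValueError(f"missing field: {field}")
        if not isinstance(data[field], expected):
            raise ValueError(f"wrong type for field: {field}")
    return data


# Stand-in for what an extraction model might return for one document.
sample_output = '{"invoice_number": "INV-001", "total": 129.5, "currency": "EUR"}'
record = parse_extraction(sample_output)
print(record["invoice_number"], record["total"], record["currency"])
```

Rejecting malformed output early means a bad extraction becomes a retry or a manual-review item instead of a silently corrupted row downstream.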

Tiny IBM OCR model that blew up the charts (HF)

We also saw a tiny IBM model (about 250M parameters) for image-to-text document parsing trending on Hugging Face. Run in 8-bit, it squeezes into roughly 250 MB, which means Raspberry Pi and “toaster” deployments suddenly get decent OCR/transcription against scanned docs. It’s the kind of tiny-but-useful release that tends to quietly power entire products.
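The “250M parameters in roughly 250 MB” figure is just bytes-per-weight arithmetic. Here is a rough sketch of that calculation; it assumes a round 250M parameter count and counts weights only, so activations and runtime overhead come on top.

```python
def weight_footprint_mb(num_params: float, bits_per_weight: int) -> float:
    """Approximate size of the weights alone, in megabytes (1 MB = 1e6 bytes)."""
    return num_params * bits_per_weight / 8 / 1e6


NUM_PARAMS = 250e6  # rounded parameter count quoted in this post

for bits in (16, 8, 4):
    size_mb = weight_footprint_mb(NUM_PARAMS, bits)
    print(f"{bits}-bit weights: ~{size_mb:.0f} MB")
```

At 8 bits each weight takes one byte, so the megabyte count lands right on the parameter count in millions; halving the precision again would halve the footprint.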

Meta’s 32B Code World Model (CWM) released for agentic code reasoning (X, HF)

Nisten got really excited about this one, and once he explained it, I understood why. Meta released a 32B code world model that doesn’t just generate code - it understands code the way a compiler does. It’s thinking about state, types, and the actual