Interviewing OLMo 2 leads: Open secrets of training language models

Description

We're here to share the story of building our Open Language Models (OLMos) and what we improved to build the OLMo 2 7B and 13B models, which are competitive with Llama 3.1 8B. This is all about building an effective, small language modeling team that can share everything it learns with the scientific community. Dirk, Luca, and Kyle are some of the people I learn the most from, and they have more knowledge (and entertainment) to share than we have time for.

Some questions were pulled from Twitter, but please comment or get in touch if you want us to cover anything in future episodes!

Main topics:

* Pretraining efficiency and our quest for stability after a not-so-secret failed 70B run early in 2024,

* What the role of OLMo is in the broader AI landscape and how that is, or is not, changing,

* Many little decisions that go into building language models and their teams (with a focus on NOT post-training, given I already talk about that a ton).

Play with the models we build here: playground.allenai.org/

For more history of open language models (OLMos) on Interconnects, see my first post on OLMo, my coverage of OLMoE and OLMo 2, and why I build open language models. If you have more questions or requests, please let us know (especially the researchers out there), and this can be one of N rather than a one-off celebration.

Listen on Apple Podcasts, Spotify, YouTube, and wherever you get your podcasts. For other Interconnects interviews, go here.

Contacts

Dirk Groeneveld — https://x.com/mechanicaldirk // https://bsky.app/profile/mechanicaldirk.bsky.social

Kyle Lo — https://x.com/kylelostat // https://bsky.app/profile/kylelo.bsky.social

Luca Soldaini — https://twitter.com/soldni // https://bsky.app/profile/soldaini.net

General OLMo contact — olmo@allenai.org

Papers / models / codebases discussed

* OPT models and talk from Susan Zhang

* BLOOM

* C4: Boosting Large-scale Parallel Training Efficiency with C4: A Communication-Driven Approach

* Maximal Update Parametrization (muP) is from Tensor Programs V: Tuning Large Neural Networks via Zero-Shot Hyperparameter Transfer

* Spike No More: Stabilizing the Pre-training of Large Language Models

* LLM360: Towards Fully Transparent Open-Source LLMs — Amber model

* A Pretrainer's Guide to Training Data: Measuring the Effects of Data Age, Domain Coverage, Quality, & Toxicity (Kyle said Hitchhikers)

* Fishing for Magikarp: Automatically Detecting Under-trained Tokens in Large Language Models

Chapters

Here is a list of major topics covered in the podcast, with timestamps for when the discussion starts:

* [00:00:00] Introduction

* [00:02:45] Early history of the OLMo project

* [00:15:27] The journey to stability

* [00:25:00] The evolving role of OLMo and pretraining research

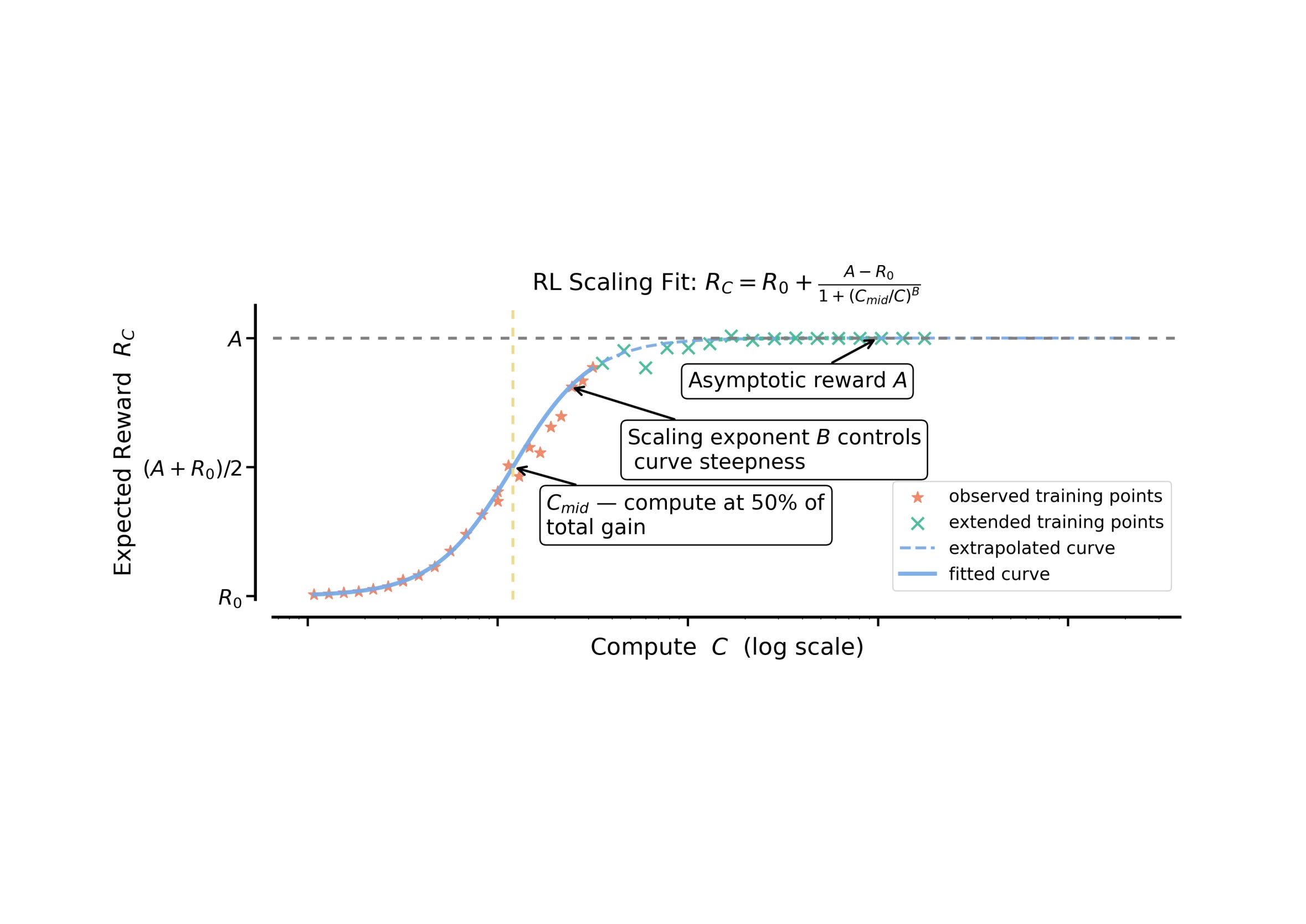

* [00:29:00] Pretraining Q&A (µP, scaling laws, MoE, etc.)

* [00:40:40] How to think about pretraining data work

* [00:54:30] Role of pre-training vs. mid-training vs. post-training

* [01:02:19] Release strategy and wrapping up

Transcript

This is generated by AI and lightly edited for clarity. In particular, the per-speaker attribution was poor this time around.

Nathan Lambert [00:00:07]: Hey, welcome back to Interconnects. In this interview, we're bringing one that I've hinted at for a while, which is interviewing some of the other leads on the OLMo team at AI2. Essentially, this covers the story of OLMo from its early days, when we got our compute, through our path to stability and some failed runs along the way, the role of OLMo in the broader AI ecosystem, and really just a very long tale of the technical details, decision making, and considerations that go into actually training language models that you're trying to keep at the frontier of performance relative to peers like Llama. This is a fun one. There's less post-training than normal because this is me interviewing some of the other co-leads at the Allen Institute for AI. There are three people in addition to me: Dirk Groeneveld, who is the lead of training and handles most of the engineering, and Kyle Lo and Luca Soldaini, who are the data leads. So we have a pre-training engineering lead and two data leads, plus me, who has done a lot of the post-training. This is just a part of the team, and I hope you enjoy this one. We can do more of these. Bear with the fact that I'm still expanding my podcasting equipment, but I think the audio is definitely good enough. Enjoy this episode with me, Kyle, Dirk, and Luca.

Hey, everyone. Welcome to the AI2 office. We're finally talking more about some of our OLMo things. There's been too much work to do to actually get all the information we want to share out into the world. So I'm here with Dirk, Kyle, and Luca. Let's each introduce ourselves so people can identify your voices, since not everyone will be watching the video.

Dirk Groeneveld [00:02:01]: Hi, I'm Dirk. I am the lead of the pre-training part of OLMo.

Kyle Lo: Hi, I'm Kyle. I work on data.

Luca Soldaini [00:02:08]: Hello, I'm Luca. I also work on data with Kyle.

Nathan Lambert [00:02:13]: Okay, so we're going to go through some of the story of OLMo to start, and then get into as many nerdy details as we can until we get tired of OLMo 2. This will probably be mostly about pre-training. You can ask me post-training questions as well, but I'm not going to sit here and ask myself questions that I'm not going to answer, because that would be absolutely ridiculous. You can ask me one question. Okay. One question. It's like, why shouldn't you do post-training with all the compute?

Nathan Lambert [00:02:45]: But I wasn't here when OLMo actually started. So I think it'd be good to tell people, broadly, what AI2 was like at the time, what language modeling was like at the time, and what may or may not have been risky.

Kyle Lo [00:03:01]: Yeah, you should probably take this one.

Dirk Groeneveld [00:03:03]: Yeah, I think it all started in the fall of 2022.

Dirk Groeneveld [00:03:10]: We were talking to AMD at the time about some sort of collaboration. We were scoping out some stuff. At the time, we wanted to take the BLOOM model and put 300 billion extra tokens into it. We wrote up a proposal, sent it to AMD, and it disappeared into a black hole. We never heard from them again. Then ChatGPT came out a couple of months after that, and suddenly everybody was very excited. One or two months after that, AMD came back to us and said, now let's do it. That kicked off a very busy period for us. At least the three of us were involved at the time, plus some more people, trying to scope out exactly what the project would be. Putting 300 billion tokens into BLOOM wasn't that cool anymore. The field had moved on. So we needed to find something else that would work both for us and for AMD.

Dirk Groeneveld [00:04:07]: And that's exactly what we d