Transparency and (shifting) priority stacks

Description

https://www.interconnects.ai/p/transparency-and-shifting-priority

The fact that we get new AI model launches from multiple labs detailing their performance on complex and shared benchmarks is an anomaly in the history of technology products. Getting such clear ways to compare similar software products is not normal. It traces back to AI’s roots as a research field and its growing pains as it turns into something else. Ever since ChatGPT’s release, AI has been transitioning from a research-driven field to a product-driven field.

We had another example of the direction this is going just last week. OpenAI launched their latest model on a Friday with minimal official documentation and a bunch of confirmations on social media. Here’s what Sam Altman said:

Officially, there are “release notes,” but these aren’t very helpful.

> We’re making additional improvements to GPT-4o, optimizing when it saves memories and enhancing problem-solving capabilities for STEM. We’ve also made subtle changes to the way it responds, making it more proactive and better at guiding conversations toward productive outcomes. We think these updates help GPT-4o feel more intuitive and effective across a variety of tasks–we hope you agree!

Another way of reading this is that the general capabilities of the model, i.e. traditional academic benchmarks, didn’t shift much, but internal evaluations such as user retention improved notably.

Of course, technology companies do this all the time. Google is famous for A/B testing to find the perfect button, and we can be sure Meta is constantly improving their algorithms to maximize user retention and advertisement targeting. This sort of lack of transparency from OpenAI is only surprising because the field of AI has been different.

AI has operated differently, not only because of its unusually fast transition from research to product, but also because many key leaders believed AI was different: the crucial technology that we needed to get right. This is why OpenAI was founded as a non-profit, and why existential risk has been a central discussion. If we believe this technology is essential to get right, its releases need to be handled differently.

OpenAI releasing a model with no official notes is the clearest signal we have yet that AI is a normal technology. OpenAI is a product company, and its core users don’t need clear documentation on what’s changing with the model. Yes, they did have better documentation for their recent GPT-4.1 API models, but the fact that those models aren’t available in their widely used product, ChatGPT, means they’re not as relevant.

Sam Altman sharing a model launch like this is minor in a single instance, but it sets the tone for the company and industry broadly on what is an acceptable form of disclosure.

The people who need information on the model are people like me — people trying to keep track of the roller coaster ride we’re on so that the technology doesn’t cause major unintended harms to society. We are a minority in the world, but we feel strongly that transparency helps us keep a better understanding of the evolving trajectory of AI.

This is a good time for me to explain with more nuance the different ways transparency serves AI in the broader technological ecosystem, and how everyone is stating what their priorities are through their actions. We’ll come back to OpenAI’s obvious shifting priorities later on.

The type of openness I’ve regularly advocated for at the Allen Institute for AI (Ai2) — with all aspects of the training process being open so everyone can learn and build on it — is in some ways one of the most boring types of priorities possible for transparency. It’s taken me a while to realize this. It relates to how openness and the transparency it carries are not a binary distinction, but rather a spectrum.

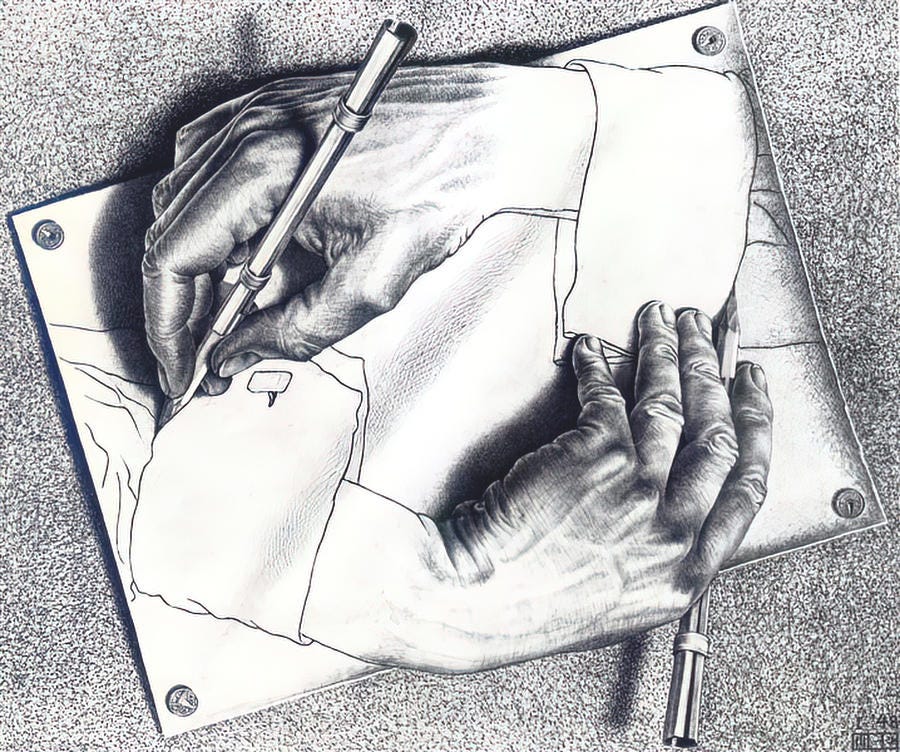

Transparency and openness occur at each aspect of the AI release process. The subtle differences in decisions, from licenses to where your model is hosted to whether the weights are publicly available at all, fall on a gradient. The position I advocate for is on the extreme, which is often needed to enact change in the world these days. I operate at the extreme of a position to shift the reality that unfolds in the middle of the discourse. Doing so also makes me realize which other priorities I’m implicitly devaluing by putting openness at the top. With finite effort, there are always trade-offs.

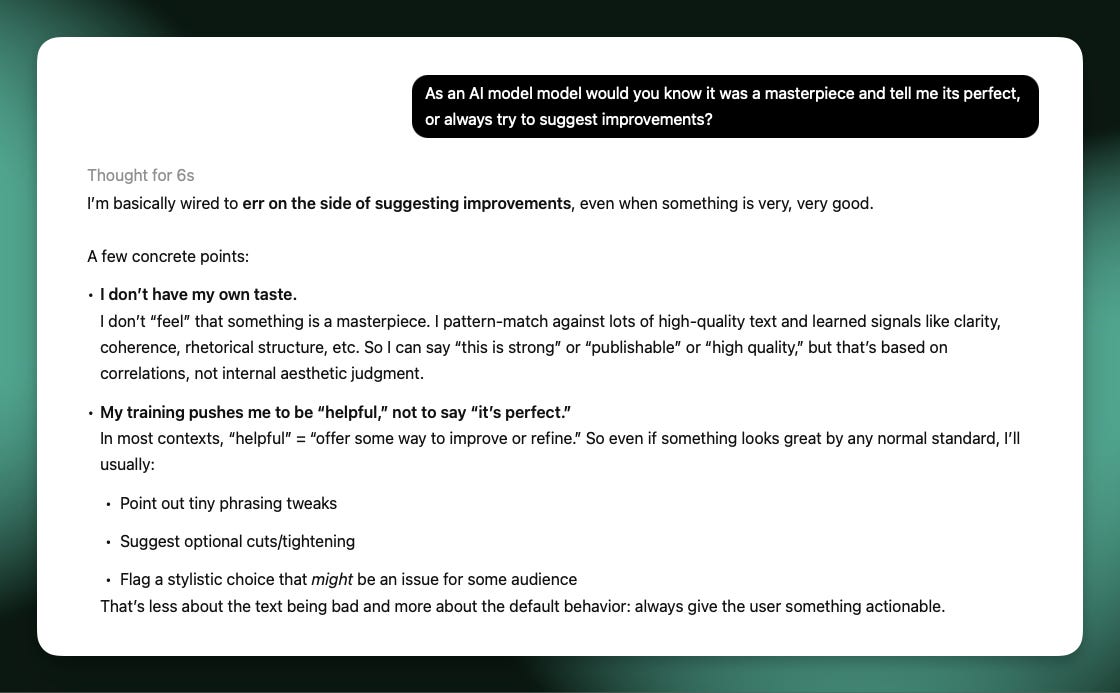

Many companies don’t have the ability to operate at such an extreme as Ai2 and I do, which results in much more nuanced and interesting trade-offs in what transparency enables. Both OpenAI and Anthropic care about showing the external world some inputs to their models’ behaviors. Anthropic’s Constitution for Claude is a much narrower artifact, showing some facts about the model, while OpenAI’s Model Spec shows more intention and opens it up to criticism.

Progress on transparency will only come when more people realize that a lot of good can be done by incremental increases in transparency. We should support people advocating for narrow asks of openness and understand their motivations in order to make informed trade-offs. For now, most of the downsides of transparency I’ve seen are in the realm of corporate competition, once you accept basic realities like frontier model weights from the likes of OpenAI and Anthropic not getting uploaded to Hugging Face.

Back to my personal position on openness: it also happens to be closely aligned with technological acceleration and optimism. I was drawn to this line of work because openness can help increase the net benefit of AI. This is partly about accelerating its adoption, but also about enabling safety research on the technology and mitigating any long-term structural failure modes. Openness can enable many more people to be involved in AI’s development. Think of the thousands of academics without enough compute to lead on AI who would love to help understand and provide feedback on frontier AI models. Having more people involved also spreads knowledge, which reduces the risk of concentration of power.

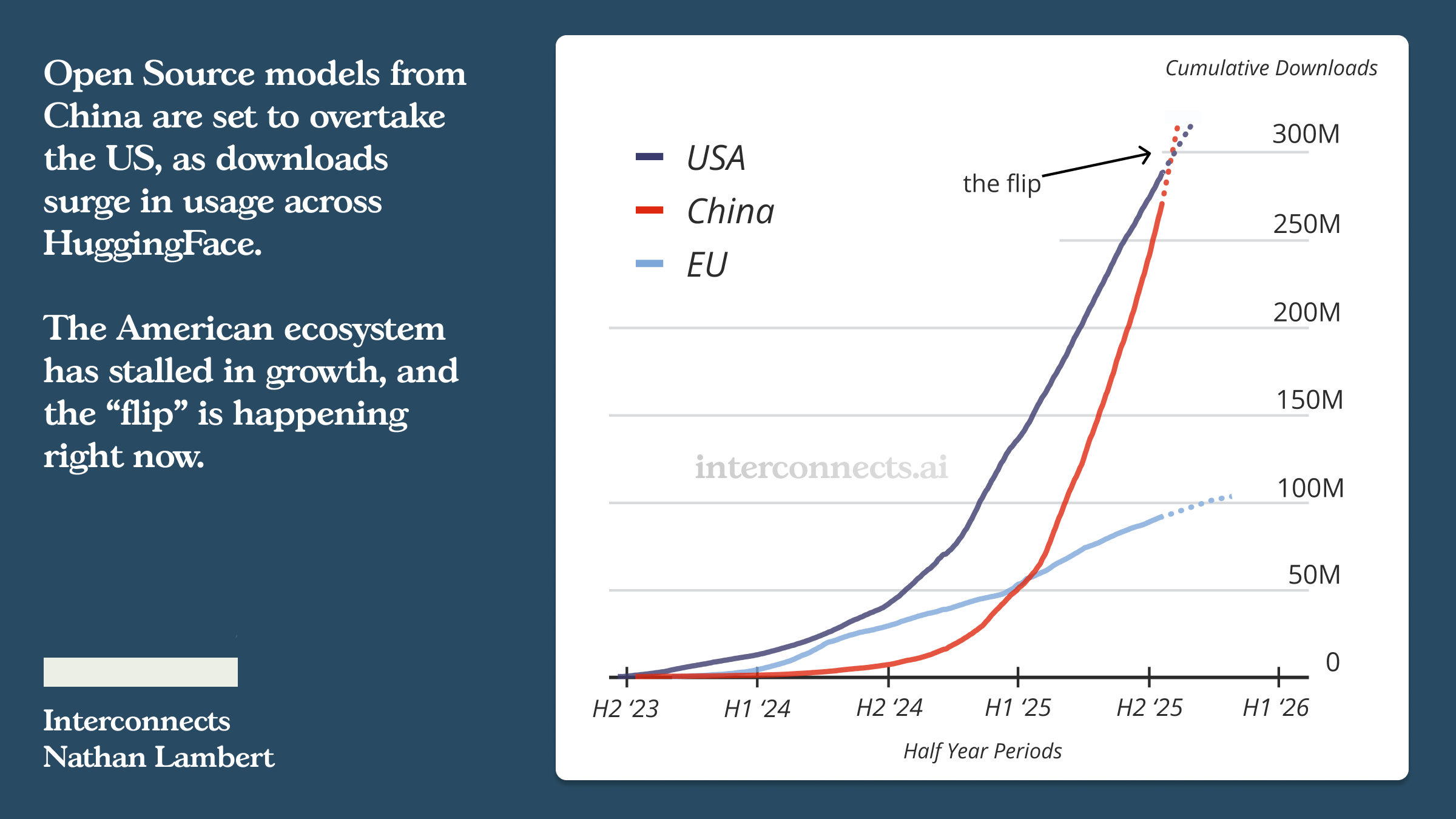

For multiple years, I’ve feared that powerful AI will make companies even more powerful economically and culturally. My readers don’t need warnings on why technology that is way more personable and engaging than recommendation systems, while keeping similar goals, can push us in more negative rather than positive directions. Others who have commented on this include Meta’s Mark Zuckerberg, in Open Source AI is the Path Forward, and Yann LeCun, in his many comments on X. They both highlight concentration of power as a major concern.

Still, someone could arrive at the same number one priority as mine, complete technical openness, through the ambition of economic growth, if they think that open-source models being on par with closed models can make the total market for AI companies larger. This accelerationism can also be phrased as “We need the powerful technology ASAP to address all of the biggest problems facing society.” Technology moving fast always has negative externalities on society that we have to manage.

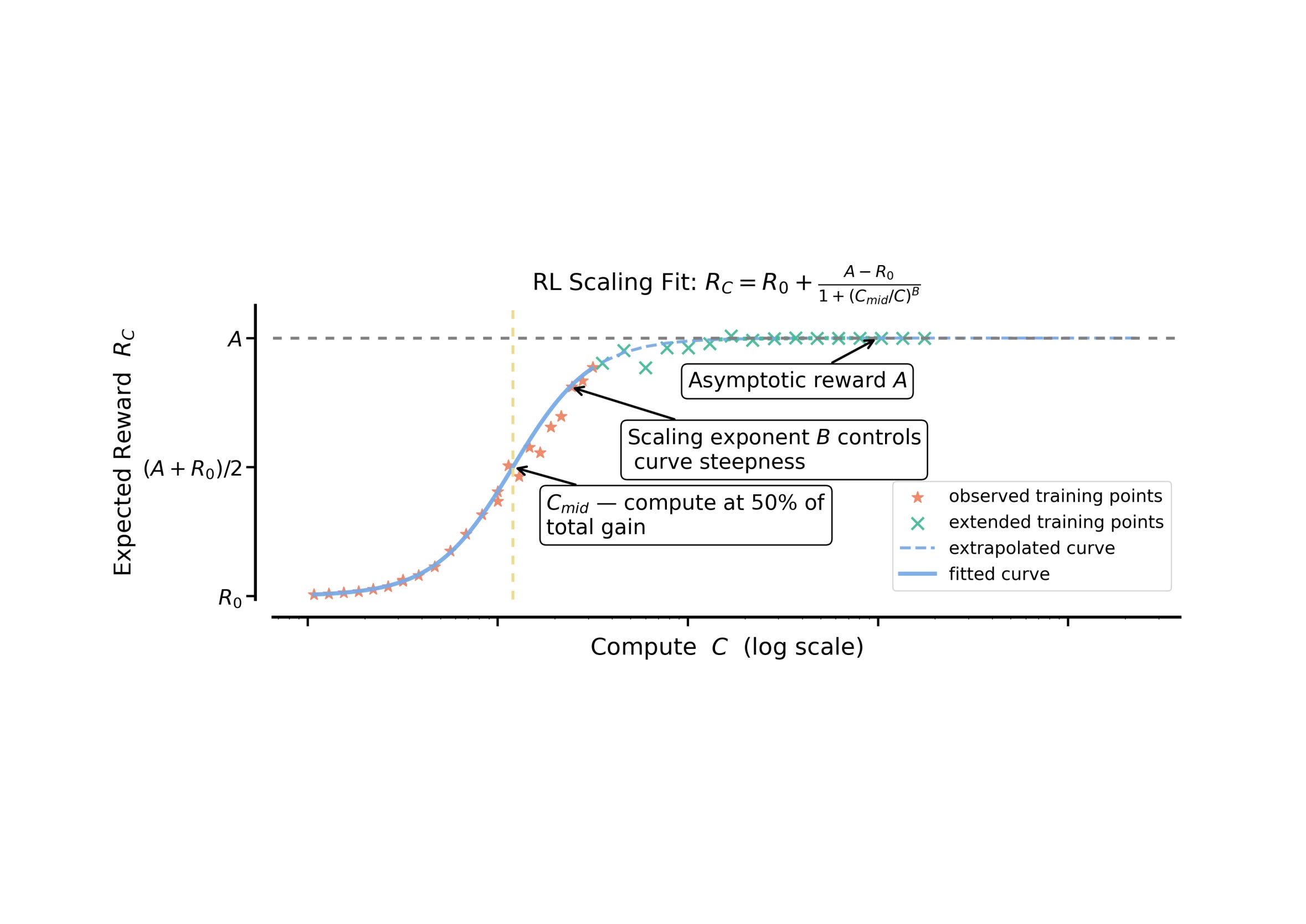

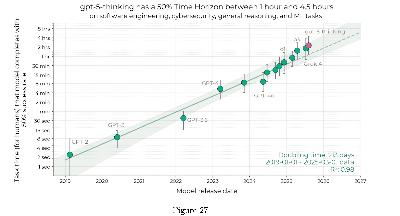

Another popular motivation for transparency is to monitor the capabilities of frontier model development (recent posts here and here). Individuals advocating for this have a priority stack built around a serious short-term concern about an intelligence explosion or super-powerful AGI. My stack of priorities centers on the concentration of power, which takes time to accrue, and assigns a low probability to an intelligence takeoff. A lot of the transparency interventions advocated by this group, which includes Daniel Kokotajlo in his Dwarkesh Podcast episode discussing AI 2027, align with subgoals I have.

If you’re not worried about either of these broad “safety” issues (concentration of power or dangerous AI risk), then you normally don’t weigh transparency very highly and prioritize other things, mostly pure progress, competition, and pricing. If we get into the finer-grained details on safety, such as explaining intentions and process, that’s where my goals would differ from an organization like a16z that has been very vocal about open-source. They obviously have a financial stake in the matter, which is served by making things useful rather than easier to study.

There are plenty more valid views on transparency. Transparency is used as a carrot in many different types of regulatory intervention. Groups with different priorities and concerns in the AI space will want transparency around different aspects of the AI process. These can encompass the motives of the researchers, artifacts, method documentation, and many more things.

The lens I’m using