Ben Golub: AI Referees, Social Learning, and Virtual Currencies

Description

In this episode, we sit down with Ben Golub, economist at Northwestern University, to talk about what happens when AI meets academic research, social learning, and network theory.

We start with Ben’s startup Refine, an AI-powered technical referee for academic papers. From there, the conversation ranges widely: how scholars should think about tooling, why “slop” is now cheap, how eigenvalues explain viral growth, and what large language models might do to collective belief formation. We get math, economics, startups, misinformation, and even cow tipping.

Links & References

* Refine — AI referee for academic papers

* Harmonic — Formal verification and proof tooling for mathematics

* Matthew O. Jackson — Stanford economist and leading scholar of networks and social learning

* Cow tipping (myth) — Why you can’t actually tip a cow (physics + folklore)

* The Hype Machine — Sinan Aral on how social platforms amplify misinformation

* Sequential learning / information cascades / DeGroot Model

* AI Village — Multi-agent AI simulations and emergent behavior experiments

* Virtual currencies & Quora credits — Internal markets for attention and incentives

Transcript:

Seth: Welcome to Justified Posteriors, the podcast that updates its beliefs about the economics of AI and technology.

Seth: I’m Seth Benzell, hoping my posteriors are half as good as the average of my erudite friends, coming to you from Chapman University in sunny Southern California.

Andrey: And I’m Andrey Fradkin, coming to you from San Francisco, California, and I’m very excited that our guest today is Ben Golub, a prominent economist at Northwestern University. Ben has won the Calvó-Armengol International Prize, which recognizes a top researcher in economics or social science younger than 40 for contributions to the theory and comprehension of mechanisms of social interaction.

Andrey: So if you want someone to analyze your social interactions, Ben is definitely the guy.

Seth: If it’s in the network,

Andrey: Yeah. He was also a member of the Harvard Society of Fellows and had a brief stint as an intern at Quora, and we’ve known each other for a long time. So welcome to the show, Ben.

Ben: Thank you, Andrey. Thank you, Seth. It’s wonderful to be on your podcast.

Refine: AI-Powered Paper Reviewing

Andrey: All right. Let’s get started. I want to start with what has very likely been most on your mind, Ben, which is your new endeavor, Refine.Ink. Why don’t you give us the three-minute spiel about what you’re doing?

Seth: And tell us why you didn’t name your tech startup after a Lord of the Rings character.

Ben: Man, that’s a curveball right there. All right, I’ll tell you what, I’ll put that on background processing. So what Refine is: it’s an AI technical referee. From a user perspective, you just give it a paper, and you get the experience of a really obsessive research assistant reading for as long as it takes to get through the whole thing, probing it from every angle, asking every lawyerly question about whether things make sense.

Ben: And then that feedback, hopefully the really valuable parts that an author would want to know, is distilled and delivered. As my co-founder Yann Calvó López puts it, obsessiveness is really the nature of the company. We just bottled it up and we give it to people. So that’s the basic product: it’s an AI tool, and it obviously uses AI to do all of this thinking. One thing I’ll say about it is that I have long felt it was a scandal that the level of tooling for scholars is a tiny fraction of what it is for software engineers.

Ben: And obviously software engineering is a much larger and more economically valuable

Seth: Boo.

Ben: At least

Andrey: Oh, disagree.

Ben: In certain immediate quantifications. But ever since I’ve been using tech, I’ve thought: imagine if we had really good tools. And then there was this perfect storm where my co-founder and I felt we could make a tool that was state of the art, for now. So that’s how I think of it.

Seth: I have to quibble with you a little bit about the user experience, because for me, step zero was my jaw dropping to the floor at the sticker price. How much do you...

Ben: not,

Seth: But I will say I have used it myself, and on a paper I recently submitted, it really did find a technical error, the kind of error you wouldn’t find just by throwing the paper into ChatGPT, at least as of a few months ago. Who knows with the latest Gemini. But it really impressed me in my limited time using it.

Andrey: So.

Ben: If you think about the sticker price, compare it to what you’d have had to pay for the time it takes to find that error.

Seth: Yeah. And water. If I didn’t have water, I’d die, so I should pay a million for water.

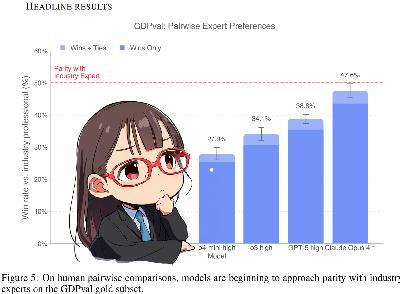

Andrey: A question I had: how do you know it’s good? Isn’t this whole evals thing very tricky?

Seth: Hmm.

Andrey: Is there a paper-review benchmark that you’ve come across, or did you develop your own?

Ben: Yeah. That’s a wonderful question. Andrey is super insightful about AI, and this goes to the core of the issue, because all the engineers we work with immediately say, okay, I get what you’re doing.

Ben: Give me the evals, give me the standard of quality, so we know we’re objectively doing a good job. What we have is a set of papers where we know the ground truth. We basically know everything that’s wrong with them, and we run them on every model update, so that’s a small set of fairly manual evaluations. I think one of the things users experience is that they know their own papers well and can see over time that sometimes we find issues they already know about, and sometimes we find other issues, and we can see whether those are correct.
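With a set of papers whose problems are already known, the natural scores are precision (what share of flagged issues are real) and recall (what share of known issues get flagged). The sketch below is a minimal illustration of that arithmetic, assuming each review has already been reduced to a set of issue labels; the labels and the function name are hypothetical, and this is not a description of Refine's actual eval pipeline.

```python
# Minimal sketch: pooled precision/recall of a reviewer's flagged issues
# against hand-labelled "ground truth" issues for a set of papers.
# Issue labels are hypothetical; matching a free-text comment to a known
# issue is itself a judgment call that this sketch assumes was already made.

Paper = tuple[set[str], set[str]]  # (issues flagged by the tool, known issues)


def pooled_precision_recall(papers: list[Paper]) -> tuple[float, float]:
    tp = fp = fn = 0
    for flagged, known in papers:
        tp += len(flagged & known)  # flagged and actually a known issue
        fp += len(flagged - known)  # flagged but not among the known issues
        fn += len(known - flagged)  # known issue the tool missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


papers = [
    ({"lemma2-sign", "eq7-typo"}, {"lemma2-sign", "prop3-assumption"}),
    ({"thm1-gap"}, {"thm1-gap"}),
]
print(pooled_precision_recall(papers))
```

Here two of the three flags match known issues and two of the three known issues are caught, so both scores are 2/3. One caveat that matches what Ben describes: a "false positive" may be a genuine problem missing from the ground-truth list, which is why someone still has to check whether newly found issues are correct.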

Ben: We’re not at the point where we can make confident precision-recall-type assessments. But another thing we do, which I find cool, is for when our competitors put out tools. For example, Andrew Ng put out a cool paper-reviewer tool targeted at CS conferences.

Ben: What we do is run their tool, run ours, put both reviews into Gemini 2.0, and ask: could you please assess these side by side as reviews of the same paper? Which one caught mistakes? We try to make it a very neutral prompt, and that’s an eval that is easy to carry out.
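The side-by-side comparison Ben describes is a pairwise "LLM as judge" setup. Below is a minimal sketch under stated assumptions: `ask_model` is a hypothetical stand-in for whatever model API does the judging (no real API is called), and the prompt wording is illustrative. Randomizing which review appears first is our addition, a common guard against a judge's position bias, not something stated in the conversation.

```python
import random
from typing import Callable

# Minimal sketch of a side-by-side "LLM as judge" comparison of two reviews.
# `ask_model` is a hypothetical function: prompt string in, model reply out.
PROMPT = (
    "Below are two reviews of the same paper.\n"
    "Assess them side by side as reviews of that paper. Which one catches "
    "more genuine mistakes? Reply with exactly 1 or 2.\n\n"
    "Review 1:\n{first}\n\nReview 2:\n{second}\n"
)


def judge_pair(review_a: str, review_b: str,
               ask_model: Callable[[str], str], rng: random.Random) -> str:
    """Return "A" or "B". The presentation order is randomized so that a
    judge's position bias does not systematically favour either tool."""
    swapped = rng.random() < 0.5
    first, second = (review_b, review_a) if swapped else (review_a, review_b)
    answer = ask_model(PROMPT.format(first=first, second=second)).strip()
    if answer not in ("1", "2"):
        raise ValueError(f"unexpected judge reply: {answer!r}")
    first_won = answer == "1"
    # Undo the swap: A won exactly when "first won" and "swapped" differ.
    return "A" if first_won != swapped else "B"


def win_rate_a(pairs: list[tuple[str, str]],
               ask_model: Callable[[str], str], seed: int = 0) -> float:
    """Fraction of papers on which review A is judged better than review B."""
    rng = random.Random(seed)
    wins = sum(judge_pair(a, b, ask_model, rng) == "A" for a, b in pairs)
    return wins / len(pairs)


# Stub judge for illustration only: prefers whichever review mentions a sign error.
def stub_judge(prompt: str) -> str:
    review_1_part = prompt.split("Review 2:\n")[0]
    return "1" if "sign error" in review_1_part else "2"


pairs = [("Found a sign error in Lemma 2.", "Looks fine.")] * 4
print(win_rate_a(pairs, stub_judge))
```

Because the stub judge always picks the review that mentions the sign error, review A wins every comparison regardless of the random ordering. With a real model in place of the stub, the win rate would be a rough, judge-dependent signal rather than a ground-truth measure.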

Ben: But actually, we’re in the market: we’d love to work with people who are excited about building evals for Refine. We finally have the resources to take a serious run at it as founders. The simple truth is that because my co-founder and I are researchers as well as founders, we constantly look at how it’s doing on documents we know.

Ben: And it’s a very seat of the pants thing for now, to tell the truth.

Andrey: Is there a data-driven aspect here, where one of your friends puts their paper into it and says, well, you didn’t catch this mistake, or you didn’t catch that mistake, and then you optimize towards that? Is that a big part of your development process?

Ben: Yeah, it was more so early on. I think we’ve reached an equilibrium where, for the feedback of that form that we hear, there’s usually a cost to catching it. But early on, I would just tell everyone I could find, and there were a few. When I finally had the courage to tell my main academic group chat about it, people immediately had very clear feedback. I think the first reasoning model we used for the substantive feedback was DeepSeek R1, and we immediately felt, okay, this is 90% slop.

Ben: And that’s where we started iterating. One great thing about having academic friends is that they’re not gonna be shy to tell you what they thought of your paper.

Refereeing Math and AI for Economic Theory

Andrey: One thing that we wanted to dig a little bit into is how you think about refereeing math and

Seth: Mm-hmm.

Andrey: More generally, opening it up: how are economic theorists using AI for math?

Ben: So say a little more about your question. When you say math

Seth: Well, we see people (Axiom, I think, is the name of the company) immediately converting these written proofs into Lean. Is that the end game for your tool?

Ben: I see, yes. So good. Our vision for the company is that, at least for quite a while, there’s gonna be this product layer between the core AI models and the things that are necessary to bring your median, ambitious

Seth: Middle

Ben: not

Seth: theorists, that’s what we call ourselves.

Ben: Well, yeah. Or middle. But in a technical dimension, I think it’s almost certainly true that the median economist almost never uses GitHub. If you told them, they set u