Claude Just Refereed the Anthropic Economic Index

Description

In this episode of Justified Posteriors, we dive into the paper "Which Economic Tasks Are Performed with AI? Evidence from Millions of Claude Conversations." We analyze Anthropic's effort to categorize how people use their Claude AI assistant across different economic tasks and occupations, examining both the methodology and implications with a critical eye.

We came into this discussion expecting coding and writing to dominate AI usage patterns, and while the data largely confirms this, our conversation highlights several surprising insights. Why are computer and mathematical tasks so heavily overrepresented, while office administrative work lags behind? What explains the notably low usage for managerial tasks, despite AI's apparent suitability for scheduling and time management?

We raise questions about the paper's framing: Is a gamer asking for help with their crashing video game really engaging in "economic activity"? How much can we learn from analyzing four million conversations when only 150 were human-verified? And what happens when different models specialize—are people going to Claude for coding but elsewhere for art generation?

We also asked Claude itself to review this paper about Claude usage, revealing some surprisingly pointed critiques from the AI about the paper's fundamental assumptions.

Throughout the episode, we balance our appreciation for this valuable descriptive work with thoughtful critiques, ultimately suggesting directions for future research that could better connect what people currently use AI for with its potential economic impact. Whether you're interested in AI adoption, labor economics, or just curious about how people are actually using large language models today, we offer our perspectives as economists studying AI's integration into our economy.

Join us as we update our beliefs about what the Anthropic Economic Index actually tells us—and what it doesn't—about the future of AI in economic tasks. The full transcript is available at the end of this post.

The episode is sponsored by the Digital Business Institute at Boston University’s Questrom School of Business. Big thanks to Chih-Ting (Karina) Yang for her help editing the episode.


🔗 Links to the papers for this episode’s discussion:

Which Economic Tasks are Performed with AI? Evidence from Millions of Claude Conversations

GPTs are GPTs: Labor market impact potential of LLMs


💻 Follow us on Twitter:

@AndreyFradkin https://x.com/andreyfradkin?lang=en

@SBenzell https://x.com/sbenzell?lang=en

Transcript

Seth: Welcome to the Justified Posteriors Podcast, the podcast that updates beliefs about the economics of AI and technology. I'm Seth Benzell, with nearly half of my total output constituting software development and writing tasks, coming to you from Chapman University in sunny Southern California.

Andrey: And I'm Andrey Fradkin, enjoying playing around with Claude 3.7, coming to you from Cambridge, Massachusetts.

Seth: So Andrey, what's the last thing you used AI for?

Andrey: The last thing I used AI for? Well, it's a great question, Seth, because I was so excited about the new Anthropic model that I decided to test run it by asking it to write a referee report about the paper we are discussing today.

Seth: Incredible. It's a little bit meta, I would say, given the topic of the paper. Maybe we can hold in our back pockets the results of that experiment for later. What do you think?

Andrey: Yeah, I think we don't want to spoil the mystery about how Claude reviewed the work of its creators.

Seth: Claude reviewing the work of its creators - can Frankenstein's monster judge Frankenstein? Truly. So Andrey, maybe we've danced around this a little bit, but why don't you tell me what's the name of today's paper?

Andrey: The name of the paper is a bit of a mouthful: "Which Economic Tasks Are Performed with AI? Evidence from Millions of Claude Conversations." But on a more easy-to-explain level, the paper introduces the Anthropic Economic Index, which is a measure of how people use the Claude chatbot, and demonstrates how it can be useful in a variety of interesting ways for thinking about what people are using AI for.

Seth: Right. So at a high level, this paper is trying to document what people are using Claude for. I was also perplexed that they refer to this paper as an AI index, given that an index usually means a number, and it's unclear what the one number is that they want you to take away from this analysis. But that doesn't mean they don't give you a lot of interesting numbers over the course of their analysis of how people are using Claude.

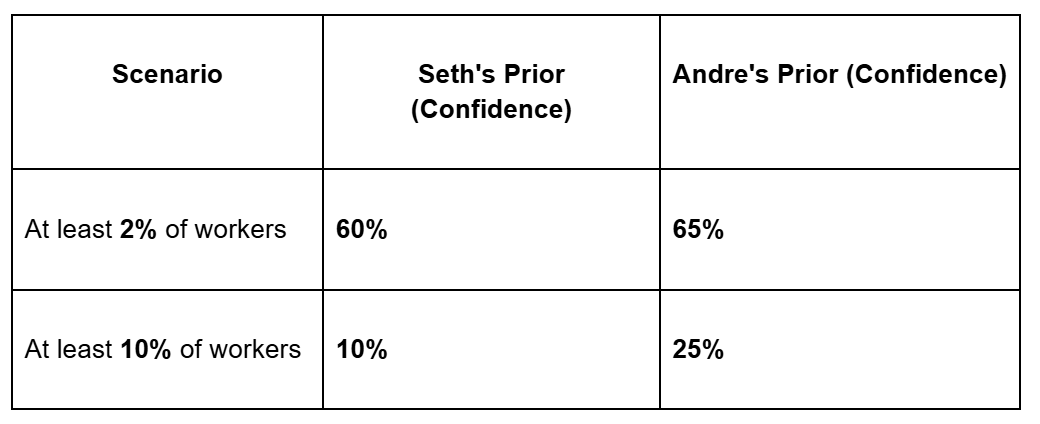

Andrey: So before we get into the paper a bit more, let's talk about the narrow and broad claims and what our priors are. The narrow claim is maybe what specifically are people using Claude for. Do we think this is a representative description of the actual truth? The authors divide up the analysis in many different ways, but one way to think about it is: is it true that the primary uses of this chatbot are computer and mathematical tasks? And is it also true that relatively few people use the chatbot for office and administrative support as well as managerial decision making?

Seth: Those are excellent questions. The first question is what are people using Claude for right now? And do we buy that the way they're analyzing the usage data gives us an answer to that question? Before I answer whether I think Claude's approach in analyzing their own chats is appropriate, let me tell you what my sense was coming in. If you had asked "What are people using chatbots for right now?" I would have guessed: number one, they're using it for doing their homework instead of actually learning the material, and number two, actual computer programmers are using it to speed up their coding. It can be a great coding assistant for speeding up little details.

Although homework wasn't a category analyzed by Claude, they do say that nearly half of the tasks they see people using these AI bots for are either some form of coding and software development or some form of writing. And of course, writing could be associated with tasks in lots of different industries, which they try to divide up. If you told me that half of what people use chatbots for is writing help and coding help - if anything, I would have thought that's on the low side. To me, that sounds like 80 percent of use cases.

Andrey: I think I'd say I'm with you. I think we probably agree on our priors. I'd say that most of the tasks I would expect to be done with the chatbot might be writing and programming related. There's a caveat here, though - there's a set of behaviors using chatbots for entertainment's sake. I don't know how frequent that is, and I don't know if I would put it into writing or something else, but I do know there is a portion of the user base that just really likes talking to Claude, and I don't know where that would be represented in this dataset.

Seth: Maybe we'll revisit this question when we get to limitations, but I think one of the limitations of this work is they're trying to fit every possible usage of AI into this government list of tasks that are done in the economy. But I've been using AI for things that aren't my job all the time. When America came up with this O*NET database of tasks people do for their jobs, I don't think they ever pretended for this to be a list of every task done by everyone in America. It was supposed to be a subset of tasks that seem to be economically useful or important parts of jobs that are themselves common occupations. So there are some limitations to this taxonomical approach right from the start.

Coming back to your point about people playing around with chatbots instead of using them for work - I have a cousin who loves to get chatbots to write slightly naughty stories, and then he giggles. He finds this so amusing! Presumably that's going to show up in their data as some kind of creative writing task.

Andrey: Yeah.

Seth: So moving from the question of what we think people are using chatbots for - where I think we share this intuition that it's going to be overwhelmingly coding and writing - now we go to this next question you have, which is: to what extent can we just look at conversations people have with chatbots and translate the number of those conversations or what sort of things they talk about into a measure of how people are going to usefully be integrating AI into the economy? There seems to be a little bit of a step there.

Andrey: I don't think the authors actually make the claim that this is a map of where the impact is going to be. I think they mostly just allude to the fact that this is a really useful system for real-time tracking of what the models are being used for. I don't think the authors would likely claim that this is a sign of what's to come necessarily. But it's still an interesting question.

Seth: I hear that, but right on the face, they call it the Anthropic Economic I