Did Meta's Algorithms Swing the 2020 Election?

Description

We hear it constantly: social media algorithms are driving polarization, feeding us echo chambers, and maybe even swinging elections. But what does the evidence actually say?

In the darkest version of this narrative, social media platform owners are shadow king-makers and puppet masters who can pick the winner of a close election by selectively promoting narratives. Amorally, they disregard the heightened political polarization and anxiety that result from their manipulation of the public psyche.

In this episode, we dive into an important study published in Science (How do social media feed algorithms affect attitudes and behavior in an election campaign? https://www.science.org/doi/10.1126/science.abp9364) that tackled this question. Researchers worked with Meta to experimentally change the feeds of tens of thousands of Facebook and Instagram users in the crucial months surrounding the 2020 election. One of the biggest belief swings in the history of Justified Posteriors is in this one!

The Core Question: What happens when you swap out the default, engagement-optimized algorithmic feed for a simple, reverse-chronological one showing posts purely based on recency?

Following our usual format, we lay out our priors before dissecting the study's findings:

* Time Spent: The algorithmic feed kept users scrolling longer.

* Content Consumed: The types of content changed in interesting ways. Users of the chronological feed saw more posts from groups and pages, more political content overall, and, paradoxically, more content from untrustworthy news sources.

* Attitudes & Polarization: The study found almost no effect on key measures like affective polarization (how much you dislike the other side), issue polarization, political knowledge, or even self-reported voting turnout.

So, is the panic over algorithmic manipulation overblown?

While swapping this specific algorithmic ranking for a chronological feed seems to have had minimal direct impact on core political beliefs in this timeframe, other issues are at play:

* Moderation vs. Ranking: Does this study capture the effects of outright content removal or down-ranking (think the Hunter Biden laptop controversy)?

* Long-term Effects & Spillovers: Could small effects accumulate over years, or did the experiment miss broader societal shifts?

* Platform Power: Even if this comparison yields null results, does it mean platforms couldn't exert influence if they deliberately tweaked algorithms differently (e.g., boosting a specific figure like Elon Musk on X)?

(Transcript below)

🗞️ Subscribe for upcoming episodes, post-podcast notes, and Andrey’s posts:

💻Follow us on Twitter:

@AndreyFradkin https://x.com/andreyfradkin?lang=en

@SBenzell https://x.com/sbenzell?lang=en

Transcript:

Andrey: We might have naively expected that the algorithmic feed serves people their "red meat"—very far-out, ideologically matched content—and throws away everything else. But that is not what is happening.

Seth: Welcome everyone to the Justified Posteriors podcast, where we read and are persuaded by research on economics and technology so you don't have to. I'm Seth Benzell, a man completely impervious to peer influence, coming to you from Chapman University in sunny Southern California.

Andrey: And this is Andrey Fradkin, effectively polarized towards rigorous evidence and against including tables in the back of the article rather than in the middle of the text.

Seth: Amazing. And who's our sponsor for this season?

Andrey: Our sponsor for the season is the Digital Business Institute at the Questrom School of Business at Boston University. Thanks to the DBI, we're able to provide you with this podcast.

Seth: Great folks. My understanding is that they're sponsoring us because they want to see information like ours out there on various digital platforms, such as social media, right? Presumably, Questrom likes the idea of information about them circulating positively. Isn't that right?

Andrey: Oh, that's right. They want you to know about them, and by virtue of listening to us, you do. But I think, in addition, they want us to represent the ideal of what university professors should be doing: evaluating evidence and contributing to important societal discussions.

Andrey: So with that set, what are we going to be talking about today?

Seth: Well, we're talking about the concept of participating in important societal discussions itself. Specifically, we're discussing research conducted and published in Science, a prestigious journal. The research was conducted on the Facebook and Instagram platforms, trying to understand how those platforms are changing the way American politics works.

The name of the paper is, "How Do Social Media Feed Algorithms Affect Attitudes and Behavior in an Election Campaign?" by Guess et al. There are many co-authors who I'm sure did a lot of work on this paper; like many Science papers, it's a big team effort. See the show notes for the full credit – we know you guys put the hours in.

This research tries to get at the question, specifically in the 2020 election, of to what extent decisions made by Mark Zuckerberg and others about how Facebook works shaped America's politics. It's an incredibly exciting question.

Andrey: Yeah, this is truly a unique study, and we'll get into why in just a bit. But first, as you know, we need to state our prior beliefs about what the study will find. We're going to pose two claims: one narrow and one broader. Let's start with the narrow claim.

Seth: Don't state a claim, we hypothesize, Andrey.

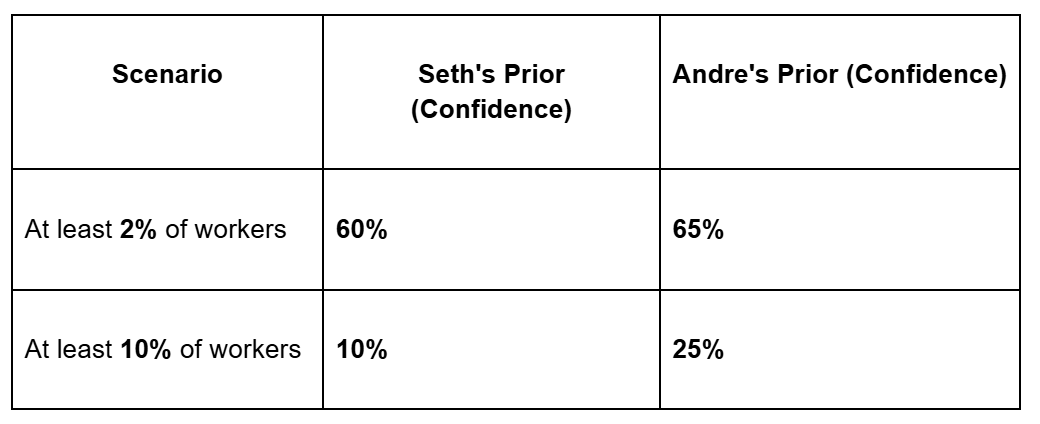

Andrey: Pardon my imprecision. A hypothesis, or question, if you will: How did the algorithmic feed on Facebook and Instagram affect political attitudes and behavior around the time of the 2020 presidential election? Seth, what is your prior?

Seth: Alright, I'm putting myself in a time machine back to 2020. It was a crazy time. The election was at the end of 2020, and the pandemic really spread in America starting in early 2020. I remember people being hyper-focused on social media because everyone was locked in their houses. It felt like a time of unusually high social media-generated peer pressure, with people pushing in both directions for the 2020 election. Obviously, Donald Trump is a figure who gets a lot of digital attention – I feel like that's uncontroversial.

On top of that, you had peak "woke" culture at that time and the Black Lives Matter protests. There was a lot of crazy stuff happening. I remember it as a time of strong populist forces and a time when my experience of reality was really influenced by social media. It was also a time when figures like Mark Zuckerberg were trying to manage public health information, sometimes heavy-handedly silencing real dissent while trying to act for public welfare.

So, that's a long wind-up to say: I'm very open to the claim that Facebook and Instagram had a thumb on the scale during the 2020 election season, broadly in favor of chaos or political polarization – BLM on one side and MAGA nationalism on the other. At the same time, maybe vaguely lefty technocratic, like the "shut up and listen to Fauci" era. Man, I actually have a pretty high prior on the hypothesis that Facebook's algorithms put a real thumb on the scale. Maybe I'll put that around two-thirds. How about you, Andrey?

Andrey: In which direction, Seth?

Seth: Towards leftiness and towards political chaos.

Andrey: And what variable represents that in our data?

Seth: Very remarkably, the paper we studied does not test lefty versus righty; they do test polarization. I don't want to spoil what they find for polarization, but my prediction was that the algorithmic feed would lead to higher polarization. That was my intuition.

Andrey: I see. Okay. My prior on this was very tiny effects.

Seth: Tiny effects? Andrey, think back to 2020. Wasn't anything about my introduction compelling? Don't you remember what it was like?

Andrey: Well, Seth, if you recall, we're not evaluating the overall role of social media. We're evaluating the role of a specific algorithm versus not having an algorithmic feed and having something else – the reverse chronological feed, which shows items in order with the newest first. That's the narrow claim we're putting a prior on, rather than the much broader question of what social media in general did.

Seth: Yeah, but I guess that connects to my censorship comments. To the extent that there is a Zuckerberg thumb on the scale, it's coming through these algorithmic weightings, or at least it can come through that.

Andrey: I think we can come back to that. My understanding of a lot of platform algorithm stuff, especially on Facebook, is that people mostly get content based on who they follow – people, groups, news outlets. The algorithm shifts those items around, but in the end, it might not be that different from a chronological feed. Experts in this field were somewhat aware of this already. That's not to say the algori