Evaluating GDPVal, OpenAI's Eval for Economic Value

Description

In this episode of the Justified Posteriors podcast, Seth and Andrey discuss “GDPVal,” a new set of AI evaluations (really a novel approach to AI evaluation) from OpenAI. The metric debuts in a new OpenAI paper, “GDP Val: Evaluating AI Model Performance on Real-World, Economically Valuable Tasks.” We discuss this “bottom-up” approach to the possible economic impact of AI (which evaluates hundreds of specific tasks, weighting each by its estimated economic value in the economy), and contrast it with Daron Acemoglu’s “top-down” “Simple Macroeconomics of AI” paper (which does the same, but only for aggregate averages), as well as with measures of AI’s use and potential that are less directly tethered to economic value (like Anthropic's AI Economic Value Index and “GPTs are GPTs”). Unsurprisingly, the company pouring hundreds of billions into AI thinks that AI can already do A LOT: perhaps trillions of dollars in knowledge work tasks annually. More surprisingly, OpenAI claims the leading Claude model is better than their own! Do we believe that analysis? Listen to find out!
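To make the “top-down” versus “bottom-up” contrast concrete, here is a minimal Python sketch. It is an illustration only, not the method of either paper: the function names, the task list, and every number are hypothetical placeholders, and weighting each task's value by an AI win rate is our assumption about how one might aggregate task-level results.

```python
# Illustrative sketch only. All names and numbers are hypothetical
# placeholders, not figures from the OpenAI or Acemoglu papers.

def top_down_gain(share_automatable, labor_saving, share_viable):
    """Top-down: multiply three economy-wide averages together."""
    return share_automatable * labor_saving * share_viable


def bottom_up_value(tasks):
    """Bottom-up: score each task separately, then add up.

    Assumption: each task's annual value is weighted by the AI win rate
    measured on that task.
    """
    return sum(t["win_rate"] * t["annual_value_usd"] for t in tasks)


# Two made-up tasks standing in for the hundreds a real evaluation would use.
example_tasks = [
    {"name": "draft legal memo", "win_rate": 0.40, "annual_value_usd": 2_000_000},
    {"name": "build sales deck", "win_rate": 0.50, "annual_value_usd": 1_000_000},
]

aggregate_gain = top_down_gain(0.2, 0.5, 0.25)
task_level_value = bottom_up_value(example_tasks)
print(f"top-down gain (fraction of output): {aggregate_gain:.3f}")
print(f"bottom-up value (USD per year): {task_level_value:,.0f}")
```

The design difference is where the averaging happens: the top-down version averages first and multiplies once, while the bottom-up version multiplies per task and sums, so tasks where AI does unusually well or badly are not washed out.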

Key Findings & Results Discussed

* AI Win Rate vs. Human Experts:

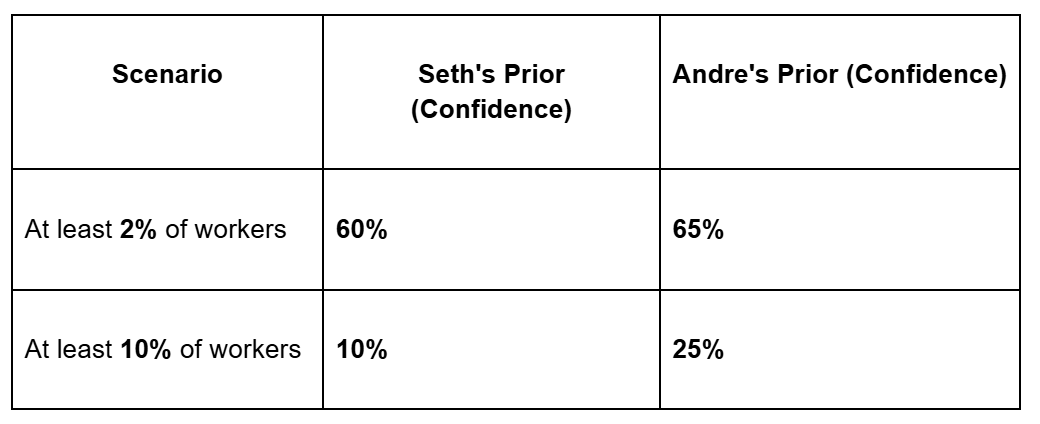

* The Prior: We went in with a prior that a generic AI (like GPT-5 or Claude) would win against a paid human expert in a head-to-head task only about 10% of the time.

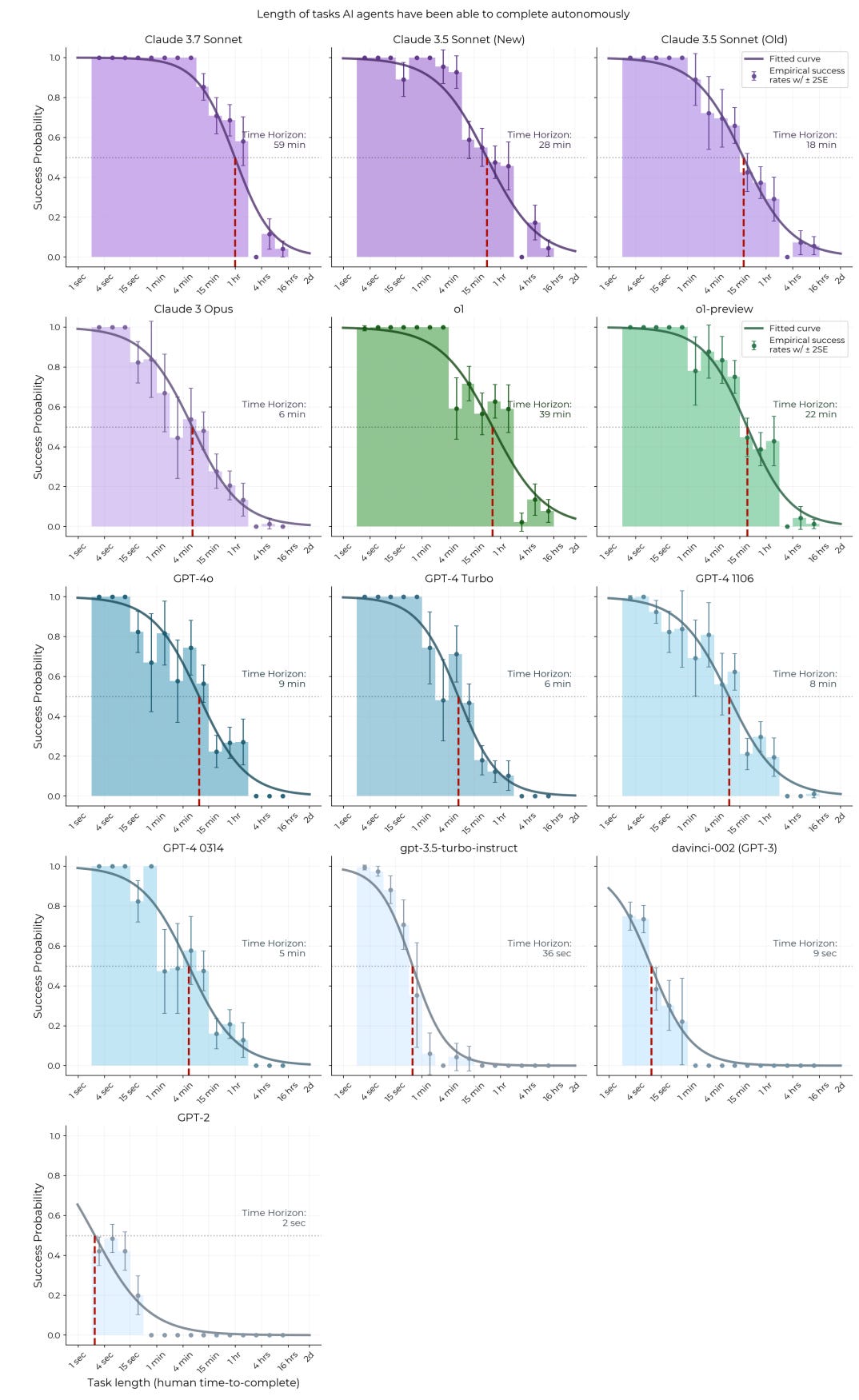

* The Headline Result: The paper found a 47.6% win rate for Claude Opus (near human parity) and a 38.8% win rate for GPT-5 High. This was the most shocking finding for the hosts.

* Cost and Speed Improvements:

* The paper provides a prototype for measuring economic gains. It found that using GPT-5 in a collaborative “N-shot” workflow (where the user can prompt it multiple times) resulted in a 39% speed improvement and a 63% cost improvement over a human working alone.

* The “Catastrophic Error” Rate:

* A significant caveat is that in 2.7% of the tasks the AI lost, the loss was due to a “catastrophic error,” such as insulting a customer, recommending fraud, or suggesting physical harm. This is presumed to be much higher than the human error rate.

* The “Taste” Problem (Human Agreement):

* A crucial methodological finding was that inter-human agreement on which work product was “better” was only 70%. This suggests that “taste” and subjective preferences are major factors, making it difficult to declare an objective “winner” in many knowledge tasks.
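As a rough illustration of what an inter-human agreement number measures, the sketch below computes a simple pairwise agreement rate: the share of grader pairs who picked the same winner on the same task. This is one common definition and an assumption on our part; these notes do not say exactly how the paper computes its 70% figure. The function name and the votes are hypothetical.

```python
from itertools import combinations


def pairwise_agreement(votes_per_task):
    """Share of grader pairs that chose the same winner, pooled over tasks.

    votes_per_task: list of lists; each inner list holds one vote per grader
    for a single task (here the strings "ai" or "human").
    """
    agree = 0
    total = 0
    for votes in votes_per_task:
        for a, b in combinations(votes, 2):
            total += 1
            agree += int(a == b)
    return agree / total if total else float("nan")


# Hypothetical votes from three graders on three tasks.
example_votes = [
    ["ai", "ai", "human"],
    ["human", "human", "human"],
    ["ai", "human", "human"],
]

print(f"pairwise agreement: {pairwise_agreement(example_votes):.3f}")
```

When agreement between graders is well below 100%, a measured win rate partly reflects which grader happened to score the task, which is the “taste” concern raised in the episode.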

Main Discussion Points & Takeaways

* The “Meeting Problem” (Why AI Can’t Take Over):

* Andrey argues that even if AI can automate artifact creation (e.g., writing a report, making a presentation), it cannot automate the core of many knowledge-work jobs.

* He posits that much of this work is actually social coordination, consensus-building, and decision-making—the very things that happen in meetings. AI cannot yet replace this social function.

* Manager of Agents vs. “By Hand”:

* The Prior: We believed 90-95% of knowledge workers would still be working “by hand” (not just managing AI agents) in two years.

* The Posterior: We did not significantly change this belief. We distinguish between “1-shot” delegation (true agent management) and “N-shot” iterative collaboration (which we still classify as working “by hand”). We believe most AI-assisted work will be the iterative kind for the foreseeable future.

* Prompt Engineering vs. Model Size:

* We noted that the models were not used “out-of-the-box” but benefited from significant, expert-level prompt engineering.

* However, we were surprised that the data seemed to show that prompt tuning only offered a small boost (e.g., ~5 percentage points) compared to the massive gains from simply using a newer, larger, and more capable model.

* Final Posterior Updates:

* AI Win Rate: We updated our 10% prior to 25-30%. We remain skeptical of the 47.6% figure.

PS — Should our thumbnails have anime girls in them, or Andrey with giant eyes? Let us know in the comments!

Timestamps:

* (00:45 ) Today’s Topic: A new OpenAI paper (“GDP Val”) that measures AI performance on real-world, economically valuable tasks.

* (01:10 ) Context: How does this new paper compare to Acemoglu’s “Simple Macroeconomics of AI”?

* (04:45 ) Prior #1: What percentage of knowledge tasks will AI win head-to-head against a human? (Seth’s prior: 10%).

* (09:45 ) Prior #2: In two years, what share of knowledge workers will be “managers of AI agents” vs. doing work “by hand”?

* (19:25 ) The Methodology: This study uses sophisticated prompt engineering, not just out-of-the-box models.

* (25:20 ) Headline Result: AI (Claude Opus) achieves a 47.6% win rate against human experts, nearing human parity. GPT-5 High follows at 38.8%.

* (33:45 ) Cost & Speed Improvements: Using GPT-5 in a collaborative workflow can lead to a 39% speed improvement and a 63% cost improvement.

* (37:45 ) The “Catastrophic Error” Rate: How often does the AI fail badly? (Answer: 2.7% of the time).

* (39:50 ) The “Taste” Problem: Why inter-human agreement on task quality (at only 70%) is a major challenge for measuring AI.

* (53:40 ) The Meeting Problem: Why AI can’t (yet) automate key parts of knowledge work like consensus-building and coordination.

* (58:00 ) Posteriors Updated: Seth and Andrey update their “AI win rate” prior from 10% to 25-30%.

Seth: Welcome to the Justified Posteriors Podcast, the podcast that updates its priors on the economics of AI and technology. I’m Seth Benzell, highly competent at many real-world tasks, just not the most economically valuable ones, coming to you from Chapman University in sunny Southern California.

Andrey: And I’m Andrey Fradkin, making sure to never use the Unicode character 2011, since it will not render properly on people’s computers. Coming to you from San Francisco, California.

Seth: Amazing, Andrey. Amazing to have you here in the “state of the future.” And today we’re kind of reading about those AI companies that are bringing the future here today and are gonna, I guess, automate all knowledge work. And here they are today, with some measures of how many jobs (how much economic value of jobs) they think current-generation chatbots can replace. We’ll talk about to what extent we believe those economic extrapolations. But before we go into what happens in this paper from our friends at OpenAI, do you remember one of our early episodes, that macroeconomics of AI episode we did about Daron Acemoglu’s paper?

Andrey: Well, the only thing I remember, Seth, is they were quite simple, those macroeconomics. It was the...

Seth: “Simple Macroeconomics of AI.” So you remembered the title. And if I recall correctly, the main argument of that paper was you can figure out the productivity of AI in the economy by multiplying together a couple of numbers. How many jobs can be automated? Then you multiply it by, if you automate the job, how much less labor do you need? Then you multiply that by, if it’s possible to automate, is it economically viable to automate? And you multiply those three numbers together and Daron concludes that if you implement all current generation AI, you’ll raise GDP by one percentage point. If you think that’s gonna take 10 years, he concludes that’s gonna be 0.1 additional percentage point of growth a year. You can see why people are losing their minds over this AI boom, Andrey.

Andrey: Yeah. Yeah. I mean, you know, I think with so much hype, they should probably just stop investing altogether. That’s kind of what I would think from Daron’s paper. Yeah.

Seth: Well, Andrey, let me tell you: the way I see this paper that we just read is that OpenAI has actually taken on the challenge and said, “Okay, you can multiply three numbers together and tell me the economic value of AI. I’m gonna multiply 200 numbers together and tell you the economic value of AI.” And in particular, rather than just try to take the sort of global aggregate of, like, efficiency from automation, they’re gonna go task by task by task and try to measure: Can AI speed you up? Can it do the job by itself? This is the sort of real-world economics rubber-hits-the-road that you don’t see in macroeconomics papers.

Andrey: Yeah. Yeah. I mean, it is, it is in many ways a very micro study, but I guess micro...

Seth: Macro.

Andrey: Micro, macro. That was the best, actually my favorite.

Seth: Yeah.

Andrey: I guess maybe we should start with our prior, Seth, before we get deeper.

Seth: Well, let’s say the name of the paper and the authors maybe.

Andrey: There are so many authors, so OpenAI... I’m sorry guys. You gotta have fewer co-authors.

Seth: We will not list the authors.

Andrey: But the paper is called “GDP Val: Evaluating AI Model Performance on Real-World, Economically V