Will Super-Intelligence's Opportunity Costs Save Human Labor?

Description

In this episode, Seth Benzell and Andrey Fradkin read “We Won’t Be Missed: Work and Growth in the AGI World” by Pascual Restrepo (Yale) to understand how AGI will change work in the long run. A common metaphor for the post-AGI economy is that AGIs will be to humans what humans are to ants. Will the AGI want to keep the humans around? Some argue that it would: there is the possibility of useful exchange with the ants, even if they are small and weak, because an AGI will, by definition, have opportunity costs. You might view Pascual’s paper as a formalization of this line of reasoning: what would be humanity’s asymptotic marginal product in a world of continually improving super AIs? Does the God Machine have an opportunity cost? Andrey, our man on the scene, attended the NBER Economics of Transformative AI conference to learn more from Pascual Restrepo, Seth’s former PhD committee member. We compare Restrepo’s stripped-down growth logic to other macro takes, poke at the tension between finite-time and asymptotic reasoning, and even detour into a “sheep theory” of monetary policy. If compute accumulation drives growth, do humans retain any essential production role, or only inessential, “cherry on top” accessory ones?

Relevant Links

* We Won’t Be Missed: Work and Growth in the AGI World — Pascual Restrepo (NBER TAI conference) and discussant commentary

* NBER Workshop Video: “We Won’t Be Missed” (Sept. 19, 2025)

* Marc Andreessen, Why Software Is Eating the World (WSJ 2011)

* Shapiro & Varian, Information Rules: A Strategic Guide to the Network Economy (HBR Press)

* Ecstasy: Understanding the Psychology of Joy (Seth’s Review, where you can find the sheep theory of the price level)

Priors and Posteriors

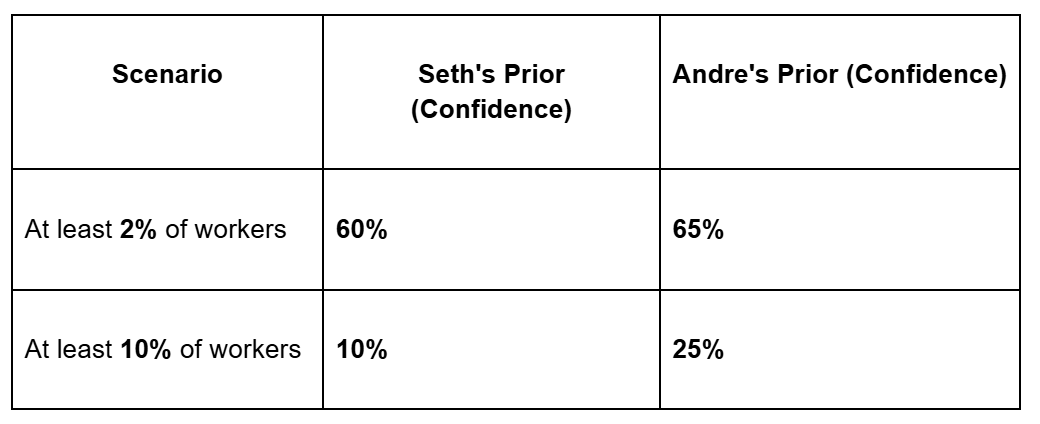

Claim 1 — After AGI, the labor share goes to zero (asymptotically)

* Seth’s prior: >90% chance of a large decline, <10% chance of literally hitting ~0% within 100 years.

* Seth’s posterior: Unchanged. Big decline likely; asymptotic zero still implausible in finite time.

* Andrey’s prior: Skeptical that asymptotic results tell us much about a 100-year horizon.

* Andrey’s posterior: Unchanged. Finite-time dynamics dominate.

* Summary: Compute automates bottlenecks, but socially or physically constrained “accessory” human work probably keeps labor share above zero for centuries.

Claim 2 — Real wages 100 years after AGI will be higher than today

* Seth’s prior: 70% chance real wages rise within a century of AGI.

* Seth’s posterior: 71% (a tiny uptick).

* Andrey’s prior: Agnostic; depends on transition path.

* Andrey’s posterior: Still agnostic.

* Summary: If compute accumulation drives growth and humans still trade on preference-based or ritual tasks, real wages could rise even as labor’s income share collapses.
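To see how the Claim 2 summary can hold, with real wages rising even as labor’s income share collapses, here is a minimal sketch of a hypothetical toy economy. It is not Restrepo’s model. It assumes a CES production function Y = (a·K^ρ + (1 − a)·L^ρ)^(1/ρ), where K stands in for accumulated compute and L is a fixed amount of human labor. The wage is assumed to equal labor’s marginal product. The parameters a and ρ, and the condition 0 < ρ < 1 (compute and labor as gross substitutes), are illustrative assumptions.

```python
"""Toy CES economy: compute K accumulates while human labor L stays fixed.

Hypothetical illustration only; this is NOT the model in Restrepo's paper.
Assumes Y = (a*K**rho + (1 - a)*L**rho)**(1/rho). With 0 < rho < 1,
compute and labor are gross substitutes (elasticity 1/(1 - rho) > 1).
"""


def output(K: float, L: float, a: float, rho: float) -> float:
    """CES output produced from compute K and labor L."""
    return (a * K**rho + (1 - a) * L**rho) ** (1 / rho)


def wage(K: float, L: float, a: float, rho: float) -> float:
    """Wage, assumed equal to labor's marginal product:
    dY/dL = (1 - a) * L**(rho - 1) * Y**(1 - rho)."""
    Y = output(K, L, a, rho)
    return (1 - a) * L ** (rho - 1) * Y ** (1 - rho)


def labor_share(K: float, L: float, a: float, rho: float) -> float:
    """Labor's share of income, w * L / Y."""
    return wage(K, L, a, rho) * L / output(K, L, a, rho)


if __name__ == "__main__":
    a, rho, L = 0.5, 0.5, 1.0  # assumed, purely illustrative parameters
    for K in (1.0, 100.0, 10_000.0):
        print(f"K={K:>8.0f}  wage={wage(K, L, a, rho):6.2f}  "
              f"labor share={labor_share(K, L, a, rho):.3f}")
```

With these assumed numbers, raising compute from 1 to 10,000 lifts the wage from 0.50 to 25.25, while labor’s share falls from 0.50 to about 0.01. The share reaches zero only in the limit and stays positive at any finite K, which is one way to see the finite-time versus asymptotic tension. The result hinges on the substitutes assumption. If instead ρ < 0 (compute and labor as complements), the labor share would rise as compute accumulates.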

Keep your Apollonian separate from your Dionysian—and your accessory work bottlenecked.

Timestamps:

[00:01:47 ] NBER Economics of Transformative AI Conference

[00:04:21 ] Pascual Restrepo’s paper on automation and AGI

[00:05:28 ] Will labor share go to zero after AGI?

[00:43:52 ] Conclusions and updating posteriors

[00:48:24 ] Second claim: Will wages go down after AGI?

[00:50:00 ] The sheep theory of monetary policy

Transcript

[00:00:00 ] Seth: Welcome everyone to the Justified Posteriors Podcast, where we read technology and economics papers and get persuaded by them so you don’t have to.

Welcome to the Justified Posteriors Podcast, the podcast that updates its priors about the economics of AI and technology. I’m Seth Benzell, performing bottleneck tasks every day, in the sense that I’m holding a bottle and a baby by the neck, down at Chapman University in sunny Southern California.

[00:00:40 ] Andrey: I’m Andrey Fradkin, practicing my accessory tasks even before the AGI comes, coming to you from San Francisco, California.

So, Seth, great to be here.

[00:00:53 ] Seth: Yeah, please.

[00:00:54 ] Andrey: Well, what have you been thinking about recently? What have you been [00:01:00 ] contemplating?

[00:01:01 ] Seth: Well, you know, having a baby gets you to think a lot about what’s really important in life and what kind of future we’re leaving to him. If we imagine a hundred years from now, what economy is he gonna have when he’s retired?

Who even knows what such a future would look like? A lot of economists are asking this question, and there was this really cool conference that brought together some favorite friends of the show: an NBER Economics of Transformative AI Conference that forced participants to accept the premise that AGI has been invented.

Okay, now go do the economics of that. And Andrey, I hear that somehow you were able to get the inside scoop.

[00:01:47 ] Andrey: Yes. Um, it was a pleasure to contribute a paper with some co-authors to the conference and to attend. It was really fun to [00:02:00 ] just hear how people are, um, thinking about these things: people I often associate with being very serious, empirical, rigorous researchers, thinking pie-in-the-sky thoughts about transformative AI.

So, yeah, it was a lot of fun. Um, and there were a lot of interesting papers.

[00:02:22 ] Seth: Go ahead. Wait, no. Before you do, I’m not gonna let you off the hook, Andrey, because I have to say, just before we started the show, you did not present all of the conversation at the seminars as a hundred percent fun and enlightening; rather, you found some of the debate a little bit frustrating.

Why? Why is that?

[00:02:39 ] Andrey: Well, I mean, you know, dear listeners, I hope we’re not guilty of this, but I do find a lot of AI conversation to be a little clichéd and hackneyed at this point. Right. It’s kind of surprising how little [00:03:00 ] new stuff can be said. If you’ve read some science fiction books, you kind of know the potential outcomes.

Um, and so, you know, it’s a question of what we as a community of economists can offer that’s useful or new. And I do think we can offer something; it’s just very easy to fall into these clichés or well-trodden paths.

[00:03:20 ] Seth: What’s the meaning of life, Andrey? Will life have meaning after the robot takes my job? Will my AI girlfriend really fulfill me?

Why do we think economists would be good at answering those questions?

[00:03:34 ] Andrey: Yeah, it’s a great question, Seth. I’m not sure. Um,

[00:03:39 ] Seth: I think it’s because they’re the last respected kind of technocrat. Obviously all technocrats are hated, but if anybody’s allowed to have an opinion about whether your anime cat-girl waifu AI companion is truly fulfilling, we’re the only remaining source of authority.

[00:03:57 ] Andrey: Well, you know,

[00:03:57 ] Seth: unfortunately,

[00:03:58 ] Andrey: I think it’s a [00:04:00 ] common thing to speculate about which profession will be automated last, and certainly Marc Andreessen believes that it is venture capitalists. So

[00:04:11 ] Seth: Fair enough. Narcissism.

[00:04:13 ] Andrey: I’ll leave it as an exercise to the listener what economists think.

[00:04:21 ] Seth: So let’s talk about whether humans will be essential in the long run, because the paper that caught my eye when I was looking at the list of seminar topics was by a friend of the show. I hope he considers himself a friend of the show, because I love this guy.

Pascual Restrepo, a professor of economics and AI at Yale University. I had the honor of having him on my dissertation committee, and he was definitely a role model when I was a young gun trying to think about the macro of AI before everyone on earth was thinking about the macro of AI. [00:05:00 ] And so it’s a real honor for the show to take on one of his papers, in which he tries to answer: okay, transformative AI shows up, so what are the long-term dynamics?

That’s a departure from where he likes to be. He likes to live in near-future, “we automate another 10% of tasks” land. Right. So I was excited to take this on. Andrey, do you wanna introduce some of the questions it asks us to consider?

[00:05:28 ] Andrey: Yeah. So, Pascual presents a very stylized model of the macroeconomy